TITAA #34: Latent and/or Beautiful Bodies

Artists' Models - Scary Cults - Stable Hacking - So Many Game Links

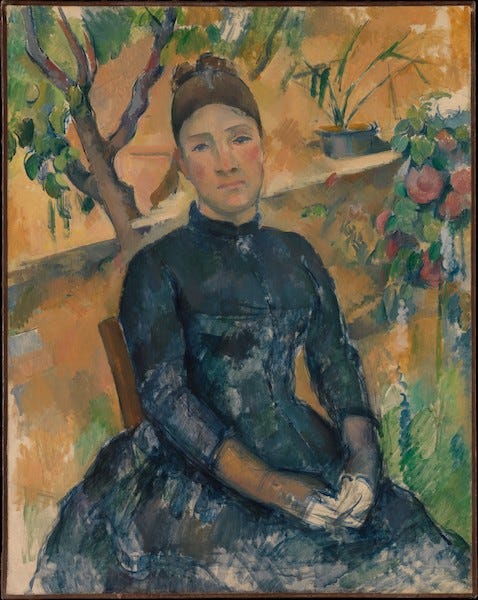

There’s an exhibit at the Tate Modern on Cézanne, who is considered by most to be “post-impressionist” and a strong influence on the modernists who followed, such as Picasso, Braque, and Matisse. He’s kind of an artist’s artist. I admit to not being a big fan myself. The discourse is interesting, though, as is the discourse about his wife.

The Guardian recap interested me because of how these artists interpreted his work:

But Cézanne dwells like a fetishist on individual things that he grasps with a mental fist. This twists the ancient genre of the still life into something much more atomised and brutal. It led within a few years of his death to Marcel Duchamp inventing the “readymade”, asserting that an artist doesn’t have to paint or sculpt but can simply “choose” something, just as Cézanne chose his pieces of fruit.

Fun art fact: in French, “still life” is “dead nature” (“nature morte”).

The article doesn’t touch on the many portraits of his wife, who was apparently his most frequent subject after himself (29 paintings!). Her name was Hortense Fiquet, and I accidentally edited it out of the email version I sent, which is appalling. Cézanne seems to have dwelt on her like a fetishist, despite saying terrible things about her. English Wikipedia is nasty about her, calling her “high maintenance,” yet that seems to be the accepted narrative. Even the fact that he paints her unsmiling gets tallied against her (n.b., he paints no one smiling).

Cézanne was supported by his rich father as he pursued his career. She was an artist’s model when he met her; they had a son together and lived unmarried for 20 years before finally marrying, then separated soon after. Everyone rejected her as his partner, before and after, and he disinherited her on his deathbed. The story is that she was fashion-obsessed and was at a dress shop appointment as he died. Yet despite their separation, he kept painting her, which seems to me to say something about her patience. The painting above was made well after their separation.

Zola immortalized them in a novel about an unstable artist who is abominable to his wife and model. This fab article about the invisible interior of Madame Cézanne offers a brutal anecdote:

Near the end of Zola’s L’Œuvre, the fictional artist struggles to paint his masterpiece—a picture of Paris centered upon a grand nude for which Christine models. When the artist fails to achieve his vision he turns viciously upon Christine and blames the painting’s failure upon her failure to model as the grand nude. Time takes its toll on Christine. Her sagging flesh, the artist argues, sabotages his success as a painter. “Not very lovely, is it?” the painter remarks after directing Christine to look in a mirror.

If you liked this side trip into artists’ model wives, modernism, and bodies, I highly recommend the poem at the end of this newsletter. And now back to the tech.

AI Art Tools

It’s madness out there; it’s impossible to keep up! Certainly impossible if you also have a job or two 🤔. Stable Diffusion text2image, thanks to the open-sourcing of the model and an API, still ruled this month’s news in terms of the creative applications and ecosystem growing around it. But the past few days also saw new text2THING model releases (see below; I wish for in-page links).

Various other text2image platforms supplied minor news: OpenAI’s DALLE-2 no longer has a waitlist and now supports out-painting without needing back-and-forth with image editors.

Get Me Into Stable Image Creation, You Say!

Dreamstudio has added in- and out-painting and init images to their web UI. You pay, and they provide the web interface for making things, plus an API for generating from your own app interface. APIs let us all get more creative.

The Photoshop plugin from helpful Christian Cantrell is free (skip the other $89 one) and now supports in-painting and image2image. To use it, you need to enter your Dreamstudio API key (which you ARE paying for), because Dreamstudio provides the server-side image generation.

✨ You want free? And can handle some code tools? Then there is the complete Stable Diffusion Web UI from Automatic1111. They just keep plugging in new functionality; it’s amazing. I highly recommend the detailed feature list with the pictures, and the custom scripts page is “wow,” too. This is free, but using it all means coding a little and putting up with generation wait times that depend on your platform. You can use a Colab notebook or install it on your own hardware. The UI is built with Gradio.

You’re Into Those Latent / Interpolation Videos…

Deforum Stable Diffusion, a notebook environment that has added animation functionality (generate images, turn them into a movie). You may remember this kind of thing from Disco Diffusion. You can try the animation creation for a limited number of free attempts at Replicate.

Stable Diffusion Videos, code from nateraw, to make videos of interpolation between prompts in latent space.

Want to travel the latent space in Keras? As of this morning, there’s code/tutorial for it.
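Since both of the video tools above rely on the same trick, here is roughly what it boils down to: a latent walk renders one frame per step by blending two starting latents (and often two prompt embeddings) and denoising each blend. Below is a minimal sketch of spherical linear interpolation (slerp), which is commonly used for Gaussian noise latents because a straight linear blend shrinks the vector's length midway. It is not the code from either project; plain Python lists stand in for the real tensors (Stable Diffusion's 512×512 latents are 4×64×64), and `slerp` and `interpolation_frames` are hypothetical names.

```python
import math
import random


def slerp(t, v0, v1, dot_threshold=0.9995):
    """Spherical linear interpolation between vectors v0 and v1 at fraction t in [0, 1]."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two vectors.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    if abs(dot) > dot_threshold:
        # Nearly (anti)parallel: the angle formula is unstable, so fall back to a linear blend.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


def interpolation_frames(v0, v1, num_frames):
    """One latent per video frame, walking from v0 to v1 inclusive."""
    return [slerp(i / (num_frames - 1), v0, v1) for i in range(num_frames)]


# Two random Gaussian "noise latents" (tiny stand-ins for real 4x64x64 tensors).
random.seed(0)
dim = 16
a = [random.gauss(0, 1) for _ in range(dim)]
b = [random.gauss(0, 1) for _ in range(dim)]
frames = interpolation_frames(a, b, num_frames=8)
# In a real pipeline, each frame's latent would now be denoised and decoded into an image.
```

At the midpoint of two orthogonal unit vectors, slerp returns a vector of length 1, whereas plain averaging would give a length of about 0.707; that norm preservation is the usual reason to prefer it for noise latents.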

You’re Into Tuning “Objects” and “Styles” in SD

Textual Inversion for Stable Diffusion: there are now over 588 public, community-generated concepts shared on Huggingface. You can train an object or a style. I’m entertained that “Midjourney Style” is one of the styles; think about how meta that is! Max Woolf had a lot of success with Ugly Sonic, it seems.

That related Google paper I mentioned last month, “Dreambooth,” has been re-implemented and hooked up with SD as the model. It’s called “Dreambooth for Stable Diffusion,” work by Shivam Shrirao. It’s also in the HF diffusers codebase and has a community examples page too.

With both my own “Dreambooth” efforts and the textual inversion concepts on HF, I am a bit flummoxed. I struggle to get both the object and the scene I’m describing to appear and integrate. I can get nowhere near the level of rowdy, bearded bro success exhibited in the video from Corridor Crew.

You’re Into Searching/Browsing AI Art… And Datasets

Lexica, a search engine for Stable Diffusion prompts and images, also works with real images to find similar generated images. There is also now an API, although it only returns 50 hits per search. Oddly, they have raised seed money, but cool?

Krea AI’s CLIP-based prompt search code is pretty cool (semantic search using CLIP-ONNX, knn, autofaiss). They also make available their open-prompts dataset of 10 million prompt-image pairs.
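For anyone wondering what “semantic search using CLIP” boils down to: prompts (or images) are embedded as vectors, the query is embedded the same way, and the nearest neighbors by cosine similarity come back as results. Below is a minimal brute-force sketch in plain Python. Krea's actual code uses CLIP-ONNX for the embeddings and autofaiss to build an approximate nearest-neighbor index, neither of which appears here; the three-dimensional vectors are made-up stand-ins for real CLIP embeddings, and `knn_search` is a hypothetical helper.

```python
import heapq
import math


def normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def knn_search(query_emb, index, k=3):
    """Return the k (score, prompt) pairs most cosine-similar to the query embedding."""
    q = normalize(query_emb)
    scored = ((sum(a * b for a, b in zip(q, emb)), prompt) for prompt, emb in index)
    return heapq.nlargest(k, scored)


# Hypothetical toy index: real CLIP embeddings have hundreds of dimensions.
raw = {
    "a castle on a hill at sunset": [0.9, 0.1, 0.0],
    "portrait of a cat in a spacesuit": [0.0, 0.8, 0.6],
    "medieval fortress, golden hour": [0.8, 0.2, 0.1],
    "astronaut kitten, digital art": [0.1, 0.7, 0.7],
}
index = [(prompt, normalize(emb)) for prompt, emb in raw.items()]

# A made-up query vector that points in the "castle" direction.
results = knn_search([0.85, 0.15, 0.05], index, k=2)
for score, prompt in results:
    print(f"{score:.3f}  {prompt}")
```

Brute force is fine for a few thousand items; at the scale of a 10-million-pair dataset, an approximate index like the ones autofaiss builds is what keeps queries fast.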

Generrated, a dataset of DALLE-2 prompts and images.

You Want Prompt Help and Enjoy Text Gen, Videos, Stories…

Sharon Zhou published her pipeline code for doing GPT-3 + Stable Diffusion story generation at Long Stable Diffusion. There is a Stories by AI substack and podcast using these tricks.

Prompt Parrot, from Stephen Young, is a notebook that uses GPT-2 so you can use “a list of your prompts, generate prompts in your style, and synthesize wonderful surreal images!” And a pre-trained GPT-2 model for prompt generation already lives on HuggingFace at MagicPrompt (thanks to Gustavosta; there is a dataset too).

Fabian Stelzer is making interfaces to GPT-3 and diffusion generation in Google sheets. This is a cool one he’s calling HOLO Sheets. You need to plug in API keys (OpenAI and Replicate). Points for making UIs in whatever tool you can get working!

You Like 3D Stuff….

Tiles / textures: Dream Textures: Blender plugin from Carson Katri. That cool video clip of the orange mapped onto spheres from @Kdawg5000. If you want videos from Blender, here is micwalk’s blender-export-diffusion: Export blender camera animations to Deforum Diffusion notebook format. Here’s a tile material maker on Replicate from Tom Moore.

Text2Light: High res panorama generator that can be used in 3D, with editable lighting (thanks to Nicolas Barradeau for the link). I know what I’m doing next week.

Misc Hackery Fun!

⚡️ Performance-wise, François Chollet and Divam Gupta say the Keras version of Stable Diffusion is SOTA for speed: “~10s generation time per image (512x512) on default Colab GPU without drop in quality.”

🔎 Enhancing local detail by mosaicing, a script plugin by Pfaeff, for the stable diffusion web ui by Automatic1111.

🎨 Want to do in-painting with automatic object recognition instead of drawing a mask? (“Computer, replace the tie with a bandana.”) Here’s a CLIP-Seg-based plugin from ThereforeGames for the Automatic1111 Stable Diffusion web UI.

🖼 Or want to do out-painting on an infinite canvas? Stable-diffusion-infinity from Lnyan.

There are new, bigger, open-source CLIP models from LAION/Stability: ViT-L/14, ViT-H/14 and ViT-g/14.

An article on fine-tuning Stable Diffusion, for the Pokémon fans, from Justin Pinkney at Lambda Labs.

A Few Apps and Articles

Uses of the new tech: it’s impossible to curate them along with the tech links; I’m already going crazy and have work, too. 🧵 I suggest you check out Daniel Eckler’s Twitter thread of links to video examples.

👗A few random design explorations that I am enjoying: a friend is using trouser.ai for her imaginary ballgowns, and edgeofdreaming is doing amazing fantasy jewelry concept design (both on IG).

🏬 @Paultrillo is using DALLE-2 outpainting and runwayML to do animation videos of architecture modifications (IG).

🖼 If you liked @karenxcheng’s AR filter of paintings with AI-generated art, her explanation of how she did it is here. (They used OpenAI’s DALLE-2 for in- and out-painting. And it took 7 artists a month.)

🎥 Article about Fabian Stelzer’s SF movie in progress, made using Midjourney. Love the pics.

🔬The Loab “AI cryptid” story (discovered by @supercomposite) is one of my favorite accounts of weird things surfacing in the latent space… And Chuck Tingle’s book Loab Finds Love (produced in like 48 hours?) is just a fantastic after-dinner digestif. Perfection.

Copyright news: "Artist receives first known US copyright registration for generative AI art | Ars Technica," for a graphic novel created in part with Midjourney. However, note there is obviously significant work “around the AI” in editing, writing, design, layout, etc.

⛏ @Advadnoun’s perspective on text2image haters: “It’s About the Jobs.”

Cutting Edge / In the Works / OMG Relax, Everyone

Video: Make-A-Video from Meta, a paper just released. The Energizer bunny Simon Willison has already investigated the training data used here (10 million preview video clips scraped from Shutterstock). (Also this morning: a good article from Waxy on how data collection for these models has been a bit shielded by research orgs.) Hacker god lucidrains is already on recreating it. Then we heard that Phenaki, under review, is also a Thing and can do longer videos in a kind of story format. Wow.

RunwayML has a button on their home page offering waitlist signup for Text2Video Early Access, btw.

3D: Good, fast 3D results are all we need next! (Don’t @ me, I said good and fast.) And oh look, right after I typed that a few days ago (aka, AI months ago), we learned about DreamFusion, a text-to-3D effort from Google. No code etc., and it may not be fast, but someone will take care of that. Meanwhile, the code for GET3D has been released.

👂Audio: And then we also got AudioGen, generating audio clips from text descriptions like “a woman crying alone while eating salad.” Samples here.

ProcGen Art Experiences

A Number from the Ghost, an abstract meditative experience with colors and sound.

Night Drive from James Stanley, via hrbrmstr’s substack newsletter. Wow, the minimalism, let’s get back to that.

Toxi’s rasterize-blend demo is entrancing and relaxing.

Theatre.js for javascript animation design with three.js.

These Pages Fall Like Ash, a mixed media AR/print experience, by Tom Abba and Duncan Speakman and some famous guest writers. “These Pages Fall Like Ash is an immersive story told across time, place and the pages of two books. By leaving your home and exploring the streets and places around you, you bring books and story together to create an experience that is unique to you but also shared with thousands of others.”

I don’t really like embedding tweets, but this is so cute:

NLP & Narrative Tools/Papers

spaCy’s experimental coreference component! Very exciting. I have a lot of experience labeling coreference relations by hand 🙃. More on this in future months, I hope.

I played with Rubrix for some big-data NLP last month, enjoying some of the Elasticsearch-powered weak supervision functionality.

Scikit-learn: The sleep-deprived but still productive Vincent Warmerdam released embetter, which implements scikit-learn compatible embeddings for computer vision and text pipelines.

OpenAI’s open-sourced Whisper is amazing at speech recognition. I can’t wait to try it on something significant.

📙 Greg Kennedy’s always excellent writeup of Nanogenmo, National Novel Generation Month. He’s been kind enough to include my increasingly data-scienc-y efforts over the last few years, and he always finds gems in there that I didn’t look at hard enough. (I’m especially fond of the game-related ones.)

You can go talk to a digital character at Character.ai. They are careful to say that the characters are making stuff up, but I still went to look for a book Librarian Linda recommended. (It did not exist.)

“StoryTrans: Non-Parallel Story Author-Style Transfer with Discourse Representations and Content Enhancing,” a paper by Zhu et al. on Chinese stories; their method “learns style-independent discourse representations to capture the content information and enhances content preservation by explicitly incorporating style-specific content keywords.”

“Co-Writing Screenplays and Theatre Scripts with Language Models: An Evaluation by Industry Professionals,” a preprint by Mirowski et al. This is 102 pages long? It is another system that builds the story hierarchically: “By building structural context via prompt chaining, Dramatron can generate coherent scripts and screenplays complete with title, characters, story beats, location descriptions, and dialogue.”

“Every picture tells a story: Image-grounded controllable stylistic story generation,” a paper by Lovenia et al. Hmm.

Misc Data Vis and Data Science

What to Consider When Using Text In Data Visualization, a super article by Lisa Muth. (This is not visualization of textual data, but text as in annotations and labels on the charts.)

Svelte patterns from Reuters Graphics.

DuckDB keeps getting more interesting. Reading postgres tables directly in DuckDB.

NORMCONF! A conference that started from an aggrieved snarkpost by data science wit Vicki Boykis, who is now making it real in December. I’m speaking, and still deciding which of my many turned-out-more-complicated-than-it-sounded projects to talk about.

Games-Related Links

Jacob Garbe’s Authorial Leverage Framework for narrative game design. Really interesting, it discusses the tradeoffs you can make in tool design and production planning for authoring of branching narrative systems; specifically, relative to “the power a tool gives an author to define a quality interactive experience in line with their goals, relative to the tool’s [authoring] complexity.” (My paraphrase of his quote from Chen et al.) I’m mulling over and reading the dissertation and references when I get a moment. It seems there is also a book coming out on this general topic in November.

Loose Ends: A Mixed-Initiative Creative Interface for Playful Storytelling, by Max Kreminski et al. “Loose Ends specifically aims to provide computational support for managing multiple parallel plot threads and bringing these threads to satisfying conclusions—something that has proven difficult in past attempts to facilitate playful mixed-initiative storytelling.” The system tracks goals and actions using a domain-specific logic programming language.

The Nethack Learning Environment Language Wrapper (github). This looks like a lot of fun.

“AI-Generated Games Are Starting To Appear On Steam (And It's Not Going Well),” an article in Kotaku about a game made with Midjourney, which lacks textual-inversion-style object permanence. The developers struggled with “how to generate images of the same person, yet in different poses/settings?” Meanwhile, of course, fans are updating old MS-DOS pixel assets with AI tools (article in Ars Technica by Benj Edwards).

“The Dark Souls of Archaeology: Recording Elden Ring,” a paper by Florence Smith Nicholls and Michael Cook, which was awarded best paper at FDGconf. They recorded player messages and bloodstains, reading the game as a palimpsest of player tracks.

“Nvidia's new modding tools will add ray tracing to almost anything, even Morrowind,” says an article in PCGamer after Nvidia announced RTX Remix. “It lets you import game assets into the RTX Remix at the touch of the button (in compatible games) and converts the assets into commonly used USD (Universal Scene Description). This lets those assets easily be modified or replaced using apps like Unreal Engine, Blender, Nvidia's own Omniverse apps.” If I understand it all rightly, this tool might’ve made the following project easier.

Zelda Breath of the Wild map and StreetView images from nassimsoftware. I love this so much. Here is the “how it was made” video.

How to realize various actions in a one button game, a UX tour-de-button with gifs by ABA Games, via Salman Shurie who is in residence at Google Arts & Culture’s Creative Lab right now.

The Enduring Allure of Choose Your Own Adventure Games, a sweet historical article in The New Yorker by Leslie Jamison.

Related: Jason Shiga’s new CYOA comic Leviathan is being offered on Steam as an actual interactive work, by Andrew Plotkin.

Also related: IFComp (interactive fiction competition) for 2022 has just opened! They want diverse judges and players this year. Play a few games, leave some notes, be a judge!

Books

The highlight of the month was re-reading the first two Locked Tomb books by Tamsyn Muir, so I could read and love and be confused by 💀 Nona the Ninth. Banal, evil death cult leader who wants to be God, but awesome necromantic lesbian swashbuckling.

I made some Gideon and Harrow art in Midjourney while doing it. It seems like earlier models might be better at making a puppy with six legs.

TV

⭐️ A League of Their Own, Amazon Prime — this is as lovely as everyone says. Great period sports story with solid lesbian romance. Also, so good to see Abbi Jacobson “in person” again after watching (and loving) Disenchantment on Netflix.

Under the Banner of Heaven, Hulu — This was pretty grueling as a “true story” look into a fundamentalist Mormon cult murder, but very well done. I sure learned about LDS history. Content warning that should probably keep you far away: horrific animal murder story that traumatized me, brutal death of woman and child, profound repressive sexism and brutal control of women and girls.

I am watching the 💍 Rings of the Dragons 🐉 shows, but am a bit “meh” except for being clear I would never, ever, plan a wedding in Westeros. (Maybe unfair, I am enjoying pieces of both shows.)

Games

I am midway through 🤩 Immortality, the epic new film-clip game by Sam Barlow. It is compelling and cursed. I’m surprised by how much you can follow the plot development of 3 different films while navigating among clip pieces that include read-throughs, interviews, after-parties, staging scenes, etc. And then there’s the creeping of your skin when you start seeing the backstory. There are some UI issues with the clip management, but I kind of don’t care that much? This is a game about artists’ models, too.

The game has been covered by a lot of press, from game mags to mainstream journalism and movie press. Here’s a good sample article in Vulture if you want to learn more.

Playing Red Matter 2 in VR and loving it.

Poem: “The Artist’s Model, ca. 1912”

In 1886 I came apart-- I who had been Mme. Rivière, whole under flowing silk, had sat on the grass, naked, my body an unbroken invitation-- splintered into thousands of particles, a bright rock blasted to smithereens; even my orange skirt dissolved into drops that were not orange. Now they are stacking me like a child's red and blue building blocks, splitting me down the middle, blackening half my face; they tell me the world has changed, haven't I heard, and give me a third eye, a rooster's beak. I ask for my singular name back, but they say in the future only my parts will be known, a gigantic pair of lips, a nipple, slick as candy, and that even those will disappear, white on white or black on black, and you will look for me in the air, in the absence of figure, in space, inside your head, where I started, your own work of art.

On the topic of bodies, try out “beautiful body” in your text2image tool and see what you get. Think about those biases and why it means what it means. In other beautiful body news, Bella Hadid had a dress spraypainted onto her as a fashion tech stunt. Is it the end of fashion design, the end of the garment industry? Do all bodies look good in sprayed on clothing? Discuss (maybe not with me).

I apologize for the length on this, including to myself. I’m thinking about breaking this up into two newsletters, one possibly paid (probably the one with the tech links). It’s just so much to keep up with right now!

All the best and hang in there,

Lynn / @arnicas
