TITAA #36: Skulls and Pentimenti

Skulls Around John Dee - Mastodon - New Models - Image Captions - 3D Pics - Text Gen News - Great TV

I wasn’t sure how I would survive the destruction of Twitter, which has for me been a source of learning, new friendships and gossip with old, clients and work, talk invites, and emotional support during crazy world events. It’s been my primary social site since 2007.

This month has featured a lot of grieving. For Twitter, for the tech industry as a whole, and for other things. But my tentative transition to Mastodon (@arnicas@mstdn.social for now) has been: not too bad! My feed now sparks joy. I still need some tooling to support my OCD link-saving habits, but it’s actually feasible to develop apps for it. You can follow hashtags (try #nlp, #aiart, #gamedev, #folklore…) and lurk on the home pages of different servers. You can even have multiple accounts and run bots. If you need help, there are many guides to moving over, including how to cross-post from Twitter and how to export your Twitter follows and import them on Mastodon. (Here’s one set of tips.) I won’t be cross-posting or RTing on Twitter while Musk is in charge.

One of the moments when I felt it might all be okay was when I saw a post by Richard Littler about the painting above. “When they did an x-ray of Henry Gillard Glindoni's painting of Elizabethan occultist/alchemist John Dee, they discovered that Dee was standing in a circle of human skulls.”

Fab. You can see the original and the X-rays over here:

The painting originally showed Dee standing in a circle of skulls on the floor, stretching from the floor area in front of the Queen (on the left) to the floor near Edward Kelly (on the right). The skulls were at an early stage painted over, but have since become visible. Another pentimento is visible in the tapestry on the right: shelves containing monstrous animals are visible behind it.

I learn from Wikipedia: “A pentimento (plural pentimenti), in painting, is ‘the presence or emergence of earlier images, forms, or strokes that have been changed and painted over.’”


Creative AI Links

There is too much each month! I am still tracking and saving everything, so if you want to hire me to report for you, I can do that. But there are some new newsletters that are link-recapping more frequently, e.g. Ben’s Bites. From now on, I’m sticking to writing up just things I think are awesome and not trying to do the dissertation lit review.

Midjourney v4 is pretty awesome, a leap forward in image composition while perhaps initially being a bit cartoony by default. It requires some new styles of prompting, as evidently does the even newer Stable Diffusion 2. My regularly attempted, difficult “penguin in Venice” prompt output showed up in a lot of those new daily/weekly AI newsletters.

Stable Diffusion 2 features some significant changes, including stronger NSFW filtering, use of an open source CLIP model, speed improvements, higher resolution (768x768) and improved upscaling, and a depth2image model (which uses MiDaS).

SD2 evidently needs a lot of prompt engineering help, including a long string of “negative modifiers” to make humans look right: “Negative: poorly drawn, ugly, tiling, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, blurred, text, watermark, grainy, writing, calligraphy, sign, cut off,” per Emad Mostaque on Twitter. There were a few new articles today on this topic, too (e.g. see Max Woolf’s post).
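If you want to reuse that negative list without retyping it, here is a minimal sketch in Python. The helper name `build_prompt_args` is hypothetical, and the example prompt is just my penguin one. As far as I know, the Stable Diffusion pipelines in Hugging Face diffusers accept a `negative_prompt` argument, so a dictionary like this could be unpacked into a pipeline call, but this code only builds the strings and does not load any model.

```python
# Negative modifiers quoted in the text above (attributed to Emad Mostaque).
NEGATIVE_MODIFIERS = [
    "poorly drawn", "ugly", "tiling", "out of frame", "mutation", "mutated",
    "extra limbs", "extra legs", "extra arms", "disfigured", "deformed",
    "cross-eye", "body out of frame", "blurry", "bad art", "bad anatomy",
    "blurred", "text", "watermark", "grainy", "writing", "calligraphy",
    "sign", "cut off",
]


def build_prompt_args(prompt, extra_negatives=()):
    """Return a dict with the prompt and a comma-joined negative prompt.

    Hypothetical helper (not from any library): case-insensitive duplicates
    are dropped while keeping first-seen order.
    """
    seen = set()
    negatives = []
    for term in list(NEGATIVE_MODIFIERS) + list(extra_negatives):
        cleaned = term.strip()
        key = cleaned.lower()
        if key and key not in seen:
            seen.add(key)
            negatives.append(cleaned)
    return {"prompt": prompt, "negative_prompt": ", ".join(negatives)}


args = build_prompt_args(
    "a penguin in Venice",
    extra_negatives=["Blurry", "low contrast"],  # "Blurry" is a duplicate
)
print(args["negative_prompt"])
```

Keeping the list in one place makes it easier to experiment with dropping modifiers one at a time to see which ones actually matter.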

3D Images

I bring up the depth2image model and MiDaS because some of my favorite pre-SD2 AI-generated artwork has featured very light 3D animations, such as this diorama in the style of van Gogh by TomLikesRobots:

See also adorable animated paper quill art by Carol Ann (Twitter), and the thread of outstanding animated AI generated synthesizers (plus generated music) from Fabian Stelzer on Twitter; and 🥰 DO NOT MISS this impeccable process video of making this animated creature thing, by mrk.ism on IG:

Related work: “3D Photography using Context-aware Layered Depth Inpainting,” with code. Turn a photo into a short 3D clip.

Also “Infinite Nature: Generating 3D Flythroughs from Still Photos,” Google Research.

Caption Fun, or “Image2Text”

Here’s an image I generated in Midjourney v4, illustrating a Dwarf Fortress bug report: “Dwarves do not haul skulls and cartilage to the stockpile.” (Only that as the prompt.)

I’m extremely interested in the artistic opportunity of captioning, or more generally image2text, which I think we’re only just starting to glimpse. So let’s try captioning that with a few recent tools.

“CapDec: Text-Only Training for Image Captioning using Noise-Injected CLIP,” by Nukrai et al (EMNLP paper). This is an attempt to train styled captions, like “humorous” or “romantic.” It’s not super convincing, and seems to lead to hallucinating off-topic captions. From their Shakespeare model (no longer linked from their colab), I got:

Exeunt all but CARDINAL WOLSEY and CARDINAL CAMPEIUS.

Which is a line that does not seem to occur in Henry VIII, I’ll give them that! I looked.

There is also another project that aims to generate sarcastic captions: “How to Describe Images in a More Funny Way? Towards a Modular Approach to Cross-Modal Sarcasm Generation,” by Ruan et al. (Found via my own text-gen papers search that I still run once a week manually, like an animal. I have not tried it on my skulls.)

Versatile Diffusion, “the first unified multi-flow multimodal diffusion framework,” struggled a bit with this content, in an “eeeeeee” bias way and also uncleaned raw output? (seed 100)

buried tomb of dolls, including a tomb of dead veterans and <PAD>ies, statues of a statue of a statue, sitting near a fireplace or standing near a store.a group of black people buried by the tombstones, some statues with the skull, a black <PAD> dolls on the tomb of a statue

Trying out the new Clip Interrogator 2 Colab for the open source VIT-H/14 by Pharmapsychotic, the fast method is pretty into the skulls:

Best mode: “a painting of a bunch of skulls in a cave, by Gabrijel Jurkić, greg hildebrandt highly detailed, anton semenov, apothecary, very detailed!!”

Classic: “a painting of a bunch of skulls in a cave, digital art by Gabrijel Jurkić, Artstation, digital art, ossuary, ancient catacombs, skulls and skeletons”

Fast: “a painting of a bunch of skulls in a cave, ossuary, ancient catacombs, skulls and skeletons, skulls around, skulls, cementary of skulls, all skeletons, by Gabrijel Jurkić, catacombs, skulls are lying underneath, skulls and bones, highly detailed dark art, old - school dark fantasy art, macabre art”

Creative AI Papers With Great Gifs

Vectorfusion: Text to SVG, by Jain et al., a topic I’m always interested in… because then you can do things with the SVG. Not just scale, but manipulate in other code ways. They have some very reasonable output in a number of styles - sketch lines only, pixel art, and cartoony. There’s a gallery you can search for representative examples, but no code yet.

Powderworld, by Frans and Isola. Using particles and some defined physics rules in a reinforcement learning approach to see what happens. There’s a cute explorable tool in the browser. It feels very game-like, like all the RL papers do (because they are).

Games and AI

“The Generative AI Revolution in Games,” on the Andreessen Horowitz site, with reference to the on-going posts by Emmanuel_2m about developing game assets quickly with text2image and image prompting. He has a startup effort at Scenario.gg.

“Generating Video Game Cut Scenes in the Style of Human Directors,” Evin et al. This is about setting up the scene direction.

Cicero, Meta’s Diplomacy-playing model. Complete with lying during dialogue as a tactic. This is an amazing and slightly scary accomplishment. They point out it is ruthless and goal-driven, perhaps like the AI ship in my favorite book this month. But also, obviously: “Alternatively, imagine a video game in which the non player characters (NPCs) could plan and converse like people do — understanding your motivations and adapting the conversation accordingly — to help you on your quest of storming the castle.”

“Inherently Explainable Reinforcement Learning in Natural Language” by Peng et al. (a Mark Riedl joint). More using interactive fiction games to study text reinforcement learning, this time using “an extracted symbolic knowledge graph-based state representation” for explainability of its actions. For instance, “an egg is interactive.”

“Make-A-Story: Visual Memory Conditioned Consistent Story Generation,” by Rahman et al, on the goal of generating “a sequence of illustrative image frames with coherent semantics given a sequence of sentences.” “Sentence-conditioned soft attention over the memories enables effective reference resolution and learns to maintain scene and actor consistency when needed.” I can’t find code yet. Not games, but not sure where to put it!

Misc Arty Stuff

Literature Clock, by Johs Enevoldsen, based on earlier work by Jaap Meijers.

Real-world in-painting videos by Howard Lee (IG). With paint, physically.

Liza Daly’s Nanogenmo 2022 entry “A Letter Groove,” which makes book cutouts. Gorgeous, but tricky to use to good effect. (I question how the 50K words are counted, though :)

🚀 Floor 796. “Floor796 is an ever-expanding animation scene showing the life of the 796th floor of the huge space station! The goal of the project is to create as huge animation as possible, with many references to movies, games, anime and memes. All scenes are drawn in a special online editor right in the browser by one person, as a hobby.”

Inconvergent has been using graph data structures for his recent art generation. I was interested in the constraint solver part of this story.

A long visual read on Octavia Butler in the NYT (paywalled).

Shaders: Patricio Gonzalez Vivo has been working hard on Lygia, the shader tool library, to make it work in many, many tools.

There are 93 articles in Loig Allain’s Medium collection on Procedural Generation. (My article destroying “Plotto” is one.)

Text Generation Developments

"GENIUS: Sketch-based Language Model Pre-training via Extreme and Selective Masking for Text Generation and Augmentation" by Guo et al will allow you to generate text from masked prompts, with more than one mask. This is a kind of insertion editing & generation capability. Other models can do it, but I’ve been waiting for a good creative version of it. The results out of the box are not as tight as I’d like, maybe due to the training set.

With my masked input about the Elf King shown below:

Input: <mask>The Elf King<mask>castle<mask>the Alps

Output: This is the Elf King’s castle. It is a castle in the middle of nowhere. It has no roads. It does not have any bridges. It doesn’t even have a bridge. It’s a castle. There are no tunnels. No bridges. There is no way to get to it. This is the castle ofthe Alps.
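For anyone who wants to script these masked inputs, here is a small Python sketch of how a multi-mask "sketch" string like the one above can be assembled from fragments. The function names are hypothetical, and the format simply mirrors my input (a `<mask>` token before each fixed fragment); the GENIUS model itself is not loaded or called here.

```python
MASK = "<mask>"


def make_sketch(fragments):
    """Join text fragments into one string with a mask token before each."""
    cleaned = [frag.strip() for frag in fragments if frag.strip()]
    return "".join(MASK + frag for frag in cleaned)


def split_sketch(sketch):
    """Recover the fixed fragments that the model is asked to write around."""
    return [part for part in sketch.split(MASK) if part]


sketch = make_sketch(["The Elf King", "castle", "the Alps"])
print(sketch)
print(split_sketch(sketch))
```

The fragments are the parts that should survive into the output, and each mask marks a gap the model is free to fill, which is what makes this feel like insertion editing rather than plain continuation.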

“RankGen: Improving Text Generation with Large Ranking Models” (code), by Krishna et al, claims to be even better than contrastive search (see HF blog post on that, and other recent proposals). One of the issues with it is that it’s a bit slow, because it needs to generate a bunch of results to rank them.
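The "generate a bunch, then rank" idea is easy to sketch in Python. This is a toy illustration only: the `embed` function is a bag-of-words stand-in I made up for a learned encoder, the candidates are hand-written rather than sampled from a language model, and none of this is RankGen's actual code or API.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a stand-in for a learned encoder."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank(prefix, candidates):
    """Score every candidate against the prefix and sort best-first."""
    prefix_vec = embed(prefix)
    scored = [(cosine(prefix_vec, embed(c)), c) for c in candidates]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


prefix = "the elf king rode back to his castle in the alps"
# In a real setup these would be several sampled continuations.
candidates = [
    "the stock market closed higher today",
    "the castle gates opened and the elf king entered",
    "snow fell on the alps",
]

ranked = rerank(prefix, candidates)
for score, text in ranked:
    print(f"{score:.3f}  {text}")
```

The slowness is visible in the structure: every candidate has to be generated and scored before anything is returned, so the cost grows with the number of samples.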

There is also this crowd sampling approach.

I realize OpenAI just released their davinci-003; I have become dismayed by relying primarily on prompt engineering, but we’ll see.

Automatic Prompt Engineer code for “Large Language Models Are Human-Level Prompt Engineers,” Zhou et al. There’s a colab too.

A 30 minute overview video on Creative Generative Language by Mark Riedl, for NeurIPS 2022.

“Narrative thinking lingers in spontaneous thought” — a lovely paper in Nature by Bellana et al. with some interesting methods and charts. “If an individual is able to extract, represent and immerse themselves in the world of a story, it should linger in mind, regardless of the text’s objective coherence.” (It does.)

Other Tools and Links: Search, Knowledge, Maps

Metaphor, a neural search engine for link prediction. This is actually very useful to me so far. It doesn’t have the highfalutin goals of the now-offline Galactica; it’s aimed more at link retrieval and is, I think, well-targeted. I’ve used it to good effect on some technical NLP searches.

Fin, a node.js/js lib for NLP.

Kogito, a Python knowledge inference library by Ismayilzada and Bosselut that uses spaCy and models like COMET under the hood: “We also include helper functions for converting natural language texts into a format ingestible by knowledge models - intermediate pipeline stages such as knowledge head extraction from text, heuristic and model-based knowledge head-relation matching, and an ability to define and use custom knowledge relations.” I like the looks of this! Great sample code!

Related: A Decade of Knowledge Graphs in Natural Language Processing: A Survey.

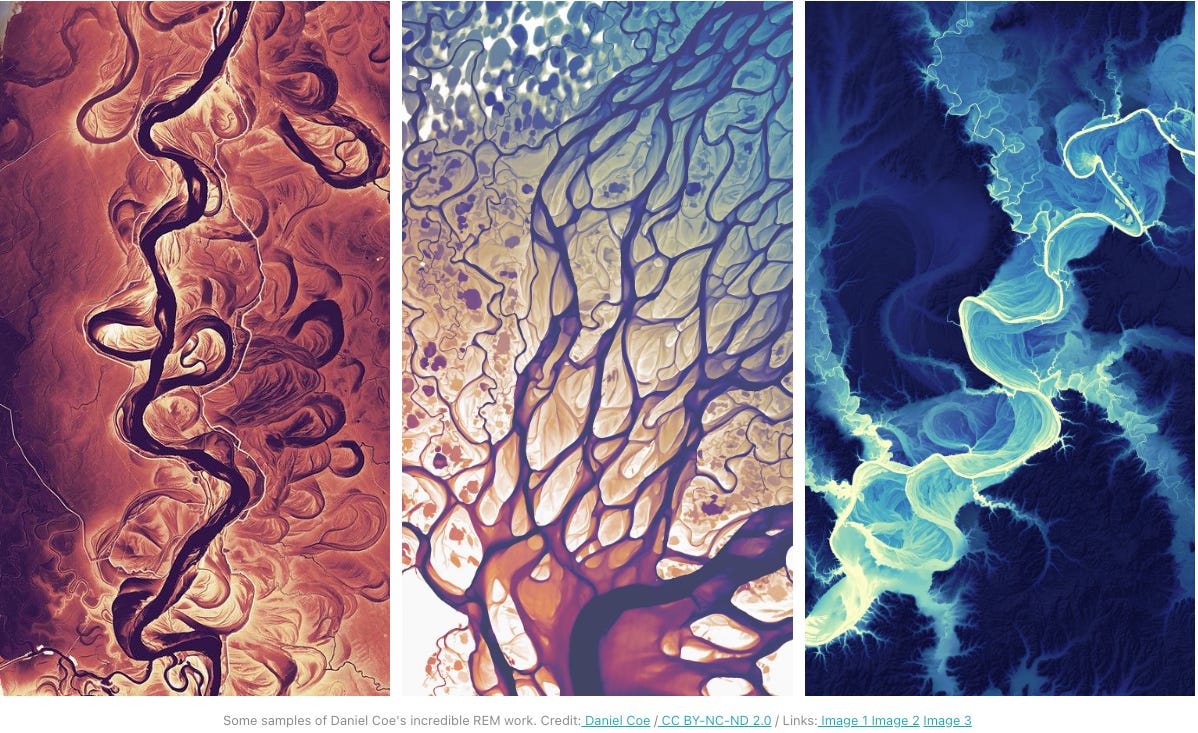

Visualizing Rivers and Floodplains with USGS Data by Jon Keegan. (There were a ton of amazing maps this month, I just can’t…)

The #rayshader rstats tag on Mastodon has been ridiculously cool this month.

Books

Drunk On All Your Strange New Words, by Eddie Robson (sf). This is fun and weird… aliens have an embassy in NY, and Lydia is a translator, using some kind of telepathic method that makes her feel tipsy the longer she uses it. Her primary alien boss is found dead, and she can’t remember what happened that night (because, wasted). She launches a very strange investigation. I would not say the mystery itself is fantastic, but the social media-driven world of the future is well-observed.

⭐ The Mountain in the Sea, by Ray Nayler (sf). This is on everyone’s must-read list, for good reason. A fabulous melange of Gibson-flavored musing on intelligence, including the first android deemed conscious, a researcher who studies octopus intelligence, and a terrifying AI-run slave fishing ship with human crew and imprisoned workers. A marine biologist is summoned to an isolated island to investigate murders and evidence of a possible underwater intelligence. Really, go read. 🐙

TV

So much good watching this month, honestly.

⭐ The English, Amazon Prime. This is really extraordinary, visually gorgeous, great acting and writing and music. An English woman in a black veil (so you know it will be sad) tells us of her trip to America to avenge a death. We get a number of non-linear flashbacks and a lot of characters before the pieces fall into dreadful place. There are a lot of horrible, entitled white men with guns and racial atrocities, CW.

⭐ Andor, Disney+. On internet pressure, I subscribed to watch this. It is good sf — the birth of a rebel, as Cassian Andor goes from heist plot to jail in a gameified labor prison. Meanwhile, intelligence ops in the Empire are tracking the start of rebellion, and an art dealer and politician (Mon Mothma) on Coruscant are living double lives. Well done.

Warrior Nun s2, Netflix. Not really as good as s1, I feel, and the final ep went off the rails a bit. But still good fight scenes, good friendships, interesting cult worship of false angels. CW, gore.

The Peripheral, Amazon Prime. Also pretty solid sf, but for me a bit ruined by the boys-with-guns vibe. Reminds me at times of Westworld’s random fight scenes.

Handmaid’s Tale s4&5, Hulu. I caught up, and I think it’s a very good look at PTSD and revenge and forgiveness. The “religious” justification of abuse and sexual enslavement in Gilead seems to me necessary watching, actually. This show does not present easy or simple answers, villains, or heroes. CW: the whole show.

Derry Girls s3, Netflix. I mention this primarily because the final episode was outstanding, and made me cry. The season start is a bit rocky; it gets better, and then — that finale. I noticed quite a contrast to the recent American election that I was watching at the same time.

Games

Beacon Pines. This is cute, a kids’ game in feel and narration— you’re in an interactive book about a bunch of animal friends who discover creepy capitalist behavior at an old factory outside town.

Pentiment. I have only just started this well-reviewed, historical narrative game set in 1518. And now I know what the title means! It’s very beautiful, animated like a moving medieval manuscript. With admirable attention to detail, there are little glossary references you can click to learn from (see below). Depending on what you choose as your character’s background, you get different choices in the dialogue options (marked by relevant icons).

VR: Red Matter 2, I finally finished it! The new drones to shoot and automated defenses add a bunch of action-game mechanics to the story which is not very deep. Not to mention the platformery jet pack bits. I did enjoy it but solving some of those action sequences took time.

A Poem: “What the Silence Says”

I know you think you already know but—

Wait

Longer than that.

even longer than that.

By Marie Howe, via Devin Kelly’s newsletter.

The Guardian’s piece on “50 Poems to Boost Your Mood” was nice.

Before I wrap, I want to give a profound “thank you” shout-out to the friends who have upgraded to paid. It was a huge morale boost that you did. We’re all surrounded by people trying to figure out how to make some money doing what they love, and/or we are them too, and we’re taking time off to do these things; meanwhile we’re all tired and broke and need a long holiday break. Hang in there, play some games, and say hi on Mastodon!

Best, Lynn / arnicas@mstdn.social