TITAA #42.5: Old Haiku Meet New AI

A Multimedia Haiku Project - 3D Maps - Story Models - Typographic Art

This is a special mid-month update, where I finally get to show off an AI and culture project I made with colleague Christine Sugrue at Google Arts & Culture. We present 16 classic haiku illustrated with AI models from Google Research: generated letter shapes, music, and video. In at least two cases, the AI models do things that open source models don’t yet do well. And Chris’s framing experience uses sweet three.js animations and UI easter eggs.

First, a word about the poems. I am very interested in illustrating existing poetry (e.g., see here in #28), and I like haiku for their natural imagery and emotional juxtapositions. As a teen, I was really into Zen Buddhism and read a lot of haiku. When I pitched the project at Google Arts & Culture (with an animated GIF and a Keynote mockup), I didn’t expect them to go for it, but they did! And luckily my more-talented-at-JavaScript friend Chris Sugrue also likes poetry.

With the help of the extensive digital library of the amazing Haiku Foundation, I searched for public domain translations to use, to avoid copyright concerns. I chose a mix of famous historic Japanese poets and poems and some more recent English work (from the 1920s, again for copyright reasons). I was happy to discover Amy Lowell’s haiku and the work of Lewis Grandison Alexander, a Black poet from the Harlem Renaissance. I really love their work. Their haiku are funny and touching and modern in feel, even now. Alexander wrote about haiku here, in a brief emotive article:

“The real value of the Hokku [old name for haiku] is not in what is said but what is suggested. … The emotions [the author] expresses are too subtle for words, and can only be written in the spaces between the lines as in conversations there are thoughts which the conversants can never convey as they cannot be clothed in speech, being too subtle for words.”

Haiku (like all poetry?) are about the latent. In creating the illustrations (visuals, sound, video), I wanted to convey one universe of atmosphere and emotion that I found in these poems. Poems are multivalent, and the space of personal interpretation is large, but I hope this presentation resonates even for the centuries-old texts in translation.

On each poem page, you’ll find a slow building of the text executed via tween animations, a generated video from Phenaki enhanced with open source nature sounds, and links to two key words. Each key word is illustrated by generated letters and generated music, which I’ll discuss below.

For the video content, I tried to generate a “two scene” moment for each poem: a setting and an event, with a transition between them. I used tricks like specifying “documentary style” or even “black and white” for realistic nature scenes; color scenes, especially with animals, veered into the fantastical or cartoony, as you can see in the Google I/O pre-show performance by Dan Deacon. I still had some weird out-takes, like where the model doesn’t entirely understand cause and effect for Bashō’s famous frog splash, or we get a frog photo bomber despite the background frog:

Phenaki can manage transitions using multiple prompts, like “pan over a pond at sunset,” “zoom in on a willow tree blowing in the wind at night, stars falling.” Or “a thunderstorm with lightning” followed by “poppies blowing in a field in the wind.” The open source models don’t seem to do this yet, and people have to construct their videos as separate gif-like animations strung together. The videos I used weren’t edited apart from upscaling and adding some noise filters.

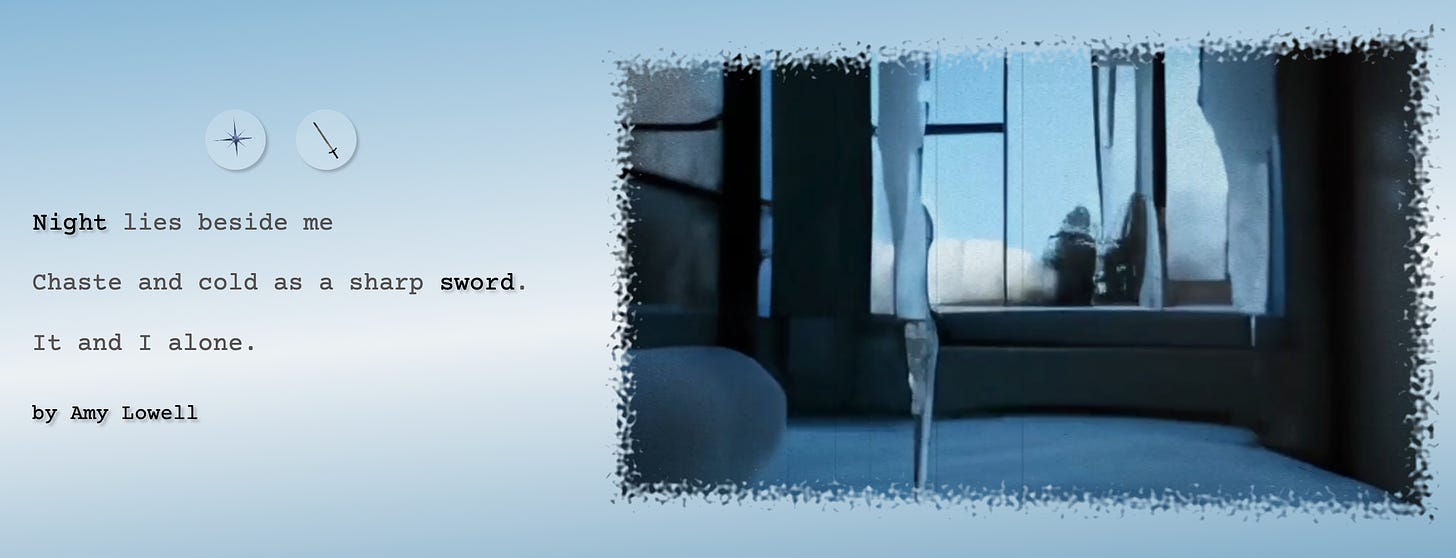

Generation for design requires a lot of curation, of course, and the famous morning glory-covered well haiku by Buddhist nun Chiyo-ni was tough to get. A lack of footage of wells, maybe? For some, my videos had to become more metaphoric, like for Amy Lowell’s beautiful poem below. Attempts at actual swords rendered cartoon ninja arms!

If you click on either the animated words or the round button links over the poems, you’re taken into a scene with animated AI-generated lettering. These were produced by prompting letter-by-letter in a research model. For instance, the word “sword” made with swords:

The prompt for these letters was something like, “A letter <x> made of swords on a white background.” (I cropped and removed the background by hand.) The model was good enough to be able to do “lowercase” as well, as you can see in “pond” — here I asked for more complicated contents, like “a lowercase letter n made of water and lily pads.”
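As a rough illustration of the letter-by-letter approach, here is a minimal Python sketch that only builds the prompt strings, one per letter. The function name `letter_prompts` and its parameters are made up for this example, the template is an approximation of the wording quoted above, and no image model is called (the research model isn’t public).

```python
def letter_prompts(word, material, lowercase=False):
    """Build one text prompt per letter of `word`.

    Hypothetical helper: the template approximates the prompts quoted in
    the article; it is not the exact wording used for the project.
    """
    prompts = []
    for ch in word:
        if not ch.isalpha():
            continue  # skip spaces, apostrophes, and other non-letters
        if lowercase:
            letter, case = ch.lower(), "lowercase "
        else:
            letter, case = ch.upper(), ""
        prompts.append(
            f"A {case}letter {letter} made of {material} on a white background."
        )
    return prompts


if __name__ == "__main__":
    for prompt in letter_prompts("sword", "swords"):
        print(prompt)
    for prompt in letter_prompts("pond", "water and lily pads", lowercase=True):
        print(prompt)
```

Each prompt would then be sent to an image model separately, with the best result per letter picked, cropped, and assembled into the word by hand.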

I was sometimes charmed by the ability of the model to merge concepts, like these last-minute water-frogs I made because “water” letters were too boring—check out the froggy arm and eyes on the “w”! The prompt here was “lowercase letter <x> made out of water and frogs.”

No other model I’ve tested has been up to this task. This seems to be a kind of emergent ability that I’d love to see recreated in something accessible to more people! (And shoutout to Irina Blok in Google Research who discovered it.) I tried the new DeepFloyd IF model (last newsletter) and the new Midjourney 5.1 with these types of prompts and got results like these:

None of the other models understood “lowercase” and most got confused by the letter shapes. You can see that Midjourney confuses the T, F, and E shapes. This happened with all similar letter forms (C and G, etc.). DeepFloyd likes to make cute cartoon shapes, which I admit made me smile, but... not the right feel for many projects. Midjourney tends to make “filled-in letter shapes,” a bit more like Adobe Firefly’s font generator, which I covered in issue #41. I certainly made a lot of “filled-in shapes” for the project too, but they are generally less interesting than the object-based letter design. I especially recommend my letters for the morning glories, the wet well bucket vine letters, the “cemetery” bones, the irises, “sing” made of songbirds, the fireflies, the willows. Among the filled-in letter forms, the “moon” one is very nice (check the apostrophe!), as is “earth”. “Dream,” illustrating the famous death poem by poetess Omē Shūshiki, has an AI joke in it.

These typographic art words are accompanied by MusicLM output, prompted to convey the period and mood of the poem and letters. I am fond of the dancey beat on “poppies” in the Amy Lowell poem. Prompts were along the lines of “a traditional flute playing a melancholy simple tune like a bird singing” or “a drumroll that sounds like thunder” or “percussion like rain” or “ambient moody piano music.” You can try MusicLM in the AI Test Kitchen app from Google now.

Chris Sugrue’s three.js UI framing and animations (including the easter eggs) do a lot of the work to carry the mood, too. She fought hard to keep the UI simple and uncluttered, starting from the 4x4 poem icon grid (all AI-generated visuals). The 3D scene for each poem is pretty subtle, allowing you to move around a bit and zoom in on the letters. There are particle systems at work on several of the poems, for rain, snow, fireflies, petals. She added little easter egg interactions hidden under each poem: try clicking to make flowers grow, pond bubbles, rain puddles, or turn off the lights. (As we waited on legal approval, more eggs got laid…)

Due to the proximity of Google I/O, we have only word-of-mouth PR; if you like the project, we’d love to see some shares! If you want to see more illustrated letters, my colleague Gael Hugo released a “guess the word” game at the same time. Nicolas Barradeau made a funny game with world monuments covered in plastic dough, and Caroline Buttet and Emmanuel Durgoni made a “which one is real, which is AI?” game using cultural asset images. If you like CYOA (choose-your-own-adventure) games, try my old-style AI-illustrated penguin travel mystery from last year, “Where’s Hopper?”

I hope the projects interest you and thanks for sticking with the long intro article!

Links below behind the paywall are a bit abbreviated for length, but some are bangers. This list includes a great spaCy release plus Jupyter news, a new storywriting model, a paper on story style, some fun 3D and Blender links (not even NeRF!), a talk by Allison Parrish, a game map, and some new games books. I hope you’ll consider becoming a paid supporter if you aren’t yet!