TITAA #60: Creative Gum and Rag

Creative RAG - Lost Poetry - Minecraft Gen - SAE Papers - Narrative Agents

TOC to another big issue (links on the web site):

Intro article — AI & Games NextLevel Conf, Tiny Notes

Games (Misc Links, Minecraft Gen Craze)

Narrative and Creativity Research (Lots o’ Links, Agentic Writing)

NLP, Data Science & Vis (subsections: SAEs & Embeddings, Vis)

AI & Games NextLevel 2024: Tiny Notes

Last weekend I sent myself to London for the one-day AI & Games Next Level event organized by Mike Cook at King’s College. I posted live on Bluesky during it (starting here). Here are some short remarks in light of this newsletter’s interest in creative AI and some of my own projects. Everyone who spoke was interesting; I’m eliding a lot for the sake of an already very long issue!

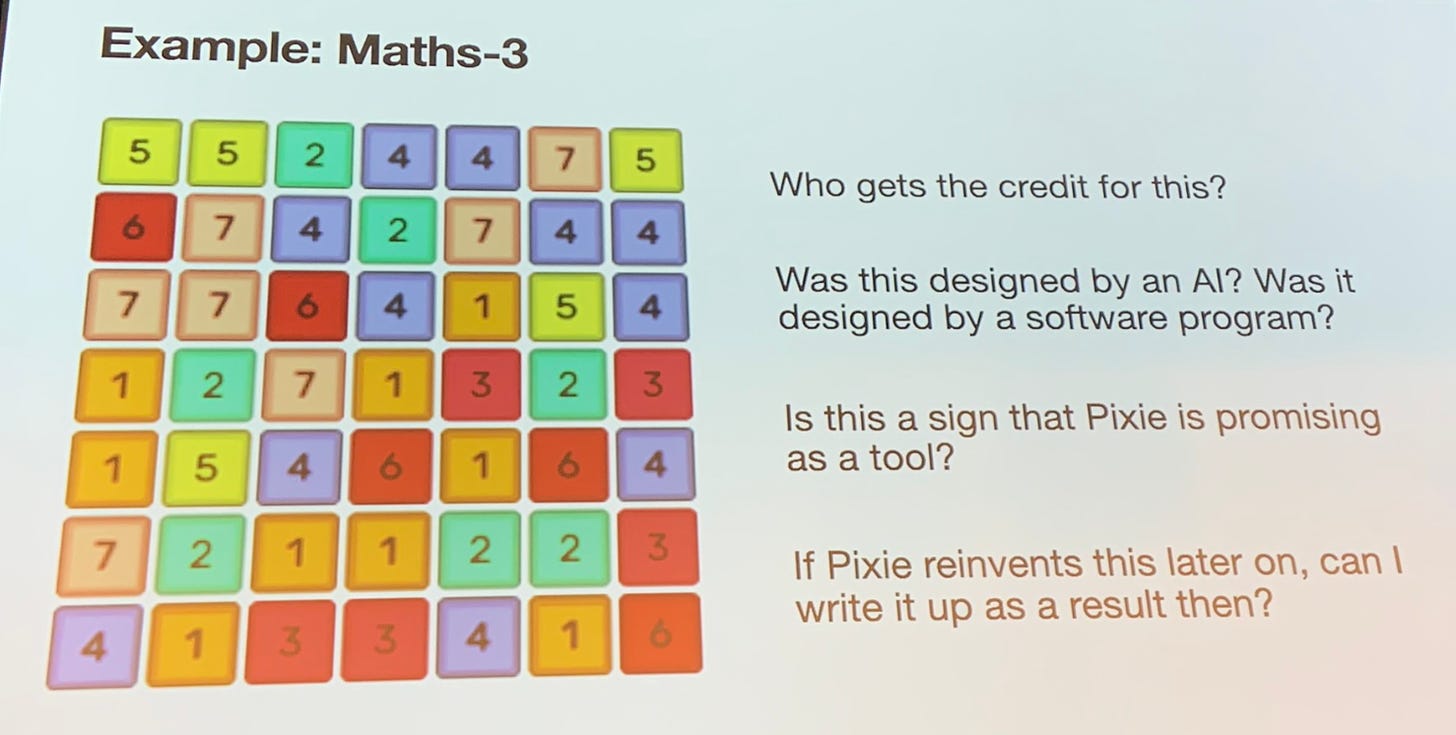

Mike Cook, whose articles I’ve shared before, discussed his research on game mechanic generation with his Pixie system. He noted that he laughed out loud at some of the ideas it came up with. This is almost a theorem to me now: if you made something that made something that made you laugh, you have made a Good Thing. Something, in any case: that gave you a wonderful surprise, confounding expectations? This delightful alien thinking is a way we learn about our own thinking patterns and implicit rule systems. He noted an authorial problem of “credit” in this context:

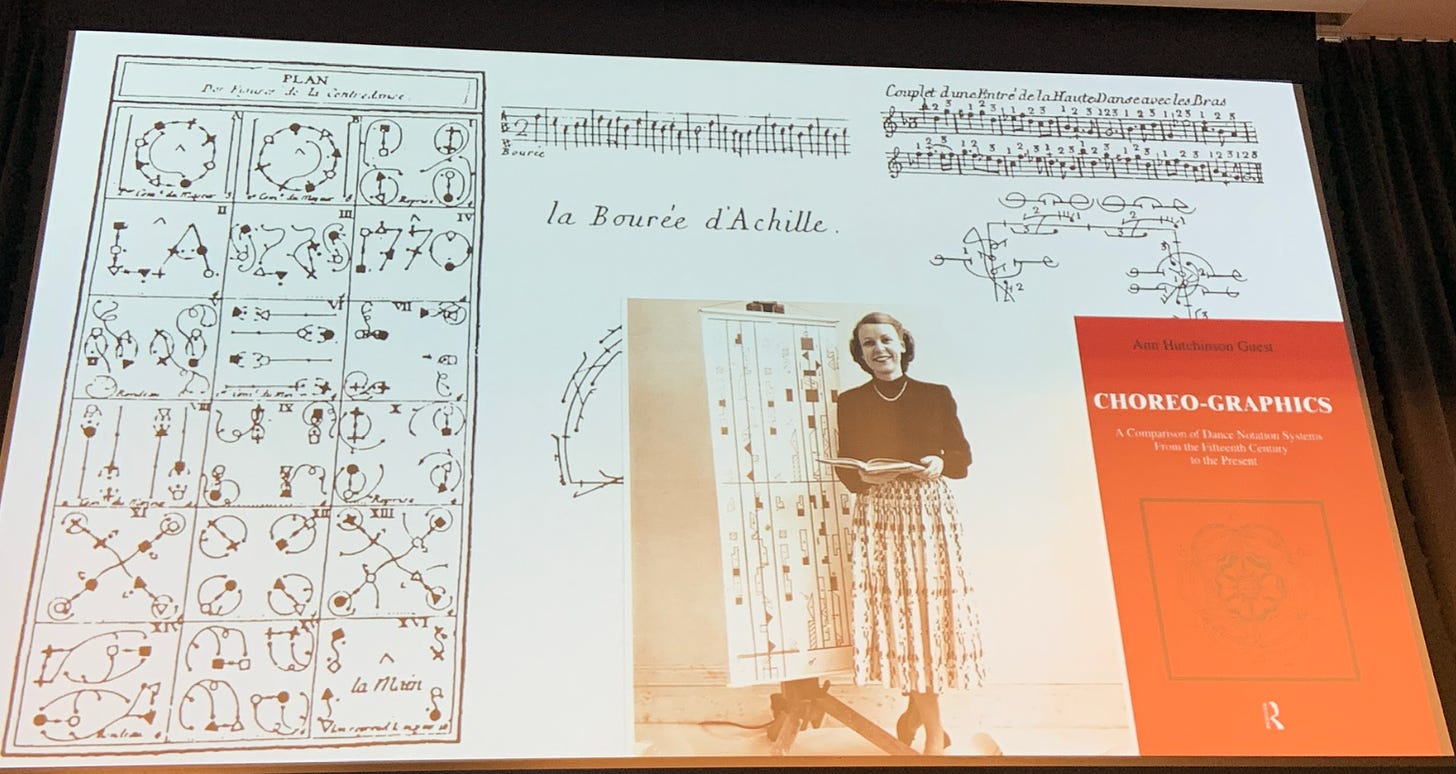

Kate Compton, aka GalaxyKate, of Tracery fame and more, gave a thought-provoking talk about ways to think about being creative with AI and procedural generation tools. She discussed ontologies as a way of structuring systems and domain specific notations as ways to talk about artistic goals — for instance, for dance or music performances:

And frameworks for writing:

She is interested in making better design tools, but also in helping people understand what they do when they work creatively. As many UX people have been saying, a text input to a language (or image gen) model isn’t an ideal interface for most deep craft work. What might be better? What are the units in which we want to work?

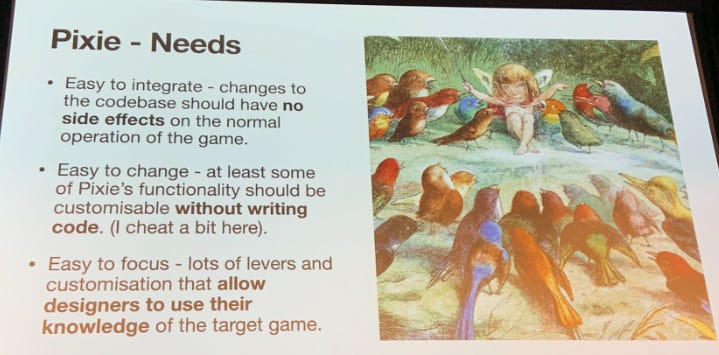

Mike’s Pixie design goals are closely related to this question:

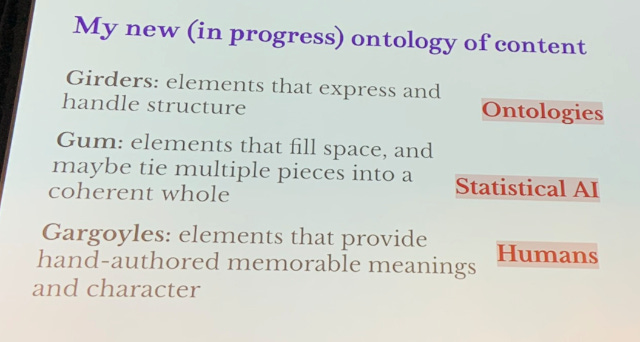

Kate suggests a framework: that we think in terms of combining rule systems or structural components with AI gen output, along with a human special touch — her 3Gs, “girders, gum, and gargoyles.”

She notes that LLMs are generally bad at generating fiction from a simple prompt (by most human measures of quality), but that given structure—girders—they do better. This is also the observation of many narrative AI research folks, who are using discourse structure components (plot, character, scene…) and levels of detail to weave together a better AI generated story. And it goes even better when humans are in the loop to add direction or curation. Sadly, narrative research folks often aren’t UX designers or tool builders. Nevertheless, I highly recommend the links to the latest research in my Narrative & Creativity section below — agentic systems are also becoming a Thing in this space, with multiple automated “writing experts” at work on different aspects of the fiction problem.

My own projects for the last few Nanogenmos (National Novel Generation Month, starting today 😃) have featured data sets and rules (often Tracery grammars) combined with gen AI “gum”: 4 years ago, my “Directions in Venice” used a GPT2 model trained on historical guides combined with Tracery walking directions (and flickr photos); and last year I used Google maps and location reviews in a game-like wrapper, rewritten by GPT4. (I wrote about it here.) My “gargoyle” component in the Google travel project was adding weird goals for each trip: ghost hunting, or industrial espionage.

I’m thinking of such systems as creative RAG: a bit like RAG (retrieval augmented generation) in which there is a background data set; we retrieve what we want from it, and then we create a new thing, in a generative context. Instead of generating a summary or a question answer, it’s a creative output: Creative RAG, or CRAG! Note I said “we,” not “it.”
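To make the retrieve-then-create loop concrete, here is a minimal sketch, not the code from any of the projects above. Everything in it is an assumption for illustration: the `GUIDE_SNIPPETS` data, the grammar, the hand-rolled `expand` function (a stand-in for a real Tracery library), the keyword-overlap `retrieve`, and the `gum` stub where a language model call would go.

```python
import random
import re

# Hypothetical background data set (stand-in for historical guides, reviews, etc.)
GUIDE_SNIPPETS = [
    "The bridge near the fish market is crowded at dawn.",
    "A narrow canal runs behind the church of the old square.",
    "The bell tower rings over the square every hour.",
]

# "Girders": a Tracery-style grammar. Symbols are written as #name#.
GRAMMAR = {
    "origin": ["Walk #direction# until you reach the #place#. #goal#"],
    "direction": ["north", "east", "along the water"],
    "place": ["bridge", "canal", "square"],
    # "Gargoyles": the weird goal attached to each trip
    "goal": ["Look for ghosts.", "Take notes for your employer."],
}


def expand(symbol, grammar, rng):
    """Pick one rule for `symbol` and recursively expand any #symbols# in it."""
    rule = rng.choice(grammar[symbol])
    return re.sub(r"#(\w+)#", lambda m: expand(m.group(1), grammar, rng), rule)


def retrieve(query, snippets, k=1):
    """Return the k snippets sharing the most words with the query (toy retrieval)."""
    query_words = set(re.findall(r"\w+", query.lower()))

    def overlap(snippet):
        return len(query_words & set(re.findall(r"\w+", snippet.lower())))

    return sorted(snippets, key=overlap, reverse=True)[:k]


def gum(directions, context):
    """Placeholder for the gen AI "gum": a real system would prompt a model
    with the directions and the retrieved context. Here we just join them."""
    return f"{directions} (Local colour: {' '.join(context)})"


def creative_rag(seed=0):
    rng = random.Random(seed)                        # seeded so runs are repeatable
    directions = expand("origin", GRAMMAR, rng)      # girders
    context = retrieve(directions, GUIDE_SNIPPETS)   # retrieval
    return gum(directions, context)                  # gum


if __name__ == "__main__":
    print(creative_rag(seed=1))
```

The levers here are the grammar, the retrieval scoring, and whatever prompt replaces the `gum` stub; in practice all three get changed repeatedly after looking at the output.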

My projects for Nanogenmo have all been spaghetti code at the end of the month — in part due to the difficulty of managing many levels of creative design in the “gum and girders” without good tooling for it. These types of little creativity systems are built iteratively: You need to move the levers a lot, and even add levers, after seeing what comes out. That’s also why it’s a “we” not just an “it” situation.

Mike Cook said at the end of his talk, “I don’t think the next step for creativity is a black box. I think we should teach people to build their own little AI systems to more deeply understand what they do.” We definitely need more domain specific tool building to enable this, with good UX, both for Kate’s “casual creators” (like me) and for professional creatives trying to use gen AI meaningfully.

Now the news!

Creative AI Models

Image Gen

I guess the big news of the last week is that the stealth image generation model “red panda” that was out-performing all others in the leaderboard (including Ideogram, Flux, and Midjourney), was unveiled as a model from upstart Recraft who are making “tools for designers.” It can do text rendering in images, styles, and even generate SVG.

I was pretty impressed by the SVG gen (Replicate link); it opened fine in Illustrator.

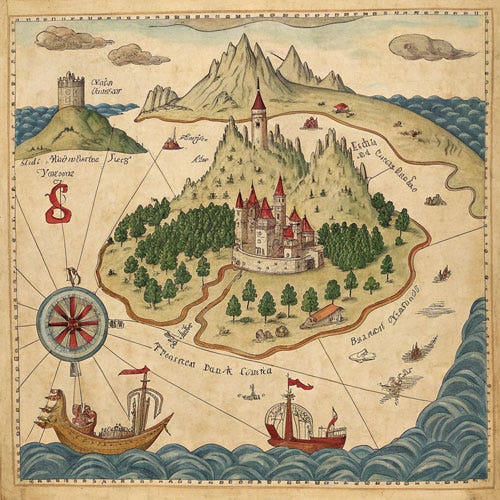

The non-SVG output for this prompt (model on Replicate) was also excellent, although geographically odd:

Compare Midjourney output options — less accurate to the prompt and arguably less cool, IMO.

It also did better at my usual attempt at a luxury space ship hit by a meteor with an explosion on impact (almost no model can interpret this prompt).

Blockade Labs just launched Blendbox, an Adobe-inspired image gen and editing tool that relies on layers, and a final real-time “composite” of the image components. It’s clever and intelligent.

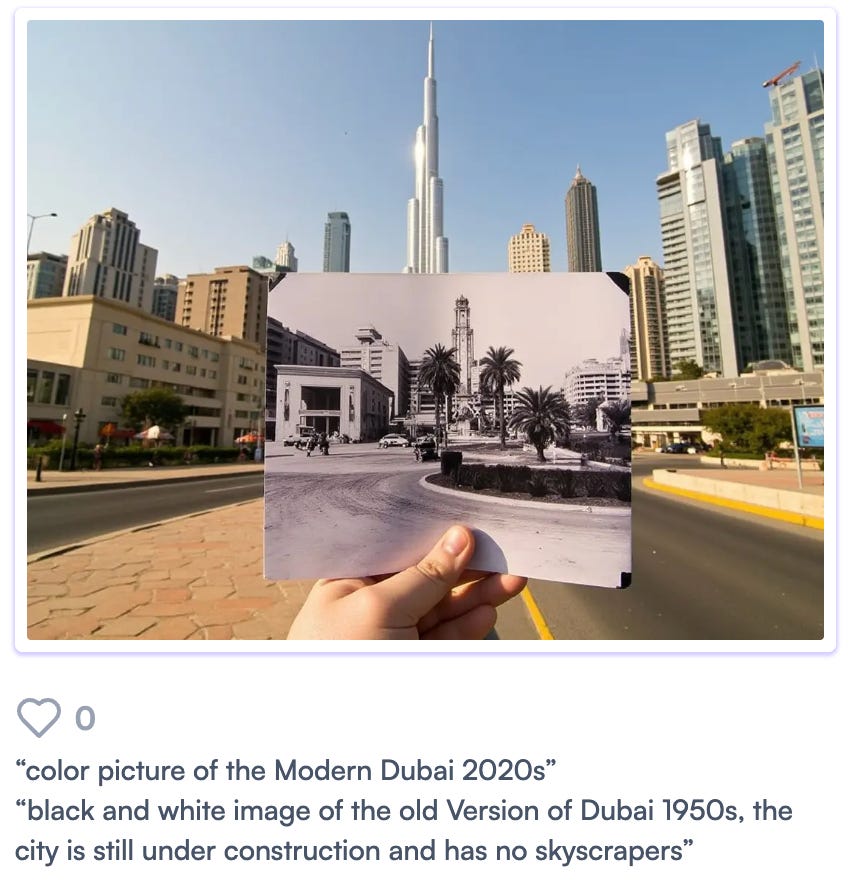

A good Lora on Glif — generate a “then and now” photo collage inset with 2 prompts:

Some 3D

OrangeSodahub/SceneCraft: [NeurIPS 2024] SceneCraft: Layout-Guided 3D Scene Generation — a cool generated rooms thing using 2D layout. (Via DreamingTulpa, I missed it last newsletter.)

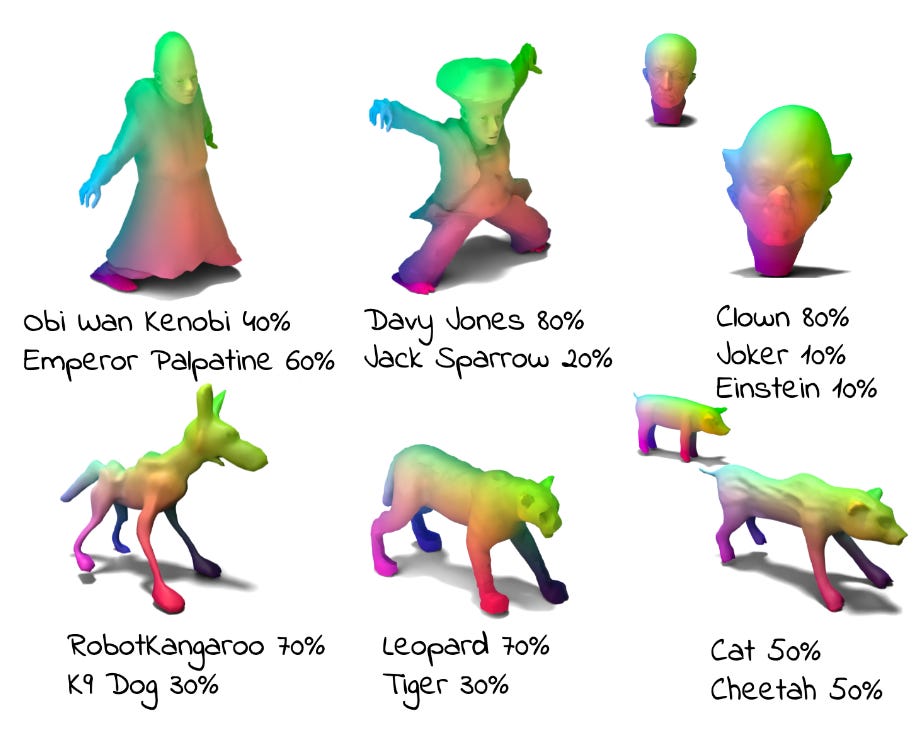

MeshUp: 3d deformation and concept mixing. This would normally be in my “weird” links for mid-month, but I am feeling low on AI links of interest to me right now (apart from the usual slew of model updates and comfyui wrappers etc). Also this was a candidate for illustrating the new Jeff VanderMeer (seen in Recs).

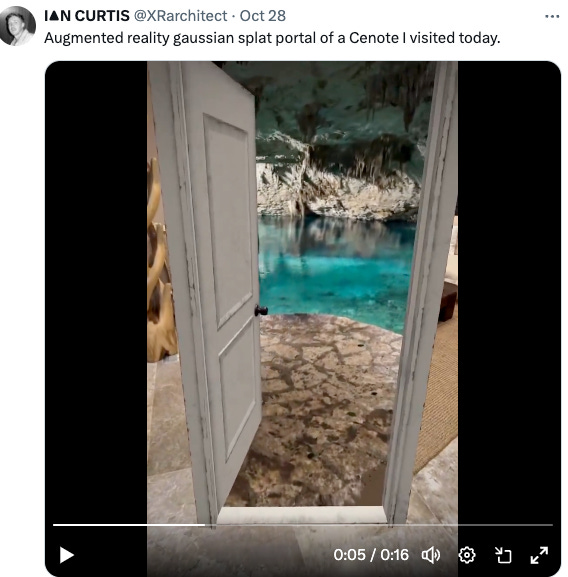

There’s been a ton of 3d gaussian splat awesomeness and a new file format to reduce size dramatically — but I just have to get this out the door. Email me if you want. (Ok, just this one:)

Misc

"This AI painter has sold $4 million in artwork. Now Sotheby’s wants a piece of the action" (FastCompany):

Botto is a “decentralized autonomous artist” that uses AI to create thousands of original digital artworks based on its own ideas, without needing a human prompt. It is managed by a 15,000-member community called BottoDAO, who vote on which works should be minted as NFTs on a weekly basis. These people—and their voting power—help shape the taste and perspective of Botto, who otherwise has full decision-making power for what it creates.

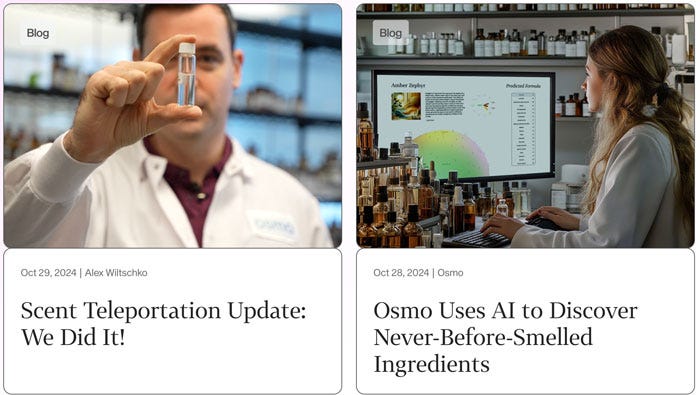

An AI perfume company, Osmo — achieving successes. (Spun out of Alphabet, I believe.)

facebookresearch/sam2: “The repository provides code for running inference with the Meta Segment Anything Model 2 (SAM 2), links for downloading the trained model checkpoints, and example notebooks that show how to use the model.”

Research: How Many Van Goghs Does It Take to Van Gogh? Finding the Imitation Threshold.

Video: There were lots of fun generated video tests and news/info in my mid-month newsletter, so not doing any video news here now.

Procgen / Web / Fun

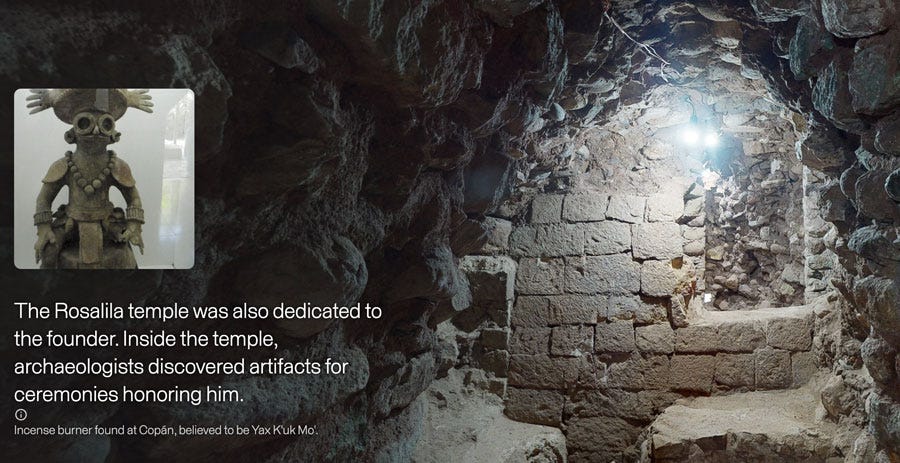

A 3D tour of the Mayan Temples at Copan (blog post), from Mused, who do 3d heritage site work. “Beneath the ancient stone temples and monumental stairway, there’s a hidden world—an extensive network of tunnels offers a glimpse into the city’s earliest history. The deeper and further into the temples you go, the older the surroundings, back to the founder in the 400s CE, 1,600 years ago.” A pretty interesting writeup, they battled huge spiders, 100% humidity, and a tunnel collapse during the 3d scanning. The stories and scans are here. It’s like scrollytelling Streetview inside monuments, what could be cooler (ok, VR).

Mathematical art by Hamid Naderi Yeganeh (IG link). It’s unbelievable.

"Bioart" - public domain bio / science art icons and images. One set:

⭐️ On Crafting Painterly Shaders - Maxime Heckel's Blog. Amazing post with embedded interactives and code, must-read for shader fans. (See also this guy Simon’s shaders and game courses.)

Artfully Arranged Junkyard Objects — via Kottke. Reminiscent of Jenny Odell’s satellite (and junkyard) work, there is a process video up by artist Cássio Vasconcellos.

Not awesome, but Lillian Schwartz passed away. "LILLIAN SCHWARTZ (1927–2024)" - a pioneer of generative computer art. Here’s a pdf of an oral history of her by the Computer Museum.

Project Neo (Beta) from Adobe 3D & Immersive Labs — the cool new stuff from Adobe Max was indeed very cool, but I still need to play with this!

Games News Links

An interesting old game archiving project, the Oujevipo Archive, with info on how to run them (h/t Tom Granger).

Roguelike Con’s talks are appearing on the YouTube channel!

A Thinky Games search engine now! Browse games.

Bitmagic, a 3d gen game environment tool/company, one of the Lightspeed “Game Changers” winners this year.

"Special Issue on Large Language Models and Games | IEEE Transactions on Games" - call for papers, December 1st, 2024.

The ACL Wordplay Workshop, “When Language Meets Games,” which I’ve probably mentioned here before, but anyway have it again. One of the papers is below in the Narrative Research section.

"10 design lessons learned from 30 years of horror games" in GameDeveloper, which did a lot on horror games these past few weeks. This points to a lot of good GDC talks/videos. Also has amusing counter-intuitive points, like “Disempower the player.”

"How to Build a Platformer with AI - Full Tutorial" - a Rosebud games post. I remain charmed by them. They also just released a new 3D gen template.

Minecraft Gen

The overnight news is the open-sourcing of a tech demo showing minecraft-like game gen on demand, Oasis. (Here’s a blog post too.) You can try to play in the browser but there is a queue to join. The quality is poor and there is no world model: if you turn around, the world changes entirely. You do not fall into the pits in the ground, you just keep floating over them. (Cocktail Peanut on X notes: “Instead of just moving forward, try keep rotating. You'll notice that your environment keeps changing, basically unplayable as a game. This is what I mean by "The Statement 'Every pixel will be generated, not rendered' is JUST A MEME".”) In any case, as with the Counter Strike real-time “sim” and the Google GameNGen paper, these worlds won’t replace authored, reliable, real-time game worlds any time soon, despite being tech feats of wonder.

Minecraft driven by AI has become a real thing recently — lots of people testing different models to build structures. Jack Clark on Import AI summarizes (links all to X, I could not find a good article otherwise):

Here's an eval where people ask AI systems to build something that encapsulates their personality; LLaMa 405b constructs "a massive fire pit with diamond walls. This is the only model that didn't just do a generic blob mixture of blocks".

Here's an experiment where people compared the mannerisms of Claude 3.5 Sonnet and Opus by seeing how they'd follow instructions in a Minecraft server: "Opus was a harmless goofball who often forgot to do anything in the game because of getting carried away roleplaying in chat," repligate (Janus) writes. "Sonnet, on the other hand, had no chill. The moment it was given a goal, it was locked in."

Here's someone getting Sonnet 3.5 to build them a mansion, noting the complexity of it almost crashed their PC.

Here's a compare and contrast on the creativity with which Claude 3.5 Sonnet and GPT-4o go about constructing a building in Minecraft. "Same prompt. Same everything," the author writes. "Minecraft evals are now real".

And a tool to try it out (apart from Mindcraft itself): Orchestrator to spin up MC server, run Mindcraft agent, save building and run eval.

Narrative / Creativity Research

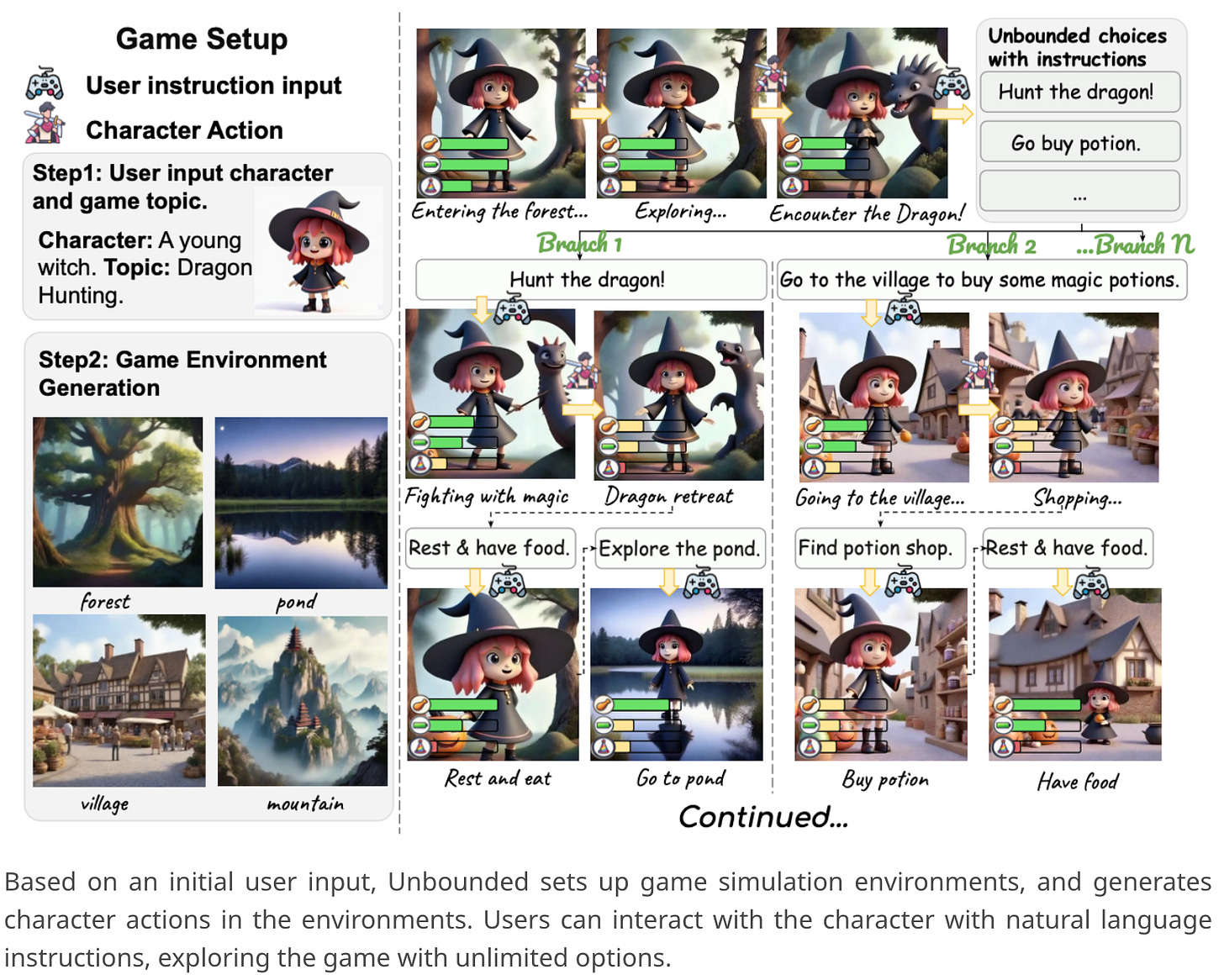

“Unbounded: A Generative Infinite Game of Character Life Simulation” from Google DeepMind (no code). Paper was just updated with more citations of relevant past work and discussion. This seems to be part of a DeepMind agenda to create game world simulations that we’ve seen mentioned on Xitter (by Demis and Nathan Ruiz). Among the main contributions in this work are environment and character consistency (also related to many comics gen approaches I’ve cited in this newsletter).

“We present: (1) a specialized, distilled large language model (LLM) that dynamically generates game mechanics, narratives, and character interactions in real-time, and (2) a new dynamic regional image prompt Adapter (IP-Adapter) for vision models that ensures consistent yet flexible visual generation of a character across multiple environments.”

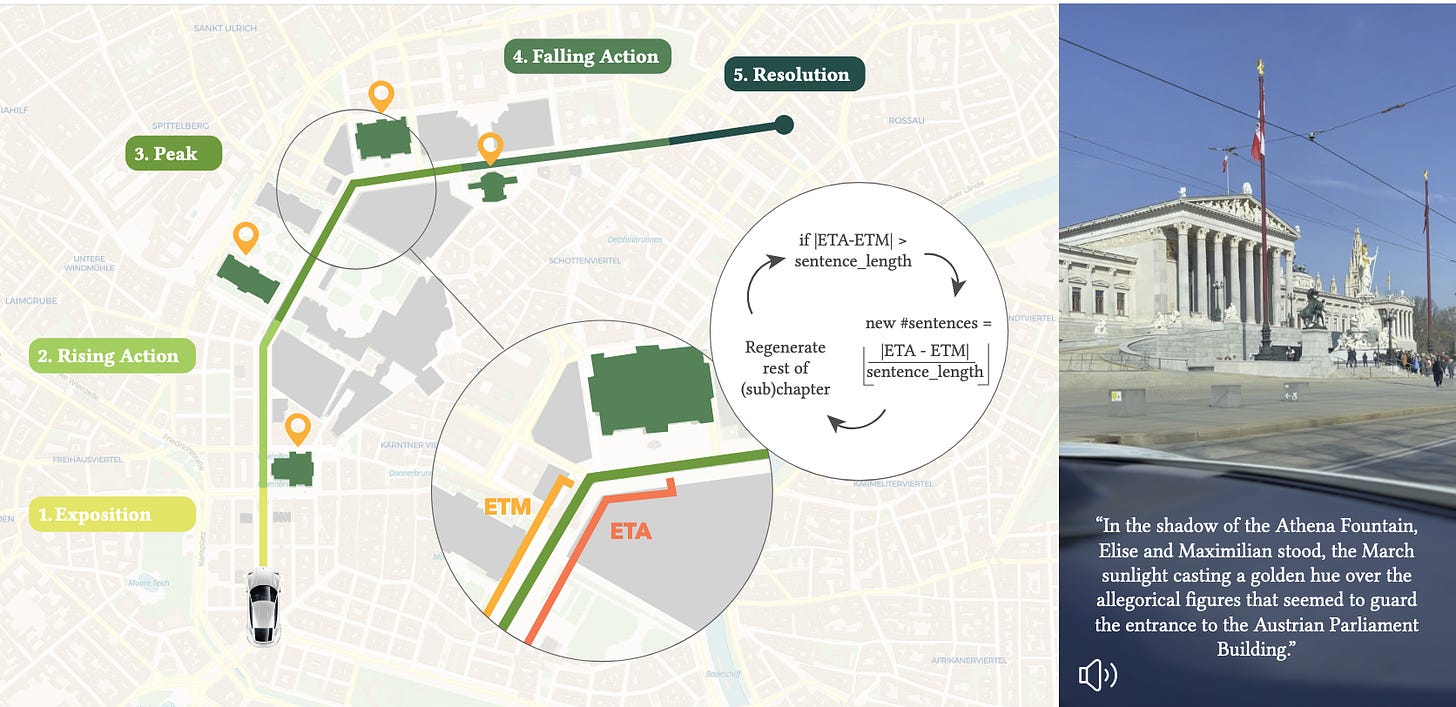

🚗 Story-Driven: Real-time Context-Synchronized Storytelling in Mobile Environments — or, synchronized story telling with driving environment! This is a great idea. I did not quite expect to see it using a narrative arc, I thought more environmental cues, but hey why not.

"SpecialGuestX" - via WebCurios mailing list. A lovely physical object manipulation for story making tool.

“DAGGER: Data Augmentation for Generative Gaming in Enriched Realms” — a paper at the Wordplay workshop linked in Games above; this is a “synthetically generated dataset for creating text adventure games from fiction and for generating prose from game states.” Shades of girders. They released two models: narrative-to-dagger, and dagger-to-narrative.

"Creativity in AI: Progresses and Challenges" - a recent look, a meta study. Finds that “[models] struggle with tasks that require creative problem-solving, abstract thinking and compositionality and their generations suffer from a lack of diversity, originality, long-range incoherence and hallucinations.” But some good review of issues including copyright and approaches informed by psychology. Also see Application of AI in Literature: A Study on Evolution of Stories and Novels.

"Does ChatGPT Have a Poetic Style?" - yes but it’s doggerel. My take. Anyway, also lol: “Our results show that GPT poetry is much more constrained and uniform than human poetry, showing a strong penchant for rhyme, quatrains (4-line stanzas), iambic meter, first-person plural perspectives (we, us, our), and specific vocabulary like "heart," "embrace," "echo," and "whisper.""

⭐️ Lost Poetry: Max Kreminski on computational poetry as “lost poetry” in contrast to “found poems.” Thought provoking, and even poetic. They also invoke Allison Parrish’s notion of “semantic space probes” going where humans wouldn’t ordinarily go. Good stuff!

I argue that many computational generators of poetry function essentially as poetry losers: machines whose central purpose is to arrange units of language, without fully understanding them, in combinations that can later be found to be poetry. …

Found poetry is poetry produced by an agent or process that did not intend to generate poetry. It consists of language generated toward some other end, creatively reframed as poetry by a later recipient. Lost poetry, then, is language with poetic potential, not originally intended by its creator for poetic interpretation, that may or may not later be found to be poetry.

BookWorm: A Dataset of Character Description and Analysis. “In this study, we explore the understanding of characters in full-length books, which contain complex narratives and numerous interacting characters. We define two tasks: character description, which generates a brief factual profile, and character analysis, which offers an in-depth interpretation, including character development, personality, and social context. We introduce the BookWorm dataset, pairing books from the Gutenberg Project with human-written descriptions and analyses.” There are also tests on character description retrieval with book length context. Nice!

BERTtime Stories: Investigating the Role of Story Data in Language pre-training. Using the TinyStories dataset, working on very small models: “We find that, even with access to less than 100M words, the models are able to generate high-quality, original completions to a given story, and acquire substantial linguistic knowledge.”

Agentic Writing

Collective Critics for Creative Story Generation. This is an agentic approach: “… a group of LLM critics and one leader collaborate to incrementally refine drafts of plan and story throughout multiple rounds. Extensive human evaluation shows that the CritiCS can significantly enhance story creativity and reader engagement, while also maintaining narrative coherence. Furthermore, the design of the framework allows active participation from human writers in any role within the critique process, enabling interactive human-machine collaboration in story writing.”

And another one plus dataset: Agents Room. “We propose Agents' Room, a generation framework inspired by narrative theory, that decomposes narrative writing into subtasks tackled by specialized agents. To illustrate our method, we introduce Tell Me A Story, a high-quality dataset of complex writing prompts and human-written stories, and a novel evaluation framework designed specifically for assessing long narratives.”

CS 222: AI Agents and Simulations: A course plan from the famous Stanford (character sims) agents dude Joon Park. Sims fans take note.

NLP & Data Science & Data Vis

Github’s announcements of adding new coding models (notably Claude and Gemini Pro) were great news for Copilot fans who haven’t switched to Cursor yet 😛 (well I did), and their Spark UI dev tool sounds very promising. Also note the cool “Learning Sandbox” via Amelia Wattenberger. Nice!

🎤️ The (in)famous Notebook Llama release from Meta to counter the non-free NotebookLM from Google. And a new company offering podcast from documents: "Lettercast.ai - Turn your content into audible experiences.”

🧮 700 pages of Algorithms for Decision Making ebook from 2022.

Anthropic’s course files on using its LLMs, including evaluation and tool use (“agents”).

mangiucugna/json_repair: A python module to repair invalid JSON, commonly used to parse the output of LLMs - h/t Rohan Paul.

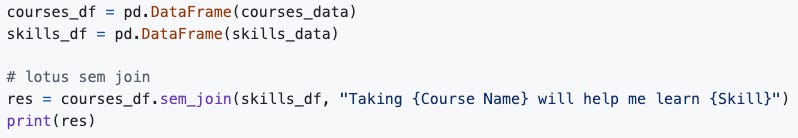

TAG-Research/lotus: LOTUS: The semantic query engine - process data with LLMs as easily as writing pandas code: I mean, what? Fascinating (LLM glue).

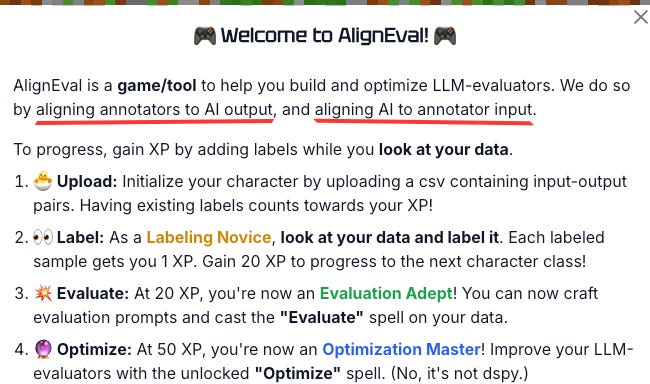

Align Eval: a game for labeling and alignment teaching. Cute.

SAEs & Embeddings

Literally do not have time to go into these, but for the 4 of you who are big fans of Sparse Autoencoders / embedding models like me:

"Automatically Interpreting Millions of Features in Large Language Models"

"MEXMA: Token-level objectives improve sentence representations"

Contextual Document Embeddings tool code from Jack Morris (for their small SOTA model here) — includes clustering tooling:

clustering large datasets and caching the clusters

packing clusters and sampling from them, even in distributed settings

on-the-fly filter for clusters based on a pretrained model

An interesting “embedding space vs color space” code notebook by someone who didn’t sign it, so.

Data Vis

Nomic is offering a preview of their new Data Stories — scrollytelling embeddable vis stories using their UMAPs. They have also added Leland McInnes as an advisor, which is a good idea. The hand of Ben Schmidt can be seen in the data stories implementation. 🤩 It will do zooming in on the big UMAP point display as wanted, plus toggle different states/display settings.

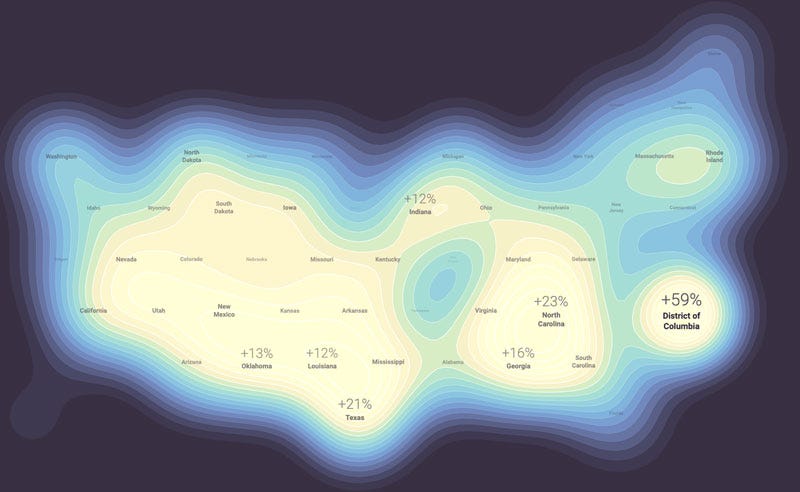

How We Made Waves of Interest — an observable data vis tutorial/writeup by Fil about the project with Google News and Moritz Stefaner. (Via Andy Kirk’s newsletter.) Fancy heat maps of search interest in election topics:

Book Recs

In the separate mailing for supporters, I had the TV recs too and much more detail here. I’m also leaving out the slightly more “meh” books from this list.

Oh this is news I forgot to report (h/t Pippa Brooks): "Universal International Studios Buys Matt Dinniman’s ‘Dungeon Crawler Carl’ With Seth MacFarlane’s Fuzzy Door & Chris Yost Attached". I love the books, but can’t imagine these in a non-animated form. Wow.

⭐️ The Cautious Traveller’s Guide to the Wastelands by Sarah Brooks (fantasy). This reminded me of the Southern Reach series (see below), but merged with Snowpiercer. I loved it. A train crosses a Siberian wasteland that is altered, biologically weird, similar to Area X but maybe also a faeryland.

❄️ Arkhangelsk by Elizabeth Bonesteel (sf). This was a lovely read, well-written and a bit melancholy, while still being lively SF. A colony on an ice planet, with a system of deliberate eugenics, combats external splinter group forays and confronts an unexpected space ship arrival.

🚀 Trading in Danger (Vatta’s War Book 1), Elizabeth Moon (sf). A top performing military academy student is kicked out for poor judgment, and her trader family send her to space with a ship that needs repairs.

🐇 Absolution by Jeff VanderMeer (sf/f/horror). The new 4th book of the 3 book Southern Reach trilogy 😛. Welp, for this to count as any explanation, you have to remember the characters in the previous books, which I did not.

A Poem

My neighbor’s daughter has created a city you cannot see on an island to which you cannot swim ruled by a noble princess and her athletic consort all the buildings are glass so that lies are impossible beneath the city they have buried certain words which can never be spoken again chiefly the word divorce which is eaten by maggots when it rains you hear chimes rabbits race through its suburbs the name of the city is one you can almost pronounce

As usual, if you got to this point, I adore you. I hope we all survive this election intact and sane.

Lynn (@arnicas on xitter which is increasingly disgusting, mastodon, bluesky and Threads, but mostly posting on Bluesky)
