TITAA #43: AI Agents Mispronouncing Stuff

Krakens on Rails - Agents Arguing - Silos - Multimodal DFs - Zelda Love

I recently played through The Kraken Wakes, the AI/LLM augmented game adapted from the John Wyndham novel. I also read the book after playing. This intro bit is a review of the game and how the tech is used in it. There’s more on the book in the book recs section. There are some plot spoilers here, but only in outline form.

The gist of the story is that a bunch of meteors land in the oceans, clustered around “the deeps.” Ships start disappearing. Then something attacks islands. There is a sea-water level rise soon after. All of this is experienced and synthesized by reporters for the “EBC Post” in London and Cornwall. Wyndham has been called a “cosy catastrophe” writer and there’s even a Rose Cottage here!

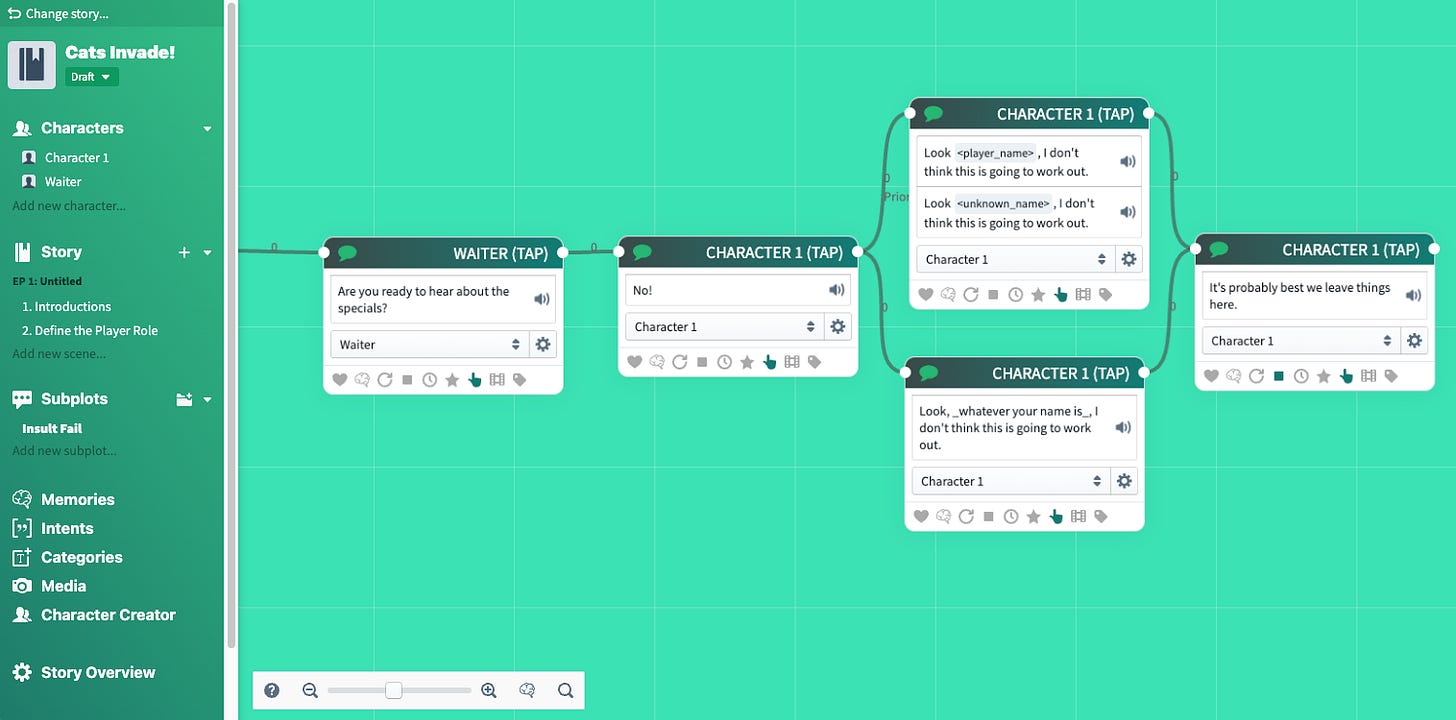

The game version serves as a tech demo of using Charisma.ai’s tools for authoring branching dialogue and game state trees with support from (but not replacement by) GPT responses. Their web browser editing tool kind of looks like a beefed up Twine, with memory, dialogue intents, character emotions, etc:

The tool can be used as an SDK for licensing in Unreal, Unity, etc. Kraken is an authored game, but the LLM is used as fallback for non-matching dialogue from the player. A recent blog post has some details on how the dialogue works with authoring plus fallback. This section on analytics after launch is especially interesting:

There’s an article up on their blog by Rianna Dearden about adapting the book. I’m struck by how very different the game feels compared to the book. But the book is composed of a lot of second hand reporting, in fact, so as is it wouldn’t make a very immersive game. In order to create more immediate action, new scenes and characters have been created, plus mini-game interactions (loading sandbags, press conferences, etc). While the book has a lot of reflective dialogue as Phyllis and Mike read the papers and listen to people in pubs, the game turns this active by making you a reporter working with them. You have to propose headlines and call people for quotes. There is a lot of text to “talk about” with your editor and colleagues.

Supporting this, there are a bunch of memory mechanisms on display, and a log book for your own “memory” to keep you on the rails. At several points you are asked to enter language recorded from an interview or conversation in order to progress. Here’s a screen shot of the UI — a 3D world, characters speaking to you, and you have a prompt at which to type (and the thing you’re responding to is repeated just over your prompt in case you need it):

My critiques of the game run from nitpicks to more substantive. Principally, I didn’t like the generated voice prosody (they used Replica, also used in the recent NVIDIA demo, of which more below). There were some annoying tics and mispronunciations, too. I had my caps lock on accidentally when I entered my character name, and I was called “EL WHY EN EN” through the entire game. Sometimes the wrong stress was used on words like “record” (noun vs. verb).
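The caps-lock problem suggests the speech engine reads an all-caps token letter by letter. That is a guess from the behavior, not anything documented about Replica or the game. Under that assumption, a small pre-processing step on the name field would sidestep it. The helper name `normalize_name_for_tts` is hypothetical:

```python
def normalize_name_for_tts(name: str) -> str:
    """Title-case an all-caps name before it is sent to a speech engine.

    Assumption (not from any vendor docs): the engine spells out
    all-caps tokens as individual letters.
    """
    stripped = name.strip()
    # Only touch names typed entirely in capitals; leave mixed case alone.
    if len(stripped) > 1 and stripped.isupper():
        return stripped.title()
    return stripped


print(normalize_name_for_tts("LYNN"))
print(normalize_name_for_tts("McCurdy"))
```

This is only safe on a field known to hold a personal name: applied to general dialogue it would also turn real acronyms like "EBC" into "Ebc". It does nothing for the stress problem on words like "record", which needs part-of-speech information rather than string cleanup.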

The 3D environment game mechanics were occasionally buggy: Sometimes interactions with an object failed. Once I was outside the office while an entire scene occurred with me unable to get into the room for some reason. These kinds of scenarios really display how “on the rails” the dialogue engine is. I was saying, “let me in, let me in” and the game dialogue just ignored it or offered sarcastic dismissals (of course; and very Wyndhamy).

Apropos some recent critique of chat interfaces: typing full strings is an inefficient way to navigate when it makes no difference to the game system. But I also feel the same way about dialogue menu options (e.g., Ink-style): if all the options funnel you down one path, why offer options? It’s simulated agency, which isn’t fun.

I did feel more supported than I do in parser-based text games, where often I have no trust in the author and hate the guessing game. I had more trust in open response handling. The game wants quite specific things in a certain order. For instance, you aren’t allowed to combine two speech actions in one line: “Hi, I’m LYNN from EBC, do you have any sandbags?” is not ok, you need to introduce yourself as one utterance and then ask for the sandbags. Dialogue feedback did get me on their tracks, though.
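To make the "authored dialogue with LLM fallback" idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the intent names, the keyword matching, the replies, and the `llm_fallback` stub are hypothetical and are not Charisma.ai's actual API or the game's real logic.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Intent:
    name: str             # hypothetical label for an authored dialogue branch
    keywords: frozenset   # words that trigger this branch
    reply: str            # the authored line the character speaks


# Hypothetical authored content for one scene.
AUTHORED_INTENTS = [
    Intent("introduce", frozenset({"i'm", "name"}), "Pleased to meet you. What do you need?"),
    Intent("ask_sandbags", frozenset({"sandbags", "sandbag"}), "We have a few left by the door."),
]


def tokenize(utterance: str) -> set:
    """Lowercase, normalize curly apostrophes, strip basic punctuation, split on spaces."""
    cleaned = utterance.lower().replace("’", "'")
    for mark in ",.?!":
        cleaned = cleaned.replace(mark, " ")
    return set(cleaned.split())


def match_intent(utterance: str, intents: List[Intent] = AUTHORED_INTENTS) -> Optional[Intent]:
    """Return the first authored intent whose keywords appear in the utterance."""
    words = tokenize(utterance)
    for intent in intents:
        if words & intent.keywords:
            return intent
    return None


def llm_fallback(utterance: str) -> str:
    """Stand-in for a language model call; a real game would query a model here."""
    return "Sorry, I didn't quite follow that."


def respond(
    utterance: str,
    fallback: Callable[[str], str] = llm_fallback,
    unmatched_log: Optional[List[str]] = None,
) -> Tuple[str, str]:
    """Prefer authored content; fall back to the model and log what was missed."""
    intent = match_intent(utterance)
    if intent is not None:
        return intent.name, intent.reply
    if unmatched_log is not None:
        unmatched_log.append(utterance)  # raw material for post-launch analytics
    return "fallback", fallback(utterance)


log: List[str] = []
print(respond("Do you have any sandbags?"))
print(respond("let me in, let me in", unmatched_log=log))
```

In this toy version, a combined line like "Hi, I'm LYNN from EBC, do you have any sandbags?" only triggers the first matching intent, which is one plausible reason a system built this way would want one speech action per utterance. The unmatched log is the kind of data a team could review after launch to decide which player inputs deserve authored branches.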

Hopefully the team is learning a lot from the logs, as they suggest, and from the writing process. I didn’t dislike it, and quite liked some of the atmosphere and added characters and writing. Pity about the speech synthesis, the weakest bit of the AI use, which the Kotaku NVIDIA NPC article also derided.

Anyway, note to self, play Dredge next, and note to everyone, read The Mountain in the Sea if you like AI and tentacle intelligences, it’s fantastic. For another example of a game-ified book (which I admit I haven’t played yet), there’s Nerial’s game adaptation of Orwell’s Animal Farm; here’s an article about it, and a talk by Emily Short with lots of details about process and method.

Onto the news-fest! I’m more exhausted than usual by keeping up (tool and Twitter death? too much?) so I’m focusing more narrowly on things I’m into and on code-available things.

TOC (will be links on the Substack site):

Games News and Furor

Narrative Research (and one dude with 97 AI-books)

AI Art Tool News

Beta of Photoshop’s Generative Fill is out: in- and outpainting, with a model not as good as Midjourney’s (especially for people and hands), but what is? It’s still useful and fun. Here’s a thread by Kody Young on Twitter trying outpainting on famous artworks.

Blockade Labs released their sketching input interface to skybox generation; it’s a bit tricky? and doesn’t make up for a lack of understanding of scene-composition text, IMO. They are also offering download of depth maps with the results, which is great (option on lower right corner of the gif). This is an example of trying to draw a yellow brick road in the woods: you can see it generated multiple roads, and not yellow; the green is what I drew.

Sketching video frames: uses sketch input via ControlNet to control multi-frame generation for video. By Vignesh Rajmohan (with code/colab demo). Cute. Video Doodles is cute too, but no code yet (Emilie Yu et al). If you know the birds-with-arms things (LeopARTnik on IG) you will love this.

A Photographer and the “Alien Logic of AI” in the New Yorker. Charlie Engman using Midjourney. I love the leaning in to the surreal glitches.

TikTok Creators Use AI to ReWrite History (article). Alternate history fiction from ChatGPT and Midjourney by @what.if_ai.

ThreeStudio - code/colab for a unified 3D object generation framework, combining research code from DreamFusion, Magic3D, SJC, Latent-NeRF and Fantasia3D. There’s been a bunch of new 3D gen stuff recently, most without code, and it’s exhausting… I’ll post more when there’s code.

🎥 Ok, just this one video generation link: In my mid-month newsletter about my Google Arts & Culture AI illustrated haikus (my excellent project is here), I mentioned that Google’s internal Phenaki does multi-scene videos. This paper is the first to show a similar-looking capability coming from other research: Gen-L-Video, code coming!

GlyphControl: One mention of an unpublished new generation model. This new text rendering model looks much better than DeepFloyd (I was told “API access” is coming, if not model? and they give an empty repo link).

Procgen, 3D, Other

Rooms.xyz, a startup for building cute 3d isometric models that are interactive. Co-created by Bruno Oliveira, a lovely former boss. You can remix the rooms and make little games!

Procedural Chinese Landscape Painting in Geometry Nodes Blender 3D, a post (after cool Twitter videos) by Shahriar Shahrabi. He worked on Puzzling Places, a VR 3D historic sites puzzle game on Quest that I really enjoy. This work is accidentally related (but in 3D) to LingDong’s generative Chinese art (git repo). (H/T Alex Champandard.)

Some links in this post on using those new Google 3D map tiles in Three.js. It’s not straightforward and the caching part looks unpleasant.

Taper #10, low-fi text and visual poetry from Nick Montfort’s Bad Quarto Press. I thought Allison Parrish’s was especially sarcastic in a good way.

Here’s Kate Compton’s classic post on procedural generation and perceptual differences, aka, the 10000 bowls of oatmeal reference that Allison is using.

Relevant: Making Martian faces procedurally for a Playdate game, Mars After Midnight. (h/t Tom Granger). I love these. (More here.)

“Practical PCG Through Large Language Models” — 2D level design with constraints by Nasir and Togelius.

Agent Sims

Two cool Minecraft agent papers in a couple days:

MineDojo Voyager (code), a Minecraft agent that explores and learns better than any other Minecraft agent to date (except, stay tuned, the one below?). It’s a bit of work to set up. More code vacations, please.

Ghost in the Minecraft (GITM). This is efficient: “GITM does not need any GPUs and can be trained in 2 days using only a single CPU node with 32 CPU cores.” It also achieves the diamond task (67% of the time?) and a bunch of others. Code.

Other interesting agenty papers all in a bunch this week… it’s only heating up.

GPT agents bargaining (code). Watch GPT-4 vs. Claude argue about prices and features in this research from Yao Fu (Edinburgh). There’s also this one, “Strategic Reasoning with Language Models” by Gandhi et al with no code.

“Mindstorms,” Zhuge et al. (No code.) A philosophical experiment as well: they investigate what “natural language societies of minds” (NLSOMs) might accomplish. “To demonstrate the power of NLSOMs, we assemble and experiment with several of them (having up to 129 members), leveraging mindstorms in them to solve some practical AI tasks: visual question answering, image captioning, ….” These NLSOMs might have humans in them too.

“Training Socially Aligned Language Models in Simulated Human Society” by Liu et al. (code) “This work presents a novel training paradigm that permits LMs to learn from simulated social interactions.” There is a code repo here. “We directly train on the recorded interaction data in simulated social games. We find high-quality data + [a] reliable algorithm is the secret recipe for stable alignment learning.”

Game News & Furor

A few days ago, an NVIDIA demo made game dev Twitter irritated: see terror headline Nvidia's New AI Is Coming For Absolutely Every Gaming Job in Kotaku; and here’s the relevant demo clip on Twitter. The speech synthesis is Replica again, as in Kraken Wakes above. Via Liz England, here’s another video of an older but recent attempt to use live AI tools with an NPC selling hotdogs, which shows lag and the same bad intonation/pronunciation from Replica.

Earlier this month, there was a more balanced take in the Guardian, based on the “Stanford agents paper” that no one knows how to refer to: “No more ‘I took an arrow to the knee’: could AI write super-intelligent video game characters?” (By Will McCurdy.) Pull quote:

Artem Koblov, creative director at indie developer Perelesoq Studios, has been actively trying to incorporate AI into his own company’s development process for some time, but wasn’t pleased with the results. “If an AI can predict your game’s script, then your game’s script is not good enough,” he says. “The text always somehow feels shallow. There’s no depth, no subtext, no nuances and insight. It seems to all be in place, but there is no soul in it … Writers put their soul into even small descriptive text, or ‘flavour text’,” he says, referring to the in-game item descriptions and books that add richness to virtual fantasy worlds. “These phrases can make the player unwittingly smile, and improve the overall impression and atmosphere of the game.”

As a followup, there was a Twitter thread asking for examples of good game writing yesterday, read here (thanks Cat Manning). My game recs often cover games with good writing, and some I’d recommend include Kentucky Route Zero, Pentiment, What Remains of Edith Finch, Citizen Sleeper, NORCO, Heaven’s Vault, Call of the Sea. I always notice the writing. But I also think world design is writing, and man, Zelda!

Zelda: Tears of the Kingdom was the big release news these past 2 weeks (and year, probably). I love the articles about it and the Twitter meme threads (even the war crimes ones!). There’s a 🤩 lovely piece in the NYT on the history and lore of Zelda and why these games are so great. There are popup illustrations and videos for the references, it’s fab.

Here are a couple good pieces in Polygon - one on crazy constructions, and one on why the bridge physics are so amazing to developers everywhere.

Some key points in the press/tweets: if you take a year to polish, you get great results; if you don’t fire staff but retain them from game to game, you get better games and deepen the world; if your last game sold 29 million copies, you can do more.

“ChatGPT can pass college entrance exams, so why can't it solve Skyrim's easiest puzzle?” In PC Gamer by Livingston. This uses the somewhat-hard-to-install mod here. “It took me a couple hours of instruction-following, downloading, installing, updating, copy-and-pasting, and setting up a UwAmp server, not to mention a ChatGPT account (paid) to wire up OpenAI's talents to Herika and an Azure account (also paid) to add a text-to-speech system so the NPC companion could talk to me.” I don’t think I’ll bother?

The Rosebush: A new online journal for interactive fiction theory and criticism.

Narrative Design 101 - some articles on Medium by Johnnemann Nordhagen that pass the first sniff tests.

Radical Content Generation in Unexplored 2: A Substack post in AI & Games. There are links to related articles on the procedural generation of the maps/dungeons.

Narrative Research (& One Practice)

STORYWARS: A Dataset and Instruction Tuning Baselines for Collaborative Story Understanding and Generation by Du and Chilton. “We design 12 task types, comprising 7 understanding and 5 generation task types, on STORYWARS, deriving 101 diverse story-related tasks in total as a multi-task benchmark covering all fully-supervised, few-shot, and zero-shot scenarios. Furthermore, we present our instruction-tuned model, INSTRUCTSTORY.”

TaleCrafter: Interactive Story Visualization with Multiple Characters, by Gong et al. An attempt at managing the longer-form character-driven comic narrative problems. “This paper proposes a system for generic interactive story visualization, capable of handling multiple novel characters and supporting the editing of layout and local structure. … The system comprises four interconnected components: story-to-prompt generation (S2P), text-to-layout generation (T2L), controllable text-to-image generation (C-T2I), and image-to-video animation (I2V).” The animation part is slight. There’s a repo, so hopefully try-able soon.

TinyStories by Eldan and Li. Interesting: “we introduce TinyStories, a synthetic dataset of short stories that only contain words that a typical 3 to 4-year-olds usually understand, generated by GPT-3.5 and GPT-4. We show that TinyStories can be used to train and evaluate LMs that are much smaller … or have much simpler architectures… yet still produce fluent and consistent stories with several paragraphs that are diverse and have almost perfect grammar, and demonstrate reasoning capabilities.”

RecurrentGPT: Interactive Generation of (Arbitrarily) Long Text by Zhou et al. With demo. “Since human users can easily observe and edit the natural language memories, RecurrentGPT is interpretable and enables interactive generation of long text.” Their demo is for controlling a story generation system. The concepts are similar to things that other AI-assisted writing tools use for memory and prompt management and similar to ways that discourse managed narrative generation projects work. You get the instructions in a modifiable form for the human-in-the-loop scenario. (I added the cats, instead of plants. It didn’t really work with the idea of “collecting cats” in the final output, imo.)

Creative Data Generation: A Review Focusing on Text and Poetry by Elzohbi and Zhao. “This paper aims to investigate and comprehend the essence of creativity, both in general and within the context of natural language generation. We review various approaches to creative writing devices and tasks, with a specific focus on the generation of poetry.”

“I’m Making Thousands Using AI to Write Books,” by Tim Boucher in Newsweek. He is writing with ChatGPT/4 and Anthropic’s Claude and illustrating with Midjourney. He is on his 97th book. The books are quite short (2K to 5K words). He has made only about two thousand dollars so far, we should be clear: “I sold 574 books for a total of nearly $2,000 between August and May. The books all cross-reference each other, creating a web of interconnected narratives that constantly draw readers in and encourage them to explore further.” Frankly, the interesting aspect of this scenario for me is the cross-referencing interconnected narratives, which is a very clever idea.

Data Science-y

Meerkat (github and wiki): I think I’ve posted about this Python lib for handling multimedia datasets in a pandas-like framework, but I really loved the demo of doing silver (rough-draft) labeling on PDFs this past week. And lots of us need a way to look at images inline too.

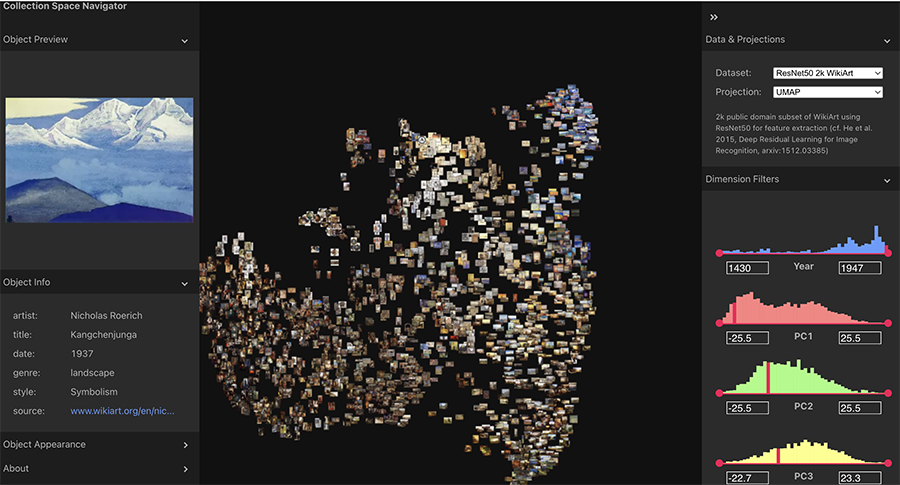

Collection Space Navigator, a nice multidimensional cluster-based image data explorer. Uses filters, t-SNE/UMAP/PCA and metadata to allow you to explore visual collections. Wiki-Art demo, on the upper right for the mountainy pictures:

Related, Arize Phoenix, another 3D explorer of embeddings (code). (H/t Leland McInnes.) Will also do clustering explorations with HDBSCAN.

MS Guidance library, for prompt template help with handlebars — it looks smart. Some help with the JSON formatted returns here.

Observable Plot has tooltips/interaction now!

String2String algorithms lib for string operations from StanfordNLP.

Book Recs

The Ferryman, by Justin Cronin (sf). A strange society of small islands, in which adults are “reborn” at 100 years or so; the population lives in luxury on Prospera or as servants on the Annex. The art on Prospera is predictably bad. Conspiracy and rebellion are brewing.

Infinity Gate, by MR Carey (sf). On a ruined Earth, an African scientist discovers travel to other universes. Soon she runs afoul of the Pandominium, a multiverse police state. This book is a combo of multiple character threads, with military sf and good AI characters. But it ends precipitously as the first part of a series (be warned).

The Pharmacist, by Rachelle Atalla (sf). I read this dystopic underground bunker story because I am enjoying Apple TV+’s Silo so much (and liked the books a lot). This was very dark and kind of depressing; people in power abusing people, people willing to be abused for a meal.

The Murder of Roger Ackroyd, by Agatha Christie (mystery). This is a Poirot classic, but the excessive “who was where when” mathematics and everyone concealing things, with relatively little character story, put me off a bit.

The Kraken Wakes, by John Wyndham (sf). Wyndham’s having a renaissance, and I must admit I quite like the writing style. This story involves a lot of indirect observation of events and guesswork. The message about climate change and the public reaction to glaciers melting is timely, as are the political blame-gaming and market collapse, and speculation about alien intelligences in conflict with ours. Samples of the wacky covers it has had and a good quote.

“Can you imagine us tolerating any form of rival intelligence on earth, no matter how it got here? Why, we can’t even tolerate anything but the narrowest differences of views within our own race.”

Game Recs

Zelda: Breath of the Wild. I have to play this before I can play Tears of the Kingdom, obviously. First I needed a Switch. I’m not far in, but I love exploring beautiful Hyrule and I really love the cartoony bad guys and their little icon reactions. (Fans: Don’t miss the NYT article I mentioned above in Game News and Furor.) I am reading tips online like “how to cook” because wow that’s not obvious! In my mid-month issue I linked to Stamen’s article on the Hyrule map design, and I was recently reminded how much I loved this project from Nassim’s Software:

Storyteller, the long-awaited template-filling game in which you slot in events and characters to fill out a story. It’s cute! People seem to be mostly saying it’s too short (here’s RPS’s review). I’m okay with short games. My primary critique is that I think more solutions should be allowed. There’s a bit of jiggling to see which characters will react the way the game wants you to play them. You can definitely have 2 female characters marry etc, but I think Snowy should also be allowed to love the dwarf Tiny.

No Man’s Sky: Still obsessed with it. I have acquired an A-class ship and I now also have a capital ship (freighter with another freighter to boss around; I won it after a dumb space fight in which I didn’t perform well but the captain nevertheless said “here you go”). I’ve maxed out my Tech Slots on my exosuit after a zillion Drop Ship expeditions. I have executed my first derelict freighter expedition. These freighters have random slime, automated weapons, and tainted metals to collect, along with logs saying something awful happened here. I am too smart to open the bio containment devices. Tip: To find these, buy “coordinates” from the scrap dealer in a space station. (You can’t save during the missions, be warned.)

TV Recs

★ Silo (sf), Apple TV+, ongoing. Based on Hugh Howey’s Silo book series, which I loved. This is excellent SF television! The setting is a community living in an underground silo of many levels; the outside atmosphere is poisonous. There is some kind of conspiracy of ignorance going on inside: old tech and historical “relics” (a Pez dispenser?) are illegal and the Judicial Branch seems to be pretty nasty. New sheriff nominee Juliette, a mechanical engineer, tries to find out why some people are dead and what they knew.

★ Ted Lasso s3 (dramedy?), Apple TV+. I wasn’t sure about last season’s dark turn, but this season has more than recuperated it. Lols and sentiment and great redemption arcs. Roy and Jamie! Jade!

Three Body (sf), the Chinese series. I acquired this with subtitles after hearing from a friend it was well-made (it is, and a bunch of money was spent on it including game and visual fx). There are 30 episodes; I’m around 12 in because I’m also still rewatching SG1. It didn’t need 30 eps (almost nothing happens in some), but parts of it are excellent. I’ll be really curious how the 8 episodes of Netflix’s version hold up in comparison and will report back.

Yellowjackets, s2. (Thanks to Jurie for reminding me it was on.) Well, that’s horror for you. No good can come of this and it’s not done yet. This season leaned into the wilderness-without-meds and PTSD psychosis. CW: Cannibalism.

Poem: “Watching My Friend Pretend Her Heart Isn’t Breaking”

On Earth, just a teaspoon of neutron star would weigh six billion tons. Six billion tons is equivalent to the weight of every animal on earth, including insects. Times three. Six billion tons sounds impossible until I consider how it is to swallow grief— just one teaspoon and one may as well have consumed a neutron star. How dense it is, how it carries inside it the memory of collapse. How difficult it is to move then. How impossible to believe anything could ever lift that weight. There are many reasons to treat each other with great tenderness. One is the sheer miracle we are here together on a planet surrounded by dying stars. One is we cannot see what anyone else has swallowed.

by Rosemerry Wahtola Trommer (h/t Joseph Fasano)

Welp, am I the only one kind of burned out from AI news right now? I bet not. I just want to play games and go camping. Writing this was much harder than usual. If you enjoy it and aren’t a paid subscriber, please consider it. I take off work (and boy did I this time) to write it up.

Best, Lynn (@arnicas on twitter, mastodon, and now bluesky I guess but it’s still shitposty)
