TITAA #45: Haunted AI Houses Need Poets

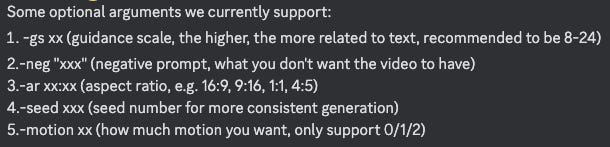

Cosmic Houses - Video Gen with Inits - Subnautica in VR - Llama2

I was planning to follow up on my mid-month intro article about the creepy MyHouse.wad game with some thoughts on liminal space, but then I read some things. First, Rose/House by Arkady Martine, a locked-room mystery about an AI-run house. The house has no doors. The AI, which is called a “haunt” by the local police, asks wryly, “What is a house without doors?” (A prison, thinks the detective. NB: there are only very minor spoilers here.)

Prompts are important with this AI, who may or may not be sane. Only one person is allowed in, a former student of the architect who built it. The detective gets in to investigate the death by telling it she is an institution, not a person; she is a police department. In our world, corporations are people and vice versa, so this isn’t a stretch. We generally get the impression that the AI is humoring us. It has its own agenda; it is not social, it does not need company, and it does not need to please people.

You might remember another insane house — Shirley Jackson’s Hill House. And that extraordinary beginning and ending, a loop of a labyrinth:

“No live organism can continue for long to exist sanely under conditions of absolute reality; even larks and katydids are supposed, by some, to dream. Hill House, not sane, stood by itself against its hills, holding darkness within… whatever walked there, walked alone.”

Echoed in some lines from Rose/House:

“Rose House, labyrinthine. In the non-light before dawn. There are soft footsteps in its hallways, across the floors of its halls and chambers; there are footsteps, no matter who is there to hear them. Rose House, singular, alone—turning in on itself.”

I went to reread The Poetics of Space, by Gaston Bachelard. I had misremembered it as more about space than about poetics, but in fact it’s mostly about poetry. Bachelard focuses on the positives of aloneness and attics and daydreaming, spending less time on nightmares and hauntings. But the link is there in the cellars, which contain labyrinths. Labyrinths contain villains plotting. In MyHouse.wad they are explicit and horrifying. Bruce Sterling, on AI art (see below), says we’re in a labyrinth now, and there are houses to build there too. AI is nested dolls of houses. Which are alone, or are they? They certainly contain memories.

The connection of house and memory to narrative is commonplace. Rose/House “lends itself to recall, to a looping of memory.” And: “A room is a sort of narrative when an intelligence moves through it, makes use of it or is constrained by it.” A poet of non-real spaces, Keith Waldrop, says in The House Seen from Nowhere, “From one window to the next the seasons turn round--spring flowers in the front yard while the kitchen gives onto ice and snow.” Some games, not just MyHouse.wad, are very good at bringing houses to life with memory narratives and re-making haunted spaces: I recommend the classic Gone Home and the breathtaking but melancholy What Remains of Edith Finch, which almost hides itself entirely behind its first puzzle: how to get in. You are not an institution. If you can recommend others, I’d like to hear about them.

Mark Danielewski of House of Leaves wrote a foreword to The Poetics of Space in which he shares a calligram about cosmic houses and poets, which reminds us of Susanna Clarke’s Piranesi.

This brings me, roundabout, to the image above: a cross-section of a haunted house made in Midjourney. I’ve made a lot of them. It’s a latent imagining of many houses in one. It has nonsensical geometry, like houses in dreams do: trees growing inside it, stairs that end nowhere. Is it all nonsense? Stairs that end nowhere were a feature of the Winchester House, and of the lesser-known Halcyon House in Washington, DC. Both houses were maze-like, continuous architectural projects, meant to confuse ghosts and prolong their owners’ lives as long as construction continued. Both are thought to be haunted. Perhaps because of bad bones. Both have doors that open on blank walls, stairs that go nowhere, rooms contained in rooms. Like Hill House and Rose/House.

We have spent a lot of time on how badly these AI tools make hands and how walls don’t always meet floors properly. But how crazy are the unreal architectures we can produce with these tools? No crazier than the dream architectures we see every night. Why aren’t there more poets and writers telling the stories found in these images? Asking questions like “what does it mean to animate a still life?” There is so much room in this cosmic house. Let’s build a house or a million inside the labyrinth. I hope you’ll join me down the corridor.

TOC (they will be links on the substack web page):

AI Creativity Tools (especially Animation, that SHOW1 thing, Other Procgen)

AI Creativity Tools

Stable Diffusion SDXL 1.0 is out! Running it comes with a bunch of associated needs: there are 2 models, the first the base image model, the second the refiner/upscaler. Or you can try it via the ClipDrop tools. Support is built into the new HF diffusers release, including support for training and ControlNet (yay!).
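If you go the diffusers route, here is a minimal sketch of chaining the two models, following the "base hands latents to the refiner" pattern from the diffusers SDXL docs. The model IDs are Stability AI's official Hugging Face repos. The 40 steps and the 0.8 handoff point are just example settings, and `split_steps` is a hypothetical helper of mine that roughly shows how the denoising work is divided. It assumes a CUDA GPU with enough memory for both fp16 models.

```python
# Sketch of the SDXL 1.0 "base + refiner" handoff with Hugging Face diffusers.
# Heavy imports live inside generate() so the helper below runs without a GPU.


def split_steps(num_inference_steps: int, high_noise_frac: float) -> tuple[int, int]:
    """Rough (base, refiner) step counts for a given handoff fraction.

    Hypothetical, illustrative helper: diffusers derives the exact cutoff from
    the scheduler's timesteps, so real counts can differ by a step.
    """
    base_steps = round(num_inference_steps * high_noise_frac)
    return base_steps, num_inference_steps - base_steps


def generate(prompt: str, num_inference_steps: int = 40, high_noise_frac: float = 0.8):
    import torch
    from diffusers import DiffusionPipeline

    # Model 1: the base model, which handles the high-noise part of denoising.
    base = DiffusionPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16, variant="fp16", use_safetensors=True,
    ).to("cuda")
    # Model 2: the refiner, reusing the base's second text encoder and VAE to save memory.
    refiner = DiffusionPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-refiner-1.0",
        text_encoder_2=base.text_encoder_2, vae=base.vae,
        torch_dtype=torch.float16, variant="fp16", use_safetensors=True,
    ).to("cuda")

    # The base stops early and returns latents instead of a decoded image...
    latents = base(
        prompt=prompt, num_inference_steps=num_inference_steps,
        denoising_end=high_noise_frac, output_type="latent",
    ).images
    # ...and the refiner resumes at the same point to finish the fine detail.
    return refiner(
        prompt=prompt, num_inference_steps=num_inference_steps,
        denoising_start=high_noise_frac, image=latents,
    ).images[0]
```

Usage would look like `generate("cross-section of a haunted house, stairs that end nowhere").save("house.png")`. With these settings, the base does roughly 32 of the 40 steps and the refiner does the last 8 or so.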

WavJourney: Compositional Audio Creation with Large Language Models. This one stands out because it will create an actual narrative, in different voices, with sound effects. It makes some odd grammar mistakes (which you can see in the subtitles), but it’s fascinating nonetheless. It sounds much better than the bad intonation we’ve heard before in experimental games (e.g., in my newsletter on Kraken).

Cool interpolation method using Controlnet by Clinton Wang, to animate a path between 2 input images. (Via dreamingtulpa’s newsletter.)
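I don't know the internals of Wang's ControlNet method. A common ingredient in diffusion interpolation demos, though, is spherical linear interpolation (slerp) between the two starting noise latents, so here is a generic NumPy sketch of that technique alone. The latent shape (4×64×64, which is what SD 1.x uses for a 512×512 image) and the 8-frame count are illustrative assumptions. Each in-between latent would still need to be denoised and decoded by a model to become a frame.

```python
import numpy as np


def slerp(v0: np.ndarray, v1: np.ndarray, t: float, eps: float = 1e-7) -> np.ndarray:
    """Spherical linear interpolation between two same-shaped arrays.

    Treats each array as one long vector and moves along the arc between them.
    This keeps the norm of Gaussian noise roughly constant, whereas a
    straight-line blend shrinks it toward the middle of the path.
    """
    a, b = v0.ravel(), v1.ravel()
    cos_omega = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    cos_omega = np.clip(cos_omega, -1.0, 1.0)
    if 1.0 - abs(cos_omega) < eps:
        # (Anti)parallel vectors: the arc is ill-defined, so fall back to a linear blend.
        return (1.0 - t) * v0 + t * v1
    omega = np.arccos(cos_omega)
    s = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / s) * v0 + (np.sin(t * omega) / s) * v1


# Example: 8 in-between noise latents shaped like SD 1.x latents for a 512x512 image.
rng = np.random.default_rng(0)
z0 = rng.standard_normal((4, 64, 64))
z1 = rng.standard_normal((4, 64, 64))
frames = [slerp(z0, z1, t) for t in np.linspace(0.0, 1.0, 8)]
```

The endpoints reproduce the two inputs exactly. For two orthogonal unit vectors, the midpoint stays at length 1, where a linear blend would drop to about 0.71.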

⭐️ Bruce Sterling on the Art of Text-to-Image Generative AI (h/t Nick Montfort). This is a lovely and thoughtful piece about AI generation’s madness. He spends a good amount of time on the unthinkable and the unimaginable, and on how we will look at some surrealism differently now. It’s a generous and bemused piece. I especially loved the last paragraph:

The beauty of the world is the mouth of a labyrinth. This is beauty, which is not of this world and cannot be judged by the standards of beauty that we had earlier. But I know that this labyrinth is my doom. I don’t know how long I’m going to have to put up with this. I’ve been in the labyrinth of artificial intelligence since I first heard of it. I’m not too surprised that there’s suddenly a whole host of labyrinths. Thousands of them. I don’t mind. I know it’s trouble, but it’s kind of a good trouble. I don’t mind living there. I’ll build a house in the labyrinth. I’ll put a museum in it. You’re not going to stop me. I’m happy to accept the challenge. I hope you’ll have a look at it.

Video Generation Init-Off!

Everyone’s been waiting for init images for video generation (or rather, animated GIF generation, which is what we really have). It’s here, in testing in 2 apps! The very concept of animating a still image with a necessarily imperfect model raises such interesting questions: What should move? The camera? Objects in the scene? New objects entering the scene? The environment (weather, lighting)?

What would it mean to animate a painting? A still life? Abstract art? A sculpture? For the right explorers and poets, this is going to be a fascinating time. Especially before models become “perfect.”

Gen2 with image: There’s been a lot of posting over the past 2 weeks (since AnimateDiff, which was in mid-month newsletter 44.5) about using an init image, usually from Midjourney, in Runway’s Gen2. It’s still just making gifs (i.e., short animations of a single static scene), but it’s fun when it works. It does NOT always work. For more surreal images, it often produces no motion. They’re collecting feedback, and this is one of the categories you can check. The more realistic the starting image, the more likely you are to get movement. You can’t control how strongly it uses the prompt vs. the image, which is a shame, so with an image init you should skip the prompt entirely: this is what folks are mostly doing when they try to animate their Midjourney images.

⭐️ Pika Labs also just opened up their beta via a noisy Discord (many pings). You can make a 3 sec video using either an image init or a prompt or both. It allows you to retry. And you can control various things like guidance, which is great (and more than Runway offers).
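For context on what a "guidance" setting usually controls: in most open diffusion models it is the classifier-free guidance scale. At each denoising step, the model's noise prediction without the prompt is pushed toward, and past, its prediction with the prompt. I can't confirm that Pika's setting works exactly this way, so treat this as a sketch of the standard formula, not of Pika's internals. The toy arrays stand in for real noise predictions.

```python
import numpy as np


def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    """Standard classifier-free guidance combination.

    noise_uncond:   the model's noise prediction with an empty prompt
    noise_cond:     its prediction with your prompt (and/or other conditioning)
    guidance_scale: 0 ignores the prompt, 1 is the plain conditional prediction,
                    larger values push harder in the prompt's direction.
    """
    return noise_uncond + guidance_scale * (noise_cond - noise_uncond)


# Toy 2-element "noise predictions" to show the effect of the scale.
uncond = np.array([0.2, -0.1])
cond = np.array([0.5, 0.3])
for scale in (0.0, 1.0, 7.5):
    print(scale, classifier_free_guidance(uncond, cond, scale))
```

Higher scales make the output follow the prompt more literally, at the risk of oversaturated or distorted results. That is why being able to tune the scale is useful when you mix an image init with a prompt.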

In a comparison on heavily stylized or surreal input, I thought Pika’s Beta won hands-down. There are some weird and fun surprises in Gen2 output, but it’s much harder to control and tends to veer far away from the input image. In contrast, Pika Labs is almost too stuck on the input image, but this is often better. I’ll give you some brief comparisons and point you to my gifs page for more examples (update: no one complained, but that link needed the “source” part stripped off to work; the correct URL is “https://github.com/arnicas/articles/blob/main/other_posts/ai_art/README.md#ai-art-illustrations”).

From an init image of a painting by magical realist Franz Sedlacek, Gen2 starts well but reinvents the whole concept by the end!

With Pika Labs, by contrast, I can ask for an animated ocean of mist and get some subtle, nice results.

On my gifs page, I show more image inits and output differences. I’ll be adding to it. There are interesting ones of open doors, and vultures, and a bat. I’ll be animating still lives, too.

Onto other news in this space…

The Blender Text-to-Video tools have a lot baked in, including text to video, text to audio, and text to image with various open models.

SHOW 1: The Simulation, formerly Fable, announced SHOW 1, a “show-runner” creation app demoed with a custom AI-created South Park episode. I gather they originally launched with an NFT-based simulated-world concept. Maybe not the best taste to do this during the writing and acting strikes? See the article in VentureBeat’s GamesBeat, and their kind of disappointing white paper (I said “wtf” a few times while reading it; it’s not actually a research paper). The motivation feels like a fandom-itch thing:

“Not only do I want to be able to create new episodes of shows that I love, I’d like to be in shows that I love. I’d like to be in Star Trek as an ensign or I’d like to be in South Park,” Saatchi said.

They use a “multi-agent simulation,” much like the one in the famous Park et al. paper on Generative Agents that I’ve covered. Because South Park scripts are already in GPT-4’s training data, they didn’t have to fine-tune a language model. They did fine-tune DreamBooth diffusion models on characters and backgrounds.

In order to generate a full south park episode, we prompt the story system with a high level idea, usually in the form of a synopsis and major events we want to see happen in each of the 14 scenes.
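SHOW 1's white paper doesn't publish code, and the real system is a multi-agent simulation. So this is only a toy illustration of the input format the quote describes: one synopsis plus a list of major events for each scene, turned into one writing prompt per scene. `EpisodeOutline`, `scene_prompts`, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EpisodeOutline:
    show: str
    synopsis: str
    scene_events: list[str]  # one short description of "major events" per scene


def scene_prompts(outline: EpisodeOutline) -> list[str]:
    """Turn a synopsis plus per-scene events into one writing prompt per scene."""
    total = len(outline.scene_events)
    prompts = []
    for number, events in enumerate(outline.scene_events, start=1):
        prompts.append(
            f"You are writing scene {number} of {total} of an episode of {outline.show}.\n"
            f"Episode synopsis: {outline.synopsis}\n"
            f"Major events in this scene: {events}\n"
            "Write the dialogue and stage directions for this scene only."
        )
    return prompts
```

Repeating the synopsis in every scene prompt is one simple way to keep separately generated scenes consistent with the episode's overall arc.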

Since they did the image generation, upscaling, animation, and other compositing offline, I was ultimately confused about whether this is meant to be interactive; I think their suggestion is that it will be, in the future. They hint provocatively at the tension between the simulation and the narrative. Overall, this feels like a big promissory note hiding a ton of post-production work on animation, audio, and imagery.

Procgen and Other Art

This Blender medieval city builder tool by Joshua Börner is AMAZING (h/t Alex Champandard). I’ll play with it on my August vacay, which starts as soon as I send this. Note that the buildings have a “realistic appearance from a distance and appear less detailed and realistic when observed up close. Plus, the buildings do not include interiors, ensuring higher overall performance.”

See also “Differential Growth in Geometry Nodes in Blender” by Alex Martinelli.
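Differential growth is a simple, well-known algorithm. A closed curve's nodes are pulled toward their neighbors, pushed away from any node that gets too close, and edges that stretch too far get a new node inserted. Over many iterations, the curve buckles into coral- or brain-like folds. Martinelli builds his version in Geometry Nodes; this is a plain NumPy sketch of the general technique, not a port of his node setup, and all the parameter defaults are made-up values.

```python
import numpy as np


def differential_growth_step(pts, attraction=0.05, repulsion=0.05, radius=1.0,
                             max_edge=0.5, noise=0.01, rng=None):
    """Advance a closed 2D curve (an N x 2 array of nodes) by one growth step."""
    rng = np.random.default_rng() if rng is None else rng
    prev_pts = np.roll(pts, 1, axis=0)
    next_pts = np.roll(pts, -1, axis=0)

    # Attraction: pull each node toward the midpoint of its two curve neighbours,
    # which keeps the curve smooth and connected.
    force = attraction * (0.5 * (prev_pts + next_pts) - pts)

    # Repulsion: push apart every pair of nodes closer than `radius`
    # (brute-force O(N^2), fine for a sketch; bigger runs would use a spatial index).
    diff = pts[:, None, :] - pts[None, :, :]        # diff[i, j] = pts[i] - pts[j]
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)                  # a node does not repel itself
    close = dist < radius
    safe = np.maximum(np.where(close, dist, 1.0), 1e-9)
    weight = np.where(close, (radius - safe) / safe, 0.0)
    force += repulsion * (weight[:, :, None] * diff).sum(axis=1)

    # A little jitter breaks symmetry so the curve starts to buckle and fold.
    force += noise * rng.standard_normal(pts.shape)
    pts = pts + force

    # Growth: insert a midpoint into every edge that has stretched past `max_edge`.
    grown = []
    n = len(pts)
    for i in range(n):
        a, b = pts[i], pts[(i + 1) % n]
        grown.append(a)
        if np.linalg.norm(b - a) > max_edge:
            grown.append(0.5 * (a + b))
    return np.array(grown)
```

To try it, start from a small circle of points (for example, 20 points around a unit circle), call the step function a few hundred times, and plot the result with matplotlib. The node count climbs as edges stretch and split.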

r/place 2023, the collaborative drawing project where people place one pixel at a time, has a timelapse video. People really love country flags on these things.

Great procgen web art tutorials at Gorilla Sun. The latest is on blobby soft bodies.

Games & Narrative

Recreating simple Minecraft in Python with Ursina (video tutorial). I hadn’t seen Ursina before, and it looks awesome. They say:

Easy to use mesh class for making procedural geometry

Built-in animation and tweening

Pre-made prefabs such as FirstPersonController, 2d platformer controller, editor camera

Lots of included procedural 3D primitives

Many shaders to choose from, or write your own with GLSL
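To give a flavor of how compact Ursina code is, here is a sketch of the classic "click to place or remove blocks" voxel demo. It is modeled on Ursina's own Minecraft-clone sample as I remember it, so check names against the version you install. `flat_world_positions` and `main` are my own hypothetical helpers. Ursina is imported inside `main()` only so the grid helper can run without Ursina or a display.

```python
def flat_world_positions(size=8):
    """(x, 0, z) grid positions for a flat size-by-size floor of blocks."""
    return [(x, 0, z) for z in range(size) for x in range(size)]


def main(size=8):
    # Imported here so the helper above works without Ursina installed.
    from ursina import Ursina, Button, scene, color, mouse, destroy
    from ursina.prefabs.first_person_controller import FirstPersonController

    app = Ursina()

    class Voxel(Button):
        """A clickable cube: left click builds on it, right click removes it."""

        def __init__(self, position=(0, 0, 0)):
            super().__init__(
                parent=scene,
                position=position,
                model="cube",
                origin_y=0.5,
                texture="white_cube",
                color=color.white,
                highlight_color=color.lime,
            )

        def input(self, key):
            # Ursina calls input() on entities for every key or mouse event.
            if self.hovered:
                if key == "left mouse down":
                    # Place a new block against the face under the cursor.
                    Voxel(position=self.position + mouse.normal)
                if key == "right mouse down":
                    destroy(self)

    for pos in flat_world_positions(size):
        Voxel(position=pos)

    FirstPersonController()  # one of the pre-made prefabs mentioned above
    app.run()
```

Calling `main()` opens a window where you walk around with WASD and the mouse. The prefab does the heavy lifting, which is a big part of Ursina's appeal.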

Jon Ingold’s talk at Develop:Brighton on narrative in games was covered by GamesIndustry.biz. Hmm, I have thoughts. But they will need to germinate.

Augmented Reality Space Invaders, a collab between Google and TAITO. It runs on iOS and Android phones, and you use the camera to set up the 3D scene for the space invaders to invade.

⭐️ The Game Narrative Resource Sheet (Summer 2023), crowdsourced by AFNarrative, has lots of links about writing game narrative. There’s another document on this topic authored by crapcyborg here, updated June 2023.

My favorite from the open-source Hugging Face AI game jam won! (See last issue.) The announcement: "Results of the Open Source AI Game Jam."

Data Science & NLP

Llama 2, not technically open source but available for commercial use, is the biggest model news… thanks to Meta for sharing the weights. Here is Phil Schmid’s coverage, though coverage is easy to find. Just take care in how you prompt it: it has secret formulae. Here’s an article and code for how to fine-tune it. The spacy-llm project has now added interfaces to Llama 2 and Claude. A few other models have also done pretty well these past two weeks, including some giving GPT-3.5 and GPT-4 a run for their money (FreeWilly 2, for example).
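The "secret formula" for the Llama 2 chat models is their prompt template. Each user turn is wrapped in `[INST] ... [/INST]`, and an optional system prompt sits inside `<<SYS>>` tags in the first turn. Here is a sketch that builds that string, following the format in Meta's reference chat code. The function name is mine. One caveat: many tokenizers add the `<s>` BOS token automatically, so if yours does, drop the literal `<s>` or you'll end up with it twice.

```python
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def llama2_chat_prompt(system, turns):
    """Build a Llama 2 chat prompt string.

    system: system prompt text ("" for none); it is folded into the first user turn.
    turns:  list of (user_message, assistant_reply) pairs; use None as the reply
            for the final turn you want the model to answer.
    """
    parts = []
    for i, (user, assistant) in enumerate(turns):
        if i == 0 and system:
            user = B_SYS + system + E_SYS + user
        segment = f"<s>{B_INST} {user.strip()} {E_INST}"
        if assistant is not None:
            # Completed turns are closed with the end-of-sequence token.
            segment += f" {assistant.strip()} </s>"
        parts.append(segment)
    return "".join(parts)


print(llama2_chat_prompt("You are a haunted house.", [("Who built you?", None)]))
```

Getting this wrong usually doesn't cause an error; the model just answers worse, which is why it's easy to miss.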

Jupyter Notebook 7 includes a built-in table of contents feature (thank goodness!) and interactive debugging.

A polars cookbook (based on a pandas one).

Videos in Stanford’s MLSystems talk series include a bunch of AI and ML topics.

Books

I’ve had a hard month, and everything seems to be dragging book-wise. I got halfway through the incredibly long Imajica and then took a break, which cut into my reading volume.

The Spare Man, Mary Robinette Kowal (sf-mystery). This is a pretty entertaining mystery on a luxury space cruise, but I also felt it dragged, maybe because of so much ongoing detail about the main character’s physical pain and disability from an accident years before. But if you like a Nick-and-Nora-esque thing in space, there are good sf details.

Rose/House, by Arkady Martine (sf-mystery). I found this very thought-provoking (as I said in the intro at the top) but felt it was a bit unfinished? I had many leftover questions, and not necessarily in a good way. I’m still deciding.

TV

I finally finished SG-1 but might need another long series. I hate waiting a week for each new episode, which is what’s going on with a lot of these:

The Afterparty s2 (Apple TV+). This remains very funny in its pastiches, as everyone at the murder scene tells their story in their style. Standouts have been a Jane Austen romance ep and a Wes Anderson filmette. It’s charming but in progress.

Hijack (Apple TV+). I wasn’t sure I’d be ok with this, but in fact it’s been a good watch — Idris Elba’s character is interesting, and the hijackers aren’t as expected. Totally unreal in the lack of people needing the toilet all the time, though. Also still in progress.

The Witcher s3 (Netflix). Still a load of fun, even when I didn’t always understand why they were going where they were going. This season wasn’t long enough.

Good Omens s2 (Amazon). I enjoyed it, although the ending was a gut twister. Watching David Tennant chew the scenery and swagger was top-notch fun.

Games

I’m still playing Zelda BOTW all the time. Eventually I’ll get to Tears. Sometimes when I go camping in the lovely French countryside, which I’m about to do a lot of, I feel like I’m in green Hyrule. Where are the poets writing odes to Hyrule? Do I need to do it myself?!

Potion Craft: Alchemist Simulator (Steam). I really like the physical crafting, where you grind plants, stir, and pump a bellows under the cauldron. I also like the map-based recipe discovery. My main dislike is the lack of places to find plants, but it’s in development! I do miss Strange Horticulture.

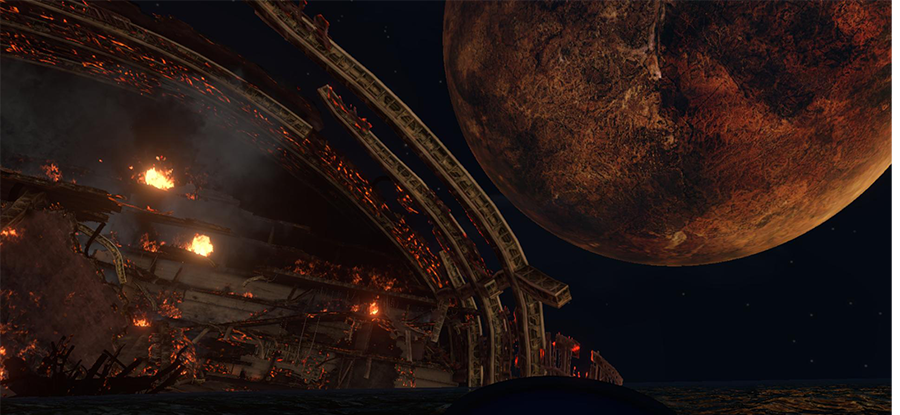

VR: I switched from compulsively playing No Man’s Sky in VR to Subnautica, because it seemed like a related game, and it very much is! You’ve crash-landed and have to find materials underwater to repair your lifepod and build things to survive. You discover blueprints as you explore wreckage.

Wow, it’s amazing in VR, if a little work to set up. To get the gamepad controller to work right in VR, I used a mod. If you need help doing it, comment/email me.

Swimming around feels like flying and it is beautiful in the reef shallows. But I am genuinely very creeped out by the dark, deep water that I can’t see into. It’s terrifying. Last weekend, while bobbing in the black water beside the burning ship under a giant red moon, I thought, “I can’t do it, I have to wait till daylight, it’s too fucking scary when I can’t see anything.” There are monsters in there.

Poem

The wind dying, I find a city deserted, except for crowds of

people moving and standing.

Those standing resemble stories, like stones, coal from the

death of plants, bricks in the shape of teeth.

I begin now to write down all the places I have not been—

starting with the most distant.

I build houses that I will not inhabit.

This was surprisingly hard to write, probably because I need to be on vacation reading and playing with stuff. I want to make a lot more animated gifs out of impossible artworks. I want to read more poems about houses. I want to learn some Blender. I need to listen to a lot more Weird Studies. I hope you are enjoying your summer, and staying cool and well-read.

As usual, drop me a note or a comment and let me know if you liked this!

Best, Lynn (@arnicas on Twitter, still, argh; also on Mastodon and Bluesky)
