TITAA #41: Spirit Scripts and Repeating Stars

Magical Scripts - NeRFs Gone Mad - LLM Woes - Stargates - Cities & Revolutions

I learned about the Atlas of Endangered Alphabets a couple months ago during a funding drive they did, tweeted by friend Jacob Garbe. The project members said in their blog post that they had discovered and documented amazing writing systems, including:

An African script based on colors

A divinatory script that incorporates the pawprints of jackals

Several West African scripts that involve symbols embroidered, printed or painted on fabrics

And, perhaps most remarkable of all, a sacred Indian script that is recited aloud while being written in chalk letters on a slate, then the slate is washed with turmeric water and the devotees drink it, thereby incorporating the divine letters and the spirits they represent.

Indeed their alphabets directory is full of fascinating folklore and histories about the creation of the writing systems. Medefaidrin “is one of more than a dozen examples of a ‘spirit script’ – that is, a writing system based on symbols or characters revealed in a dream or vision.” Of a Philippine islands script written on bamboo: “Historically, young Hanunuo men and women learned the Hanunuo script in order to write each other love poems.” And I learned about boustrophedonic writing, in which lines alternate between left to right and right to left, from the entry on Lontara, which also describes a language that has 5 genders including “a gender that embodies both male and female energies, and is thus revered as mystical and wise.”
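To make the boustrophedonic idea concrete, here is a toy Python sketch. It assumes plain strings stand in for lines of script and that every second line simply has its character order reversed; real boustrophedon scripts may also mirror the glyphs themselves, which this ignores. The function name is made up for illustration.

```python
def boustrophedon(lines):
    """Return the lines with every second one reversed.

    A toy model of alternating writing direction; hypothetical helper,
    not taken from the Atlas of Endangered Alphabets.
    """
    out = []
    for i, line in enumerate(lines):
        # Even-indexed lines run left to right; odd-indexed lines run right to left.
        out.append(line if i % 2 == 0 else line[::-1])
    return out


if __name__ == "__main__":
    for row in boustrophedon(["first line", "second line", "third line"]):
        print(row)
```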

There is a lot on their site about colonialism, genocide, religious persecution, and indigenous script creators; it’s a totally fascinating read. With lots of images. The script in the header image, Ditema tsa Dinoko, is a modern invention by linguists and designers: “The script is based on the traditional symbologies of southern Africa, which are still used today in certain contexts, such as in Sesotho litema or IsiNdebele amagwalo murals, the knowledge of which is traditionally kept by women.”

This month’s wrapup includes a little bit on beautiful font generation, new image generation models (SDXL, Dalle, Firefly), a ton of 3D research papers, and a lot of open source LLMs trying to provide alternatives to OpenAI but struggling with a DMCA. If you like these updates, please tune in for the new mid-month newsletter, which is for paid subscribers only and allows me to take the time to keep up better. Read online for these TOC sections as links:

AI Art (New Models, 3D and NeRFs, Video Gen, Misc Creative)

AI Art

I covered the excellent Midjourney v5 in the new mid-month newsletter, and now we have the improved DALL-E, Stable Diffusion “SDXL,” and Adobe Firefly’s beta, all in testing or preview form. I think Midjourney v5 is the winner, but it still lacks web site generation and an API, which are ongoing annoyances.

The new DALL-E is usable from Bing, and is actually quite good. (And btw, I really like Bing Chat’s help and links.) Two of my “difficult” prompts, “a penguin with a carnival mask beside a canal in Venice” and “an asteroid hitting a space ship in a nebula, science fiction film style of Ridley Scott”:

Stable’s SDXL is not as good at these prompts, but it's a nice model and I like their UI improvements. It feels to me a lot more like MJ 4, which is interesting. (You can find it in the beta Dreamstudio site and it’s rolling out elsewhere.)

Adobe’s Beta Firefly is not as good at this kind of content generation, and suffers from simplistic vocab blocking. “Strike” and “hit” are not allowed even with asteroids. The UI is nice though, a bit like the new Stability beta web ui. I played with the font-style generation and was more entertained. It’s not like other font-style-generation I’ve played with (on internal tools), more of a kind of masking and structure situation?

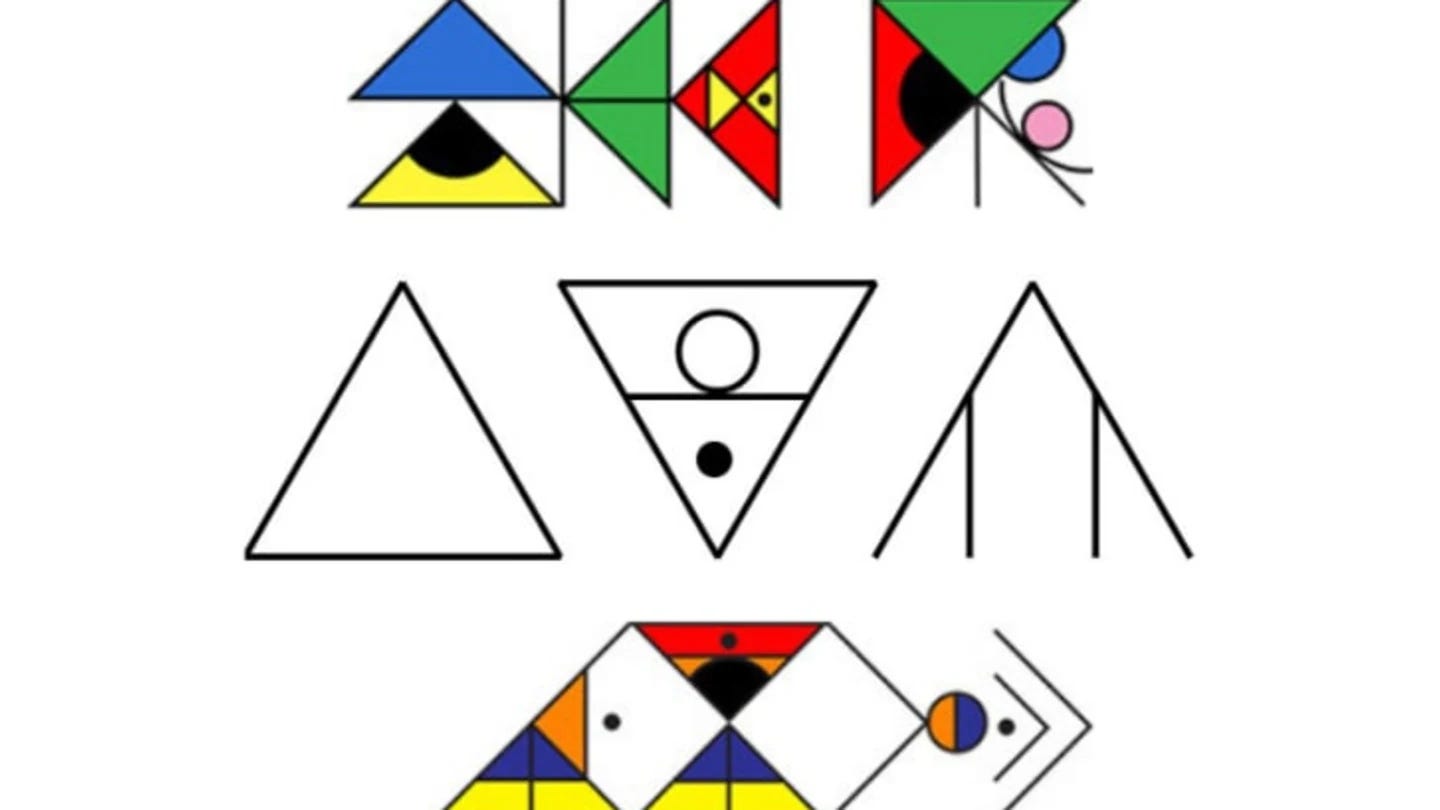

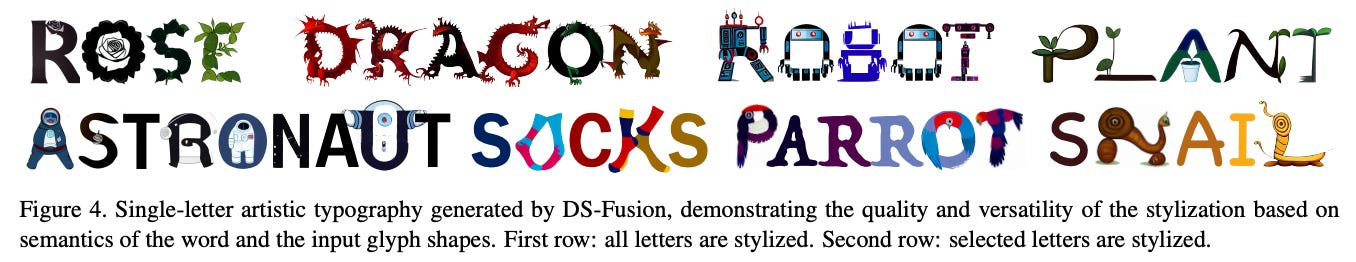

There was a great font-style paper 3 weeks ago (in newsletter #40.5, but breaking: Word-As-Image demo now up!), and then this DS-Fusion project came out which I love:

They take a glyph (a letter) and a style prompt (text) as input, and use a CNN discriminator with a diffusion model to combine the two in a well-formed output (“discriminated stylized diffusion” == DS).

3D and NeRFs

NeRFs, which I’ve talked about before, are a method of generating a 3D shape from images or video. There has been a ton of paper activity with NeRF in the past 2 weeks, with many papers doing the same things. Here’s a sample.

Architecture: Text2Room: with code, by Höllein et al. I love their trippy little videos.

Urban: GridNeRF (no code): large urban scene 3D generation. S-NeRF: Neural Radiance Fields for Street Views. Requires LIDAR data as a support method.

Editing NeRFs: Instruct NeRF-to-NeRF: Editing 3D scenes with text. Code will be in open source NerfStudio. Also Vox-E, which says code is coming too. Also SINE, which also says code coming soon. And here’s another editing model approach via sketching, SKED.

VMesh for efficient scene representation. Smaller: “a hybrid volume-mesh representation, VMesh, which depicts an object with a textured mesh along with an auxiliary sparse volume.” Also NeRFMeshing, for better extraction of meshes.

Compositional 3D Scene Generation. Code coming soon. From a voxel-based layout and prompts, generate a (blurry) 3D scene. This is the 3D version of various other sketch-based compositional diffusion generators. This looks a lot like Set-the-Scene at first glance. Loosely related: Instruct 3d-to-3d.

DreamBooth 3D from Google (Raj et al.), generate a 3D model from a few training images; so, no code, but someone external will implement it soon. 🙃 Also see Make-It-3D.

F2-NeRF, buttery smooth camera trajectories! Takes a little while to run, though. Another one with smooth animation, Localrf. I’m excited to see code (not yet) for DiffCollage, which will do long (wide) realistic images, 360s, and inpainting. The videos look amazing.

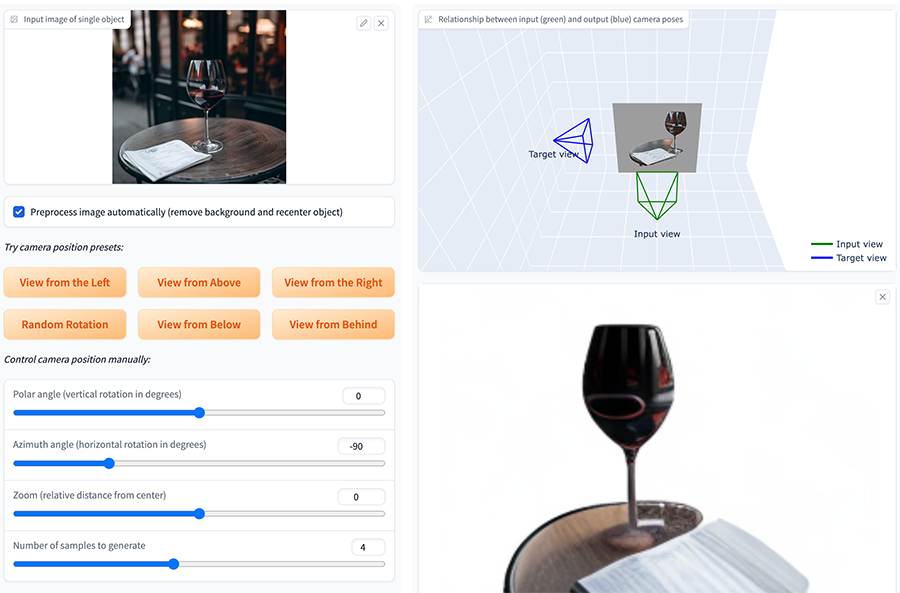

Try the Zero-1-to-3 demo on Hugging Face (project page). From an image, generate 3D and new camera angles on the object. It works best to remove the background. Not NeRFs, but cool.

Series A funded LumaLabs.ai released an API and will make you a 3d NeRF for $1, given video. You can fly your camera around inside it and generate a little video, and download various model-related artifacts. I had a link to one I made with their tools in last month’s newsletter.

🎥 “Given Again” by Jake Oleson is a Runway AI Film Festival eerie short that features tons of interesting 3D effects (zooming, panning) and NeRFy goodness on still clips.

Video Gen

It’s all pretty nascent and crappy quality for now, apart from a few using good tricks. This infinite zoom video maker from kadirnar is fab fun. I uploaded my results to social media. This is a lovely Arthur Rackham style story book forest zoom, posted by me on Mastodon, and here’s a mandala-y space portal thingy inspired by Stargate rewatching.

Text2Video Zero is out with code. The results generally are small with watermarks, and it doesn’t do story-related scenes yet. Also, you can fine-tune text-to-video with diffusers.

Gen2, Runway’s text2video, is in limited testing.

The Runway AI Film Festival had some interesting winners. Checkpoint is a well-illustrated look at AI art and human creativity from Áron Filkey & Joss Fong (I assume the same now-AI-famous Joss Fong?). Sam Lawton’s Expanded Childhood takes a simple idea, out-painting on childhood photos, and leans in on the idea of confused memories with an excellent sound track.

Misc Creative Links

I headlined with Amy Goodchild’s ChatGPT-3 renditions of Sol LeWitt’s wall drawings a month ago. She redid it with GPT4 (thanks, Kerry, for the pointer) and a few got better and a few worse. But she’s still funny.

High-res textures for rendering Townscaper models in Blender, instructions thread.

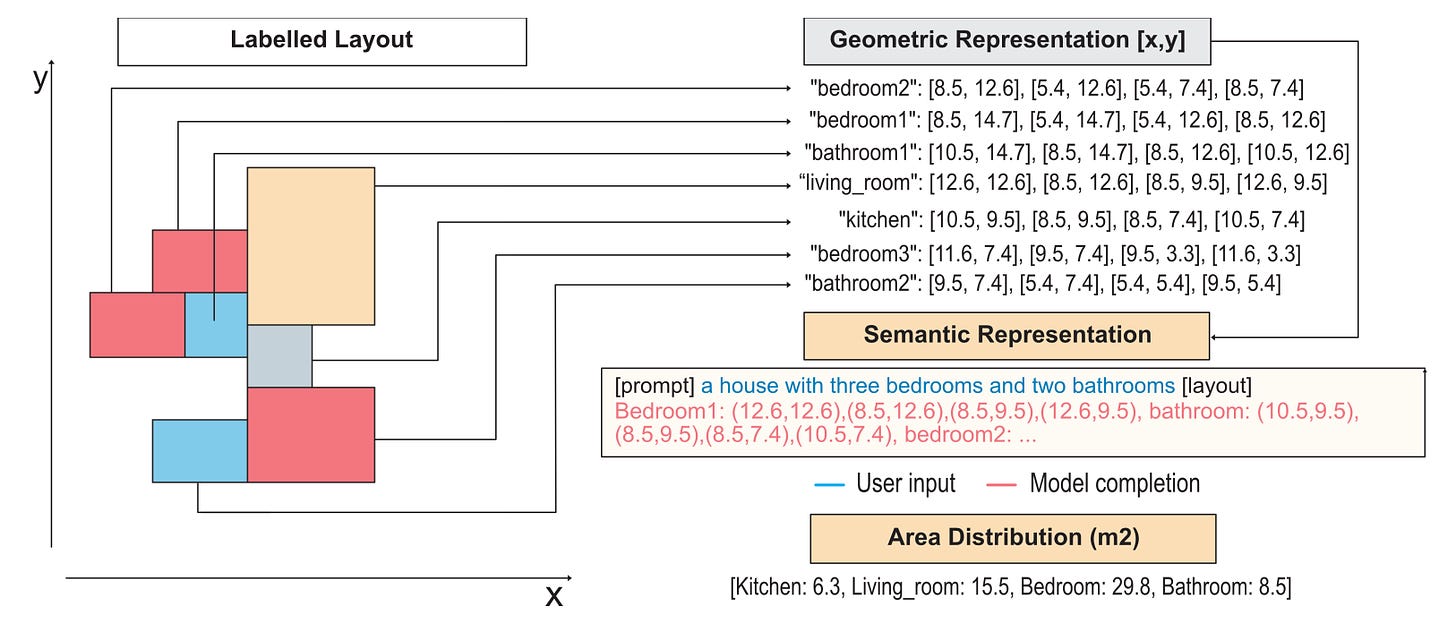

Architext (Galanos et al), an architecture generation paper and model. Architext’s labeled dataset joins natural language prompts with annotations specifying layout (a process that can be done for lots of creative model tasks but is a shitload of work).

Train Your Own Controlnet With Hugging Face Diffusers guide.

Ablating Concepts in Text-2-Image (code)… remove a thing from the image, or a style, like remove “Van Gogh” from a starry night to get a regular starry sky.

Code from Claire Silver to make a little side-scroller video environment combining P5.js, Midjourney images, RunwayML, and GPT4.

Copyright: Foundation Models and Fair Use, paper by Henderson et al (Stanford folk). Also this useful foundation model graph/database project for understanding the models and datasets and their relations.

“Artificial muses: Generative Artificial Intelligence Chatbots Have Risen to Human-Level Creativity,” paper by Haase et al. They tested with the “alternative uses” test, asking for “generation of multiple original uses for five everyday objects (pants, ball, tire, fork, toothbrush), which can also be called prompts.” Only 9.4% of the humans were more creative than GPT4, which was the most creative model tested.

The Prompt Artists, a paper by Chang et al at Google studying text2image artist prompts. “We find that: 1) the text prompt and the resulting image can be considered collectively as an art piece (prompts as art), and 2) prompt templates (prompts with “slots” for others to fill in with their own words) are developed to create generative art styles. We discover that the value placed by this community on unique outputs leads to artists seeking specialized vocabulary to produce distinctive art pieces (e.g., by reading architectural blogs to find phrases to describe images).”
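The “prompt template” idea is easy to sketch in a few lines of Python. This is only an illustration, assuming a template is a plain string with named slots; the template text, slot names, and function name are invented here and do not come from the paper.

```python
from string import Template

# Hypothetical template: the fixed words carry the "style",
# and the $slots are left for other people to fill in.
STYLE_TEMPLATE = Template("a $subject in the style of $style, $lighting lighting")


def fill_prompt(subject, style, lighting="soft"):
    """Fill the template's slots and return a finished text-to-image prompt."""
    return STYLE_TEMPLATE.substitute(subject=subject, style=style, lighting=lighting)


if __name__ == "__main__":
    print(fill_prompt("penguin with a carnival mask", "a storybook illustration"))
```

Swapping in specialized vocabulary for a slot (an architectural term, say) is the kind of thing the quoted passage describes artists doing to get distinctive outputs.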

A Catalog of AI Art Analogies by Aaron Hertzmann, this is good.

Games & Narrative

Fantastic looking 3D city builder in dev, Bulwark Falconeer in PC Gamer.

I really enjoyed this Wired story about Cart Life, which sounds like a miserable game but “arty” experience.

AI tool integrations with Unity from keijiro: ChatGPT AI Command and Apple Core ML Stable Diffusion. Also Unreal getting in the game: The start of some GPT utilities for UE5.

“Collaborative Worldbuilding for Video Games” excerpt from Kaitlin Tremblay’s new book. Snippets: “The game’s world should strive to be believable rather than realistic.” and “Engaging and compelling worlds don’t fill in all the details; they provide just enough to understand the world, but not too much where there’s no room to let the audience’s imagination run a little wild.”

Procedural Content Generation in Games ebook from 2016 is back online.

“Getting Started in Automated Game Design,” a video overview by Mike Cook.

This was a little polarizing on my social media: “‘UBISOFT GHOSTWRITER’ WILL GENERATE NPC PHRASES AND SOUNDS,” but Liz England, who saw the talk at GDC, said it was done in connection with writers and was quite excellent. (I also rec all her GDC tweets of talks.)

Narrative

M-SENSE: Modeling Narrative Structure in Short Personal Narratives, a paper by Vijayaraghavan and Roy I missed in Feb because of open tabs. There’s a new dataset of personal narratives from Reddit.

Can an AI Program Write a Good Movie? Fluff piece in the Guardian, but still.

Marvelous Things: an essay by Merve Emre about Italo Calvino’s themes in The New Yorker, found in an open tab. So extraordinarily prescient about a time of LLMs, narrative generation, algorithmic recommendations, reading and making up facts. In Invisible Cities, Marco Polo invents histories (hallucinates?) for Kublai Khan, having no knowledge of Asian languages.

At times all I need is a brief glimpse, an opening in the midst of an incongruous landscape, a glint of lights in the fog, the dialogue of two passersby meeting in the crowd, and I think that, setting out from there, I will put together, piece by piece, the perfect city, made of fragments mixed with the rest, of instants separated by intervals, of signals one sends out, not knowing who receives them.

About If On a Winter’s Night a Traveller, a book that is about all the books anyone could ever want, Emre says,

“It is the unfinished and unfinishable book; the book that is the counterfeit of all counterfeited books, their double and their negation. The peculiar inventiveness of the novel lies in the peculiar uninventiveness of the novels within it. Are they mass-produced imitations or originals? Good or bad? How can anyone tell the difference?”

NLP

I’ll focus on the open source and performant LLM news of the past couple weeks, cuz there is just too much going on, especially since the LLaMa/Alpaca news. Everyone wants an OS competitor to OpenAI (for many many reasons). You might also find this Stanford project useful: it shows the relationships among LLM models and datasets in graph/table form.

I covered the first LLaMa derivatives in the mid-month newsletter. Some recent big models are based on it, but it seems Meta is aggressively DMCA-pursuing LLaMa-based repos right now. GPT4All from Nomic, intended as an open source GPT4, says on their repo they are training on GPT-J now to avoid the LLaMa issue. I don’t know if Vicuna (chatbot) or ColossalChat from ColossalAI, also based on LLaMa, have similar plans.

Non-LLaMa Models:

CerebrasGPT, competitor open source approach to a GPT4. “These models are the first family of GPT models trained using the Chinchilla formula and released via the Apache 2.0 license.”

ChatRWKV, “like ChatGPT but powered by RWKV (100% RNN) language model, and open source.”

Crumb’s Instruct-GPT-J 6B tuned on Alpaca instructions. Dolly is similar, but the authors note that Alpaca dataset is CC Non-Commercial and so is Dolly.

OpenFlamingo (9B for now) from LAION, an open source alternative to DeepMind’s multimodal Flamingo. These models allow you to interleave images and dialogue/questions, for e.g. captioning or recipe generation from images.

Lit-LLaMa is Apache 2.0 licensed, from Lightning AI. Independent of the LLaMa weights/model.
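As a rough illustration of the “Chinchilla formula” mentioned in the CerebrasGPT item above: a common reading of it is about 20 training tokens per model parameter, and training compute is often approximated as 6 × parameters × tokens. Both numbers are rules of thumb assumed for this sketch, not something stated in the quoted announcement, and the function names are made up.

```python
TOKENS_PER_PARAM = 20  # assumed rule of thumb for compute-optimal training


def chinchilla_tokens(n_params):
    """Approximate compute-optimal training tokens for a model of n_params parameters."""
    return TOKENS_PER_PARAM * n_params


def approx_train_flops(n_params, n_tokens):
    """Common back-of-envelope estimate of training compute: 6 * N * D."""
    return 6 * n_params * n_tokens


if __name__ == "__main__":
    n = 13_000_000_000  # example size: a 13B-parameter model
    d = chinchilla_tokens(n)
    print(f"{n:,} params -> {d:,} tokens, ~{approx_train_flops(n, d):.2e} FLOPs")
```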

Btw, I tried Anthropic’s Claude’s online demo and was very impressed but am not sure how to get access.

This is (sadly) LLaMa news, but it’s creative output evidence: fine-tuning it to sound like Homer Simpson with Replicate.

More Tools & Papers & A Job

ChatGPT Outperforms Crowd-Workers for Text-Annotation Tasks, paper by Gilardi et al. I don’t have a comment yet except it makes some jobs cheaper/easier and eliminates other low-paying jobs.

Mordecai 3: A Neural Geoparser and Event Geocoder, paper and lib by Andy Halterman, update to Mordecai 2. “The system performs toponym resolution using a new neural ranking model to resolve a place name extracted from a document to its entry in the Geonames gazetteer. It also performs event geocoding, the process of linking events reported in text with the place names where they are reported to occur, using an off-the-shelf question-answering model.”

Transformers.js - run HF transformers models in your browser!

💻 Sponsored Job (my first!): “Early-stage tech venture aims to democratize the U.S. legal system through an ontological-based engineering approach to bring reliable generative AI to the legal domain. Seeking an NLP engineer for training and deployment of language models and the blending of structured data and language models. Remote, competitive. Contact don@syllogize.io about the position.”

Books

The Theory of Bastards (sf) by Audrey Schulman. My fav read of the month, but tough going. A woman who studies sexuality joins a research institute to study bonobo monkeys in captivity. She herself has a backstory of long-term crippling pain from misdiagnosed endometriosis, which forms a backbone plot here. It’s a world with embedded networked tech everywhere; and then a duststorm knocks out the power. Hard to describe, harrowing, “literary” sf.

Antimatter Blues (sf) by Edward Ashton. This is a sequel to Mickey7, which I really liked. It’s not quite as tight as the previous book, but if you like those characters and the critters, this is a good read about making deals to survive on hostile worlds, with hostile management.

The Destroyer of Worlds (fantasy) by Matt Ruff. A sequel to Lovecraft Country, which I loved. Ditto exactly what I said above: if you like these characters (I love them), it’s a good read, but it’s not quite as entrancing as book 1.

Observer (sf) by Robert Lanza & Nancy Kress. I guess this is a theoretical physics story with help from an SF writer. The prose is still pretty pedestrian. I rec mainly for the many-worlds physics, especially if you liked the show Devs? but there are some loose ends here, too.

The Curator (fantasy) by Owen King. Good writing, slow start, pretty horrific. After a popular social revolution (not very well organized), a young woman who was a domestic servant takes over the running of the “Museum of the Worker,” full of weird wax figures. Meanwhile, next door are a burned institute of psychical studies haunted by cats and a former embassy now occupied by a serial killer/torturer. Lots of gore and social woes, a dose of magical realism. (CW: cats and people are killed.)

TV

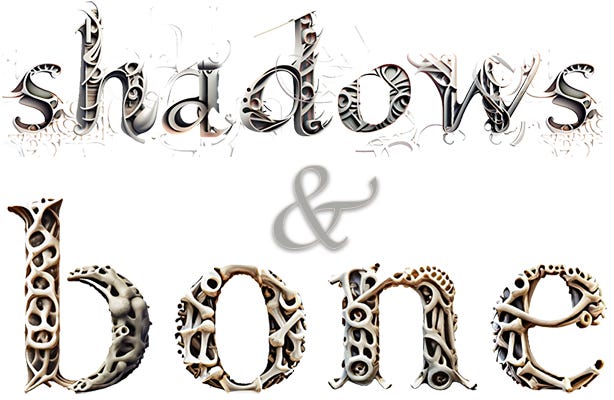

Shadow & Bone, S2 (Netflix). This is much more solid than S1, with the bad guy still disturbingly sexy, the Crows being fantastic, Mal seeming more likeable, and the addition of wonderful new characters. I binged it in a couple days.

Carnival Row S2 (Amazon Prime). Much darker and gorier than S1, there’s a winged monster sowing discord and bodies and a revolutionary group called the New Dawn who have dubious methods. Lots more racist white men with beards shouting about the evil dirty fae. Some deaths I did not expect.

Book of Boba Fett (Disney+). I watched this because I had been alerted it was needed before Mandalorian S3. It was fun! I liked the wild westy, criminals-of-Tatooine plotlines, and when Mando showed up in the last few episodes, I was psyched. The ep with Luke was a gift, frankly. After it was over I was quite bummed having too little SF to watch and started rewatching Stargate SG1 and obsessively playing No Man’s Sky in VR.

Games

The Independent Games Festival Grand Prize award winner, Betrayal at Club Low, is very—different. I haven’t played it for long, but if you want a puzzle game where everyone is a low-poly cool character vibing to the beat and your character carries pizzas and rolls dice, this is for you. It sure has attitude.

I’m desultorily playing a lot of things, including Deliver Us Mars, the sequel to Deliver Us the Moon, which I really liked. Deliver Us Mars is a lot harder, to its detriment; why don’t all games come with “ok, you can skip past this puzzle-leap-jump-shoot-climb if you’ve tried 100 times”?

VR

No Man’s Sky - I’m playing this a lot right now, more than I had before. They updated the VR a bit, which now has lots of helpful UI and I’m able to craft and achieve achievements. It still has some bugs: like screens appear behind me, so I spin a lot, which I call “exercise”; and space combat is prohibitively difficult with VR controllers. But I play in VR for that sense of awe I get in a truly alien world. I am mildly irritated that all the space stations are identical, but I am still entertained by the universe. There are even little Stargates, plus:

Walkabout Mini Golf VR. Ok, ok, it’s not bad! I resisted for a long time, then the spring sale hit and I caved. The golf courses are nuts. One does improve, too. Fine casual play!

Poem: “Timepiece”

To see in the punctured dust the sow bugs clocking
These constellations of buds or beetles time us
More than the cocks do,
More than the winding tides.
And the ants sharpen their spheres,
And the stars, their spiders:
The sky's spider turns:
Never you left your acorn place
For nightly signs and wanders
For ants like meters,
For the repeating stars.

by Rosalie Moore (1949) via caconrad88

Hope you made it this far! I am at that time of year where I start wanting to do nothing but sit at cafes in the sun with a spritz and a good book. Also, travel. I hope the workload lightens up to allow this.

Stay well, and thanks to those of you who upgraded to paid!
