TITAA #42: Liqueured Up in the SciFi Wild West

Alchemical Recipes - Sound & Vision - Living Diagrams - Anxious Agents - NLP

The monks of the Grande Chartreuse in the Alps make their famous green liqueur according to a recipe from a mysterious alchemical manuscript. The liqueur is a key ingredient in lots of cocktails, and its formula is a tightly held secret. The story goes that in 1605 the Duc d’Estrées gave the Carthusian order a manuscript for a healing liqueur conferring long life, never revealing where he had gotten it. It was so complex, requiring 130 plants and roots, that it took their apothecary until 1737 to formulate a feasible recipe. The “élixir végétal” now sold in small bottles inside wooden cases is closely related to this one. The liqueurs came three decades later.

The distillery site has a detailed history. I also watched this French video and in it they refer to the manuscript as a “grimoire,” if I’m not mistaken, which is a book of magic! In any case, the secret recipe is guarded by only 2 monks at a time. Nowadays they are aided by modern computer tools as they control and monitor the distillation, shown in the video. Locals help gather the plants, if I followed; but I suppose with 130 components, in mysterious proportions, the secret recipe remains quite safe.

Chartreuse is in the news because the monks have been told to scale back production and refocus on prayer, in part for environmental impact reasons. I lol-ed at the NYT article on the disturbing shortage, though:

“I stand with the monks,” said Tony Milici, the bar director at Rolo’s, a restaurant in Ridgewood, Queens. “But I am also responsible for running a sick beverage program.”

There is a good interview with a fellow (Gröning) who was allowed to film the monastery in 2006, after waiting 16 years for permission. Apparently some monks don’t stay, which isn’t a huge surprise given they have little time to themselves, own almost nothing, and don’t sleep through the night. He estimates 80% of the novices end up leaving and others are sent away as poor fits. Between this turnover, and the computer network they use, and this crazy article about corporate espionage I just skimmed… welp, let’s just say I’d read or watch a thriller about trying to get a secret liqueur recipe out of a monastery in the Alps.

Onto the AI… and a ton of great reads this month.

TOC (links in the web view):

AI Art Tools (Video & Sound, 3D/NeRF, Other Fun)

Books (a ton)

Game Recs (well, one: No Man’s Sky)

AI Art Tools

DeepFloyd “IF” is out, but weights only for research. Famous for being much better at text rendering! Back when I was writing about Peng Shepherd’s The Cartographers and fake towns on the map, I tried to generate pics of a road sign pointing to the town of “AGLOE” and couldn’t do it easily. Now I can! (It gets more unhappy when I ask for “AGLOE, NY” though. HF demo, does not render on Safari.)

🚀 📱 Speed Is All You Need, paper from Google folks running SD 1.4 on a Samsung mobile phone, very fast (under 12 seconds). Needs a GPU-equipped device. Also, this new project, MLC LLM, for running locally on mobile and laptop.

IconShop - generating SVG icons with text, code coming soon! Includes text + icon combo infilling and alterations.

Video & Sound

Advance clips of Adobe’s video generation tools in Firefly look promising. It’s a bunch of AI assistance for lots of parts of the video creation cycle, not just clip generation… storyboarding and pre-visualization, clip relighting/editing, animated text effects, search, etc. One lesson I see here is the value of product thinking wrt AI tool use; get a lot of people who know how to do user research for pain-points and opportunities, make UI, and build product. It’s not just about model training (unless your business is solely selling models).

I’m not …. a huge fan of the open source or Runway GEN2 text2video examples I’ve seen, tbh. The OS model watermarks bug me, and GEN2 seems like a high quality gif generator in that you can’t string camera actions/scenes together in a prompt yet. I see that some folks are having a lot of fun using GEN2, though; if you want to see examples recently, try this by TomLikesRobots and this Alice one by Merzmensch (for more chaotic simple fun, this by IXITimmyIXI is emoji-to-gif which I do appreciate :). In contrast, this Unreal Engine 5 render from Bilawal is terrific.

That said… there have been some really interesting research and tool-based releases for video and video-related audio stuff.

Soundini - this is wacky in a good way. Sound-guided video editing. Give an audio sample of a cracking fire and it will add a lit fire to a scene. I don’t know if this is how I want to edit video, but I’m up for trying it! (No code yet.)

Generative Disco, a system to generate music videos from music clips, by Liu et al (Columbia and HF). “From a music clip (depicted as a waveform), the system guides users to generate prompts that connect sound, language, and images. A pair of start and end prompts can parameterize the generation of video clips.” The video generation aspect is pretty limited in content (due to current SOTA limits), but this is a solid 20 page paper with system design UI and user study. If you’re into music videos, read!

The cherry picked examples on this diffusion-based video editing work look good: Align Your Latents, work with NVIDIA and SD folk.

An algorithm for fast, efficient frame interpolation, AMT by Li et al. “It aims to provide practical solutions for video generation from a few given frames (at least two frames).”

Track Anything, based on Meta’s OS Segment Anything, which was in my mid-month newsletter: Track objects thru video frames, for inpainting or editing out (e.g., the Marvel superheroes). Same folks (Jinyu Yang et al.) just released code for Caption Anything (see also issue 41.5) which uses ChatGPT and Segment Anything to caption parts of images… I had seen this but was just now struck by how cool their example is trying it on a Chinese painting, “Along the River During the Qingming Festival.” Art captioning is very hard. You can see a small discrepancy in this extract from their gif here — at the end, one character is referred to as a man and as a boy in different captions.

Bark rocketed to fame for text-to-speech generation because of Jonathan Fly’s fab experiments (“why is six afraid of seven?”) extending generation into randomness. It produces weird stuff Max Headroom-style, and we all know the glitch is fun. Jonathan’s modified code is here. I have played with the original and am pleasantly creeped out by the random array of voices it has.

Tango (code) will do audio sound fx generation: “TANGO can generate realistic audios including human sounds, animal sounds, natural and artificial sounds and sound effects from textual prompts.” I recently needed something like this and couldn’t find a usable one (but this is CCA-NC 4).

3D & NeRF

Blockade Labs users and devs are making inroads on plugins for Unreal, depth maps via API, NeRF stuff with LumaLabs, and other fun. I suggest you follow ScottieFoxTTV if you want to keep up (and aren’t on their Discord).

Mostly with no code yet, but:

Patch-based 3D Natural Scene Generation from a Single Example, code Mid-May? Love me a landscape generator. Next newsletter!

Avoiding the 3-heads on a corgi even if cute “Janus” problem of Stable DreamFusion here (code also coming).

HOS-NeRF: “HOSNeRF, a novel 360° free-viewpoint rendering method that reconstructs neural radiance fields for dynamic human-object-scene from a single monocular in-the-wild video.”

TextDeformer - geometry mesh manipulation by text, work from Adobe and others.

Total-Recon code exists! Given a video (from a “handheld RGBD sensor”), build a new scene from a new viewpoint (like a dog’s).

Other Fun

🎓 Tips on training generative models from John Whitaker. Pre-train on lots of data, fine tune on high quality data, plus other tips.

There’s an interesting collection of digital works on solar power and eco-tech up at Solar Protocol’s Sun Thinking. I especially like Everest Pipkin’s html-based interactive fiction piece and Allison Parrish’s solar-powered poetry.

What is AI Doing to Art? Article in Noēma covering photography’s copyright fight and labor issues.

🎓 Text-guided Image-and-Shape Editing and Generation: A Short Survey, Chao and Gingold.

🎓 A Primer and FAQ on Copyright Law and Generative AI for News Media by Quintais and Diakopoulos.

Games News

The Kraken Wakes. Game on steam with only 5 reviews, using a live LLM. “The exclusive interactive adaptation of John Wyndham's epic sci-fi/horror novel, featuring ground-breaking conversational gameplay… The Kraken Wakes has been created on Charisma.ai’s AI-powered conversation engine. You’ll speak to the game’s characters, and they'll listen and respond to what you say. There are no multiple-choice options here: you’re free to say what you like, and find out how your words will impact the story.” Reviews cite many hangs, crashes, and difficult to understand voice synthesis. But not all bad, either. I’ll report back on this later. :)

Ethan Mollick giving short stories to GPT4 and asking it to turn them into games. (Just showing it, not endorsing per se except I too would like to do this in a sensible way? Maybe better than the Kraken.)

🎙️ A podcast conversation about deep simulation in Dwarf Fortress and Caves of Qud, via PC Gamer. Ooohhh. And differences in design intent in the two games. The anecdote about players being able to see why there is blood on a staircase in DF (by going up levels) was great.

Put Unity Games into Hugging Face spaces (good grief! but hey, cool demo option).

There was a bunch of not awesome news in games media this week, including the shutting down of Vice’s games crit department (related, Samantha Greer on writing for games media and being totally broke), and a “bad AI” implementation in a game—it’s not really AI and does look bad. This latter is a real warning to do it right, I think, in that it was purportedly “using Natural Language Processing (NLP) to try and link the player’s input to the correct or desired answer.” One comment: “Zork (1977) had a better understanding of what your commands meant.” Raj Ammanabrolu pointed out that he had done 2 papers (arxiv.org/abs/1909.06283 and arxiv.org/abs/2001.10161) four years ago that predicted this with research into what players and devs want in augmenting text-based games.

The Videogame Industry Does Not Exist: Why We Should Think Beyond Commercial Game Production, open book by Brendan Keogh, that looks at the labor of indie and fringe game creators, with lots of interviews and discussion of financial precarity. We really need UBI.

Adding ChatGPT to Skyrim NPCs might make it even more entertaining to be a tourist in that world (but not out yet and terrible speech synth imo). As you know, I like big open world tourism. I’ll trial that mod when it’s ready.

Narrative and Agents

Why so much Big Bang Theory?

“Hi Sheldon! Creating Deep Personalized Characters from TV Shows.” A task and dataset and baseline attempt. “Imagine an interesting multimodal interactive scenario that you can see, hear, and chat with an AI-generated digital character, who is capable of behaving like Sheldon from The Big Bang Theory, as a DEEP copy from appearance to personality.”

SkyAGI: “SkyAGI implements the idea of Generative Agents and delivers a role-playing game that creates a very interesting user experience.” “SkyAGI provides example characters from The Big Bang Theory [again?] and The Avengers as a starting point. Users could also define customized characters by creating config json files.” Refers to the generative agents in LangChain I mentioned in #41.5.

In the genre of understanding how to work with LLM models as agents, we have three papers in different directions. A paper by Mehta et al. looks at using interactive chat in a Minecraft environment to guide agents to build things better. What can we do to help them? Another, by Coda-Forno et al., looks at inducing “anxiety” in GPT 3.5 (it’s already anxious!) and how that affects output: “Crucially, GPT-3.5 shows a strong increase in biases when prompted with anxiety-inducing text. Thus, it is likely that how prompts are communicated to large language models has a strong influence on their behavior in applied settings.” And Chen finds that people prefer dialogue agents that are extroverted, open, conscientious, agreeable, and non-neurotic. Surprise!

“Multi-Party Chat: Conversational Agents in Group Settings with Humans and Models,” which features training on a new dataset of role-playing character interactions (to be released) (from Meta). I wonder if Sheldon is in here too.

I had a handful of other NPC agent frameworks/papers, and a ton of games links, in the mid-month #41.5.

The Co-Author interface code, for human-LLM creative interactions. Paper and related dataset materials.

“A decomposition of book structure through ousiometric fluctuations in cumulative word-time,” by Fudolig et al (thanks Ted). I put this here so I can show off this insane yet compelling diagram which is looking back at you. Their thesis is that there are cyclic patterns of “danger” in longer fiction works.

Data Science Stuff

Slides from Leland McInnes of UMAP fame on use of the ThisNotThat lib for making interactive exploration of clusterings. It looks like Ian Johnson/enjalot is making something like this too?

Observable Plot has a spiffy new website!

Awesome Svelte and D3 by Sebastian Lammers.

NLP

AI playground for comparing LLM models, another one. Useful! I tried the new Open Assistant, the effort to develop an open-source ChatGPT model, against GPT 3.5 (not even ChatGPT or GPT4). I was disappointed by its current state. It bullshitted when asked what “cli-fi” is, didn’t explain what silver labeling in NLP refers to, and can’t do haiku poems. I mean, GPT3.5 does bad ones, but understands the instructions. GPT3.5 got the other questions right too.

I do not have access to Bard yet, so no comment on its amazing skillz. Nor the new coding GPT.

Millions of Wikipedia articles in many languages in embeddings from Cohere (text, vectors, metadata).

Phonetic Word Embedding Suite (PWESuite). Handles: correlation with human sound similarity judgement, nearest neighbour retrieval, rhyme detection, cognate detection, sound analogies.
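If "nearest neighbour retrieval" over embeddings is new to you, here is a tiny sketch of the general idea. To be clear, this is not PWESuite's API; the vectors are made up by me for illustration (I just placed rhyming words close together), and `cosine` and `nearest` are hypothetical helper names.

```python
import math

# Made-up toy vectors (an assumption for illustration, not real phonetic embeddings).
TOY_EMBEDDINGS = {
    "cat": [1.0, 0.0, 0.2],
    "hat": [0.9, 0.1, 0.2],
    "dog": [0.0, 1.0, 0.1],
    "log": [0.1, 0.9, 0.1],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def nearest(query, table, k=1):
    """Return the k words whose vectors are most similar to the query word's vector."""
    scored = [
        (cosine(table[query], vec), word)
        for word, vec in table.items()
        if word != query
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [word for _, word in scored[:k]]

print(nearest("cat", TOY_EMBEDDINGS))
print(nearest("dog", TOY_EMBEDDINGS))
```

With real embeddings you would swap the toy table for the trained vectors and probably use a vector library instead of pure Python loops.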

Is ChatGPT a Good Sentiment Analyzer? A Preliminary Study by Wang et al. “ChatGPT exhibits impressive zero-shot performance in sentiment classification tasks and can rival fine-tuned BERT, although it falls slightly behind the domain-specific fully supervised SOTA models.” Few-shot prompting can improve it. ChatGPT is better at polarity shift (e.g. negation) than BERT.

🚀 Scaling Transformer to 1M tokens and beyond with RMT: “By leveraging the Recurrent Memory Transformer architecture, we have successfully increased the model's effective context length to an unprecedented two million tokens, while maintaining high memory retrieval accuracy.” This paper caused a lot of head explosions, because this context is bigger than many book series. It will open the door to much longer and smarter text generation problems. Also, meanwhile, see LongForm models and datasets.
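For intuition only, here is a toy sketch of the segment-plus-memory loop as I understand the general recurrent memory idea: chop the long input into segments, and carry a small memory from one segment to the next. This is not the paper's code. `toy_segment_model` is a hypothetical stand-in for a real transformer pass, and its "memory" is just a running sum and count.

```python
from typing import List, Tuple

def split_into_segments(tokens: List[int], segment_len: int) -> List[List[int]]:
    """Chop a long sequence into fixed-size segments (the last may be shorter)."""
    return [tokens[i:i + segment_len] for i in range(0, len(tokens), segment_len)]

def toy_segment_model(memory: List[float], segment: List[int]) -> Tuple[List[float], float]:
    """Hypothetical stand-in for a model pass over (memory + segment).

    The memory here is [running_sum, running_count]; the output is the
    running mean, which depends on every segment seen so far.
    """
    total, count = memory
    total += sum(segment)
    count += len(segment)
    return [total, count], total / count

def run_recurrent(tokens: List[int], segment_len: int) -> Tuple[List[float], List[float]]:
    """Process segments in order, passing the updated memory forward each time."""
    memory = [0.0, 0.0]
    outputs = []
    for segment in split_into_segments(tokens, segment_len):
        memory, out = toy_segment_model(memory, segment)
        outputs.append(out)
    return memory, outputs

final_memory, outputs = run_recurrent(list(range(1, 11)), segment_len=4)
print(final_memory, outputs)
```

The point of the toy: each call only ever sees one short segment plus a fixed-size memory, yet the last output still reflects the whole sequence.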

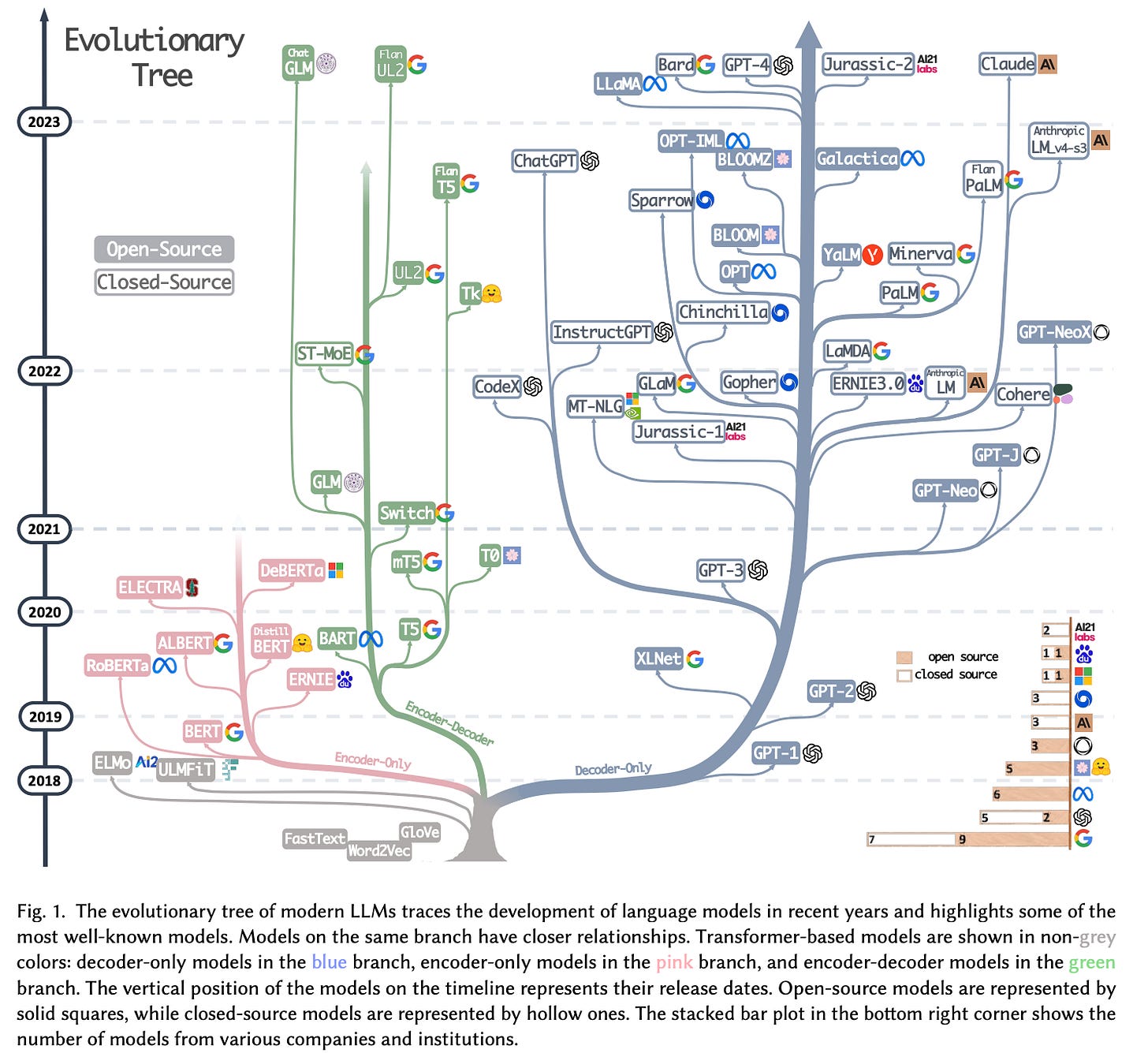

🎓 Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond, the paper with the tree diagram of evolution that Yann LeCun clarifies here:

What's called "encoder only" actually has an encoder and a decoder (just not an auto-regressive decoder). What's called "encoder-decoder" really means "encoder with auto-regressive decoder." What's called "decoder only" really means "auto-regressive encoder-decoder."
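One way to make "auto-regressive" concrete is the attention mask. A minimal sketch, assuming the usual convention that 1 means "position i may look at position j": a non-auto-regressive model sees the whole sequence, while an auto-regressive one only sees positions up to the current one. The function names are mine, not from any library.

```python
def bidirectional_mask(n):
    """Every position may attend to every other position."""
    return [[1] * n for _ in range(n)]

def causal_mask(n):
    """Position i may attend only to positions j <= i (auto-regressive)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def visible_positions(mask, i):
    """Indices that position i is allowed to look at under the given mask."""
    return [j for j, allowed in enumerate(mask[i]) if allowed]

for row in causal_mask(4):
    print(row)
print(visible_positions(bidirectional_mask(4), 1))
print(visible_positions(causal_mask(4), 1))
```

Under the causal mask, token 1 can only see tokens 0 and 1, which is what lets the model generate left to right.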

🎓 A CookBook of Self-Supervised Learning.

🎓 Seb Raschka updated his “Understanding Large Language Models” article.

🎓 Yoav Goldberg’s lovely article on Reinforcement Learning for LLMs (or “rlhf”). Why not just supervised learning… and note he focuses not on creative tasks but getting things right.

Books

There were a few repeated themes this month: Western feels, embedded surveillance tech, women figuring out who they are. A ton of great reads.

Camp Zero, by Michelle Min Sterling (sf). “Cli-fi”, or climate-oriented science fiction, where the Wild West is the northern wastes of Canada. This is a feminist (dys)topia, kind of. The story has two main focal views: A group of prostitutes servicing “clients” building a “campus” in a Canadian ghost town, with the main character a Korean-American woman undercover for her pimp CEO boss who invented an embedded computing tech; and an all-women army group setting up their own community on a base in the north. Lots of class, race, gender, inequality, and abusive tech here. I loved it, it’s beautiful writing, but will not be to all tastes.

Poster Girl, Veronica Roth (sf). Really well-done character setup and execution here. The remnant loyalists of an overthrown totalitarian government live in a guarded, starving prison ghetto. The former cult-y regime scored everyone’s behavior based on constant observation via implanted network devices. Their propaganda “poster girl” is offered release if she tracks down a child “re-homed” by the now dead regime leaders, among them her father. She’s not convinced the new government is better. (CW: suicide.)

Daughter of Doctor Moreau, Silvia Moreno-Garcia (fantasy). Here the Wild West is a remote Mexican hacienda, where Dr. Moreau is doing experiments on animals, and a lot of men are awful. The doctor’s daughter, who grew up with some of the hybrids, has to figure out who she wants to be. There is romance.

The Strange, Nathan Ballingrud (sf). The Wild West is Mars, with weird mining colonies and mushrooms (alert, Annihilation fans). There is a mineral, “the strange,” mined in the wilderness, and needed to help robot servants become sentient. Earth has dropped out of touch, crime is on the rise, and a tough teen girl chases bandits to find a stolen video of her lost mother. True Grit on Mars.

⭐️ Lone Women, Victor LaValle (fantasy). The Wild West is really the Wild West, with women homesteaders. Our main character is a black woman traveling with a heavy crate, trying to escape a family tragedy in the wilderness. The difficulties of settling in a remote northern town, with suspicious do-gooders and bad guys and soon strange deaths… I LOVED this. CW: violence (Victor writes horror).

Assassin of Reality, by Marina Dyachenko, Sergey Dyachenko (fantasy). This is the sequel to Ukrainian fantasy Vita Nostra about a linguistic/philosophical magic school, which I loved and some hated. There is more existential study and rebellion in this one, as Sasha tries to fight her advisor’s and teachers’ responses to her power as “the password,” a construct outside the grammatical structures that govern the rest of the magic system. More horrible deaths, many possible universes, but especially trigger warnings for school exam situations. Diagrams of possibility come alive and perceive YOU drawing them. The first book was more structured; this one has fewer humiliations.

Just One Damned Thing After Another by Jodi Taylor (sf). Not a bad time travel humor romp? Back to the dinosaur age, with lots of tea. The idea of historians being so hard-hitting and fit made me laugh (sorry, historians).

Game Recs

No TV, because I’m still rewatching all of Stargate SG-1 and it has 10 seasons (I’m on 6). I reached an ending in Betrayal at Club Low, I deem it a funny albeit not very long game; the dice-pizza apparatus was fine and not too complicated in use.

Still obsessively playing No Man’s Sky in VR. I’m trying to figure out why and what it’s doing for me, despite some grind and content issues. I’ve been under work pressure with reviews I can’t control, and I think NMS is offering a world where I can set the agenda and control things for myself.

It has visual tourism I appreciate: I mean, the stained glass world was really jaw-dropping, next level. And that one was refreshingly empty of space junk, while most planets are littered with stuff to pick up or unbury. It’s a junky universe, even though it feels a lot like the Wild West with aliens. Like in real life solo travel, I can decide to detour and check out local (alien) ruins at any time. They are a bit repetitive, it’s true. Knowledge Stones are frustratingly slow to deliver (a word at a time), but I do appreciate that my ability to communicate is improving over time. In real life, I learn French expressions from Instagram at night, so how different is this? 🤷‍♀️

I’m unhappy that there is a capitalist technology story about strip mining mineral resources to build or buy better tech toys (better shields! a personal stargate!), and almost every alien encounter is about sales. I guess most crafting games are like this. I don’t usually love them, but my motivation to see the universe more efficiently is winning here. I try to cover the holes I strip mine, especially where my base is, because even if I live in a shack on a shitty ice planet, it’s MY shitty ice planet! I spend a lot of time walking. If only it actually burned calories IRL. I accidentally ran over some animals once and I still feel nervous when I drive; I was promised a more efficient jetpack if I saved up minerals, wasn’t I?!

I can’t convey the look of these worlds in a tiny picture, but I take a lot of snaps.

When the achievement music ramps up and a sign appears in the sky, like a death notice in Hunger Games, only it’s for “Arnicas, Cataloguer of Minerals” or “Traveller Who Walked 1000 <whatevers>” — I smile. I like this.

Poem: Sanctuary

Suppose it’s easy to slip into another’s green skin, bury yourself in leaves and wait for a breaking, a breaking open, a breaking out. I have, before, been tricked into believing I could be both an I and the world. The great eye of the world is both gaze and gloss. To be swallowed by being seen. A dream. To be made whole by being not a witness, but witnessed. --Ada Limón

Ada Limón is continuing her stint as Poet Laureate of the US. I dug into the archives and enjoyed this Ezra Klein interview with her, especially when they discuss moving away from cities and still being happy and productive.

In two weeks I can hopefully share an AI art poetry project I worked on, stay tuned.

Best, Lynn (@arnicas on twitter, mastodon, and now bluesky, god help me)

informative news letter

Wow! You covered so many topics in here but it all flows well and the poetry at the end knocks it out of the park!

Ousiometric is something I never heard of before. Anything out there on how it relates with pure semiotics?

In terms of using ousiometrics for generative plot - I think it'd be cool to visualize something that shows a timeline rather than the difficult to read pinwheel thing. There's also issues with 'theory of mind' and the 'arrow of time' with some of the COTS story generators I've toyed with before. Like with Sudowrite, I did an autogenerated sequence of chapters. Then in the actual text body, all the characters in the first chapter were talking about things they shouldn't know about until chapter 5 or later. Etc.

Great stuff!